
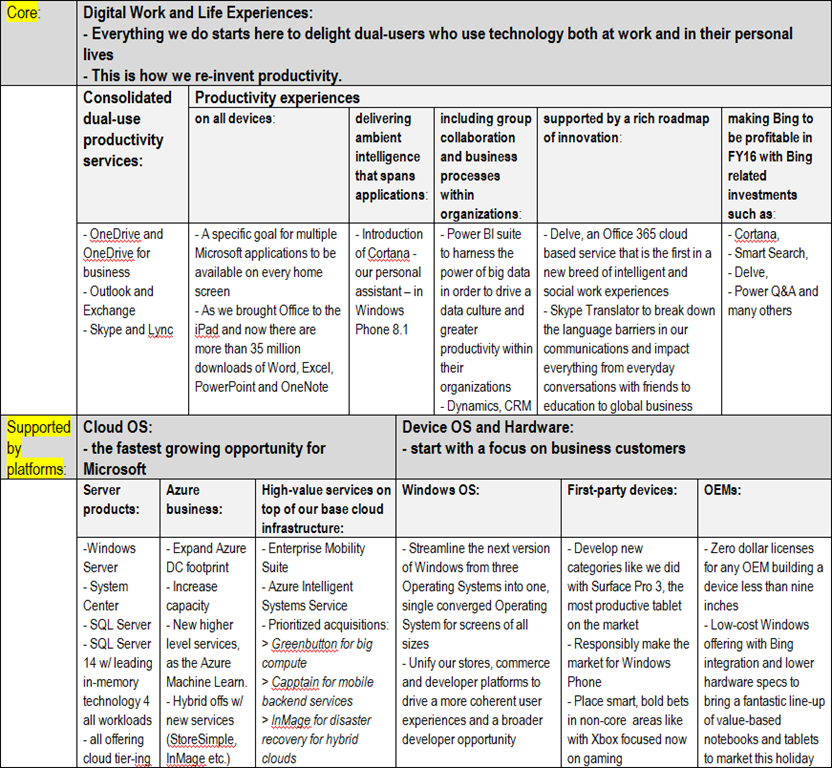

Microsoft chairman: The transition to a subscription-based cloud business isn’t fast enough. Revamp the sales force for cloud-based selling.

See also my earlier posts:

– John W. Thompson, Chairman of the Board of Microsoft: the least recognized person in the radical two-men shakeup of the uppermost leadership, ‘Experiencing the Cloud’, February 6, 2014

– Satya Nadella on “Digital Work and Life Experiences” supported by “Cloud OS” and “Device OS and Hardware” platforms–all from Microsoft, ‘Experiencing the Cloud’, July 23, 2014

May 17, 2016: John Thompson: Microsoft Should Move Faster on Cloud Plan in an interview with Bloomberg’s Emily Chang on “Bloomberg West”

The focus is very-very good right now. We’re focused on cloud, on the hybrid model of the cloud. We’re focused on the application services we can deliver not just in the cloud but on multiple devices. If ever I would like to see something change, it’s more about pace. From my days at IBM [Thompson spent 28 years at IBM before becoming chief executive at Symantec] I can remember we never seemed to be running or moving fast enough. That is always the case in the established enterprise. While you believe that you’re moving fast in fact you’re not moving as fast as a startup.

June 2, 2016: Microsoft Ramps Up Its Cloud Efforts – Bloomberg Intelligence’s Mandeep Singh reports on “Bloomberg Markets”

If you look at their segment revenue, 43% is from Windows and hardware devices. That is the part where it is hard to come up with a cloud strategy to really migrate that segment to the cloud very quickly. The infrastructure side is 30%, and that is taken care of, and Office is the other 30%, where they have a good mix. It is really the other 43% of revenue where they have to figure out how to accelerate the transition to the cloud.

Then Bloomberg’s June 2, 2016 article (written by Dina Bass) came out with the following verdict:

Microsoft Board Mulls Sales Force Revamp to Speed Shift to Cloud

Board members at Microsoft Corp. are grappling with a growing concern: that the company’s traditional software business, which makes up the majority of its sales, could evaporate in a matter of years — and Chairman John Thompson is pushing for a more aggressive shift into newer cloud-based products.

Thompson said he and the board are pleased with a push by Chief Executive Officer Satya Nadella to make more money from software and services delivered over the internet, but want it to move much faster. They’re considering ideas like increasing spending, overhauling the sales force and managing partnerships differently to step up the pace.

The cloud growth isn’t merely nice to have — it’s critical against the backdrop of declining demand for what’s known as on-premise software programs, the more traditional approach that involves installing software on a company’s own computers and networks. No one knows exactly how quickly sales of those legacy offerings will drop off, Thompson said, but it’s “inevitable that part of our business will be under continued pressure.”

The board members’ concern was born from experience. Thompson recounts how fellow director Chuck Noski, a former chief financial officer of AT&T, watched the telecom carrier’s traditional wireline business evaporate in just three years as the world shifted to mobile. Now, Noski and Thompson are asking whether something similar could happen to Microsoft.

“What’s the likelihood that could happen with on-prem versus cloud? That in three years, we look up and it’s gone?” Thompson said in an interview, snapping his fingers to make the point.

Small, but Growing

Nadella has said the company is on track to make its forecast for $20 billion in annualized sales from commercial cloud products in fiscal 2018. Still, Thompson said, the cloud business could be even further along, and the software maker should have started its push much earlier. Commercial cloud services revenue has posted impressive growth rates — with Azure product sales rising more than 100 percent quarterly — but the total business contributed just $5.8 billion of Microsoft’s $93.6 billion in sales in the latest fiscal year.

Thompson praised the technology behind smaller cloud products, such as Power BI tools for business analysis and data visualization and the enterprise mobile management service, which delivers apps and data to various corporate devices. But the latter, for example, brings in $300 million a year — just a sliver of overall annual revenue, which will soon top $100 billion, Thompson said.

The board is examining whether Microsoft has invested enough in its complete cloud lineup, Thompson said. It’s not just about developing better cloud technology — it’s a question of how the company sells those products and its strategy for recruiting partners to resell Microsoft’s services and build their own offerings on top of them. Persuading partners to develop compatible applications is a strong point for cloud market leader Amazon.com Inc., he said.

Thompson declined to be specific about what the company might change in sales and partnerships, but he said the company may need to “re-imagine” those organizations. “The question is, should it be more?” he said. “If you believe we need to run harder, run faster, be less risk-averse as a mantra, the question is how much more do you do.”

Cloud Partnerships

Analysts say Microsoft should seek to develop a deeper bench of partners making software for Azure and consultants to install and manage those services for customers who need the help. Microsoft is working on this, but is behind Amazon Web Services, said Lydia Leong, an analyst at Gartner Inc.

“They are nowhere near at the same level of sophistication, and the Microsoft partners are mostly new to the Azure ecosystem, so they don’t know it as well,” she said. “If you’re a customer and you want to migrate to AWS, you have this massive army that can help you.”

In the sales force, Microsoft’s representatives need more experience in cloud deals — which are generally subscription-based rather than one-time purchases — and how they differ from traditional software contracts, said Matt McIlwain, managing director at Seattle’s Madrona Venture Partners. “They haven’t made enough of a transition to a cloud-based selling motion,” he said. “It’s still a work in progress.”

Microsoft declined to comment on the company’s cloud strategy or any changes to sales and partnerships for this story, and director Noski couldn’t be reached for comment.

One-Time Purchases

The company’s dependence on demand for traditional software was painfully apparent in its most recent quarterly report, when revenue was weighed down by weakness in its transactional business, or one-time purchases of software that customers store and run on their own PCs and networks. Chief Financial Officer Amy Hood in April said that lackluster transactional sales were likely to continue.

Microsoft’s two biggest cloud businesses are the Azure web-based service, which trails top provider Amazon but leads Google and International Business Machines Corp., and the Office 365 cloud versions of e-mail, collaboration software, word-processing and spreadsheet software. Microsoft’s key on-premise products include Windows Server and traditional versions of Office and the SQL database server.

Slumps like last quarter’s hurt even more amid the company’s shift to the cloud, which has brought a lot of changes to its financial reporting. For cloud deals, revenue is recognized over the term of the deal rather than providing an up-front boost. They’re also lower-margin businesses, squeezed by the cost of building and maintaining data centers to deliver the services. Microsoft’s gross margin dropped from 80 percent in fiscal 2010 to 65 percent in the year that ended June 30, 2015.
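The difference between up-front and ratable recognition is easy to see with numbers. The following is a minimal Python sketch with invented figures: a hypothetical $360,000 deal booked either as a one-time license or as a 36-month subscription. The function names and amounts are illustrative assumptions, and actual revenue-recognition rules are considerably more involved than an even monthly split.

```python
# Hypothetical example: one $360,000 deal, recognized two different ways.
# Figures and function names are illustrative assumptions, not Microsoft data.

def upfront_schedule(total: float, months: int) -> list[float]:
    """One-time purchase: the whole amount is recognized in the first month."""
    return [total] + [0.0] * (months - 1)


def ratable_schedule(total: float, months: int) -> list[float]:
    """Subscription: revenue is spread evenly over the contract term."""
    return [total / months] * months


def by_year(schedule: list[float]) -> list[float]:
    """Sum monthly revenue into consecutive 12-month buckets."""
    return [sum(schedule[i:i + 12]) for i in range(0, len(schedule), 12)]


deal, term = 360_000.0, 36
print(by_year(upfront_schedule(deal, term)))  # [360000.0, 0.0, 0.0]
print(by_year(ratable_schedule(deal, term)))  # [120000.0, 120000.0, 120000.0]
```

Over the full term both paths yield the same $360,000; what changes is that the subscription contributes only a third of it in the first year, so a slump in one-time sales is not immediately offset by cloud growth.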

“This business [is] growing incredibly well, but the gross margin of that is substantially lower than their core products of the olden days,” said Anurag Rana, an analyst at Bloomberg Intelligence. “How low do they go?”

‘Different Model’ [of doing business for subscription-based software]

It’s jarring for some investors, but the other option is worse, said Thompson.

“That’s a very different model for Microsoft and one our investors are going to have to suck it up and embrace, because the alternative is don’t embrace the cloud and you wake up one day and you look just like — guess who?” Thompson doesn’t finish the sentence, but makes it clear he’s referring to IBM, the company where he spent more than 27 years, which he says is “not relevant anymore.” IBM declined to comment.

The pressure is good for Microsoft, Thompson said — pressure tends to result in change.

“You can re-imagine things when you’re stressed. It’s a lot easier to do it when you’re stressed because you feel compelled to do something,” Thompson said. “I see a lot of stress at Microsoft.”

MediaTek’s next 10 years’ strategy for devices, wearables and IoT

For what happened last year, see MediaTek is repositioning itself with the new MT6732 and MT6752 SoCs for the “super-mid market” just being born, plus new wearable technologies for wPANs and IoT are added for the new premium MT6595 SoC [this same blog, March 4, 2014]. The last 10 years’ strategy was incredible!

Enablement is the crucial differentiator for MediaTek’s next 10 years’ strategy, just as it was for the last 10 years. Therefore it will be presented in detail below, as follows:

I. Existing Strategic Initiatives

I/1. MediaTek CorePilot™ to get a foothold in the tablet market and to conquer the high-end smartphone market

I/2. MediaTek’s exclusive display technology quality enhancements

I/3. CorePilot™ 2.0 especially targeted for the extreme performance tablet and smartphone markets

II. Brand New Strategic Initiatives

II/1. CrossMount: “Whatever DLNA can plus a lot more”

II/2. LinkIt™ One Development Platform for wearables and IoT

II/3. LinkIt™ Connect 7681 development platform for WiFi enabled IoT

MWC 2015: MediaTek LinkIt Dev Platforms for Wearables & IoT – Weather Station & Smart Light Demos

MediaTek Labs technical expert Philip Handschin introduces us to two demonstrations based on LinkIt™ development platforms:

-Weather Station uses a LinkIt ONE development board to gather temperature, humidity and pressure data from several sensors. The data is then uploaded to the MediaTek Cloud Sandbox where it’s displayed in graphical form.

-Smart Light uses a LinkIt Connect 7681 development board. It receives instructions over a Wi-Fi connection, from a smartphone app, to control the color of an LED light bulb.

II/4. The best free resources for Wearables and IoT

II/5. To enable a new generation of world-class companies

III. Stealth Strategic Initiatives (MWC 2015 timeframe)

III/1. SoCs for Android Wear and Android based wearables

Before going into these details, however, let’s understand the strategic reasoning behind all that!

March 5, 2015: MediaTek CMO Johan Lodenius* at MWC 2015

* MediaTek appoints Johan Lodenius as its new Chief Marketing Officer [press release, Dec 20, 2012]

Next, here is a historical perspective (as per my blog) on MediaTek’s progress so far:

– First, I would recommend reading the “White-box (Shanzhai) vendors” and “MediaTek as the catalyst of the white-board ecosystem” parts of my Feb 21, 2011 post, Be aware of ZTE et al. and white-box (Shanzhai) vendors: Wake up call now for Nokia, soon for Microsoft, Intel, RIM and even Apple!, in order to understand the recipe for its success over the last 10 years ⇒ Johan Lodenius NOW:

“MediaTek was the pioneer of manufacturable reference design”

– Then it is worth taking a look at the following posts directly related to MediaTek if you want to understand the further evolution of the company’s formula of success:

#2 Boosting the MediaTek MT6575 success story with the MT6577 announcement — UPDATED with MT6588/83 coming in Q4 2012 and 8-core MT6599 in 2013 (the MT6588 was later renamed MT6589). On the chart below of the “Global market share held by leading smartphone vendors Q4’09-Q4’14” by Statista, the effect of the MT6575 is clearly visible (from Q3’12 on), as it enabled a huge number of 3rd-tier or no-name companies, predominantly from China, to enter the smartphone market quickly and extremely competitively (and contributed to the failure of Nokia’s new strategy as well)

#6 MT6577-based JiaYu G3 with IPS Gorilla glass 2 screen of 4.5” etc. for $154 (factory direct) in China and $183 internationally (via LightTake)

#13 MediaTek’s ‘smart-feature phone’ effort with likely Nokia tie-up

#16 UPDATE Aug’13: Xiaomi $130 Hongmi superphone END MediaTek MT6589 quad-core Cortex-A7 SoC with HSPA+ and TD-SCDMA is available for Android smartphones and tablets of Q1 delivery

#24 Eight-core MT6592 for superphones and big.LITTLE MT8135 for tablets implemented in 28nm HKMG are coming from MediaTek to further disrupt the operations of Qualcomm and Samsung

#42 MediaTek MT6592-based True Octa-core superphones are on the market to beat Qualcomm Snapdragon 800-based ones UPDATE: from $147+ in Q1 and $132+ in Q2

#67 MediaTek is repositioning itself with the new MT6732 and MT6752 SoCs for the “super-mid market” just being born, plus new wearable technologies for wPANs and IoT are added for the new premium MT6595 SoC

#93 Phablet competition in India: $258 Micromax-MediaTek-2013 against $360 Samsung-Broadcom-2012

#128 MediaTek’s 64-bit ARM Cortex-A53 octa-core SoC MT8752 is launched with 4G/LTE tablets in China

#205 Now in China and coming to India: 4G LTE True Octa-core™ premium superphones based on 32-bit MediaTek MT6595 SoC with up to 20% more performance, and up to 20% less power consumption via its CorePilot™ technology

#237 Micromax is in a strategic alliance with operator Aircel and SoC vendor MediaTek for delivery of bundled complete solution offers almost equivalent to cost of the device and providing innovative user experience

#281 ARM Cortex-A17, MediaTek MT6595 (devices: H2’CY14), 50 billion ARM powered chips

As an alternative, I can recommend the February 2, 2015 presentation by Grant Kuo, Managing Director of MediaTek India, at the IESA Vision Summit 2015 event in India:

– MediaTek Journey Since 1997 → Emphasis on the turnkey handset solution: 200 engineers ⇒ 30-40 engineers, time-to-market down to 4 months → Resulting in a 70% MediaTek share with local brands in India by 2014

– The Next Big Business after Mobile – Partnering for New Business Opportunity – Innovations & Democratization → Everyday Genius and Super-Mid Market

I. Existing Strategic Initiatives

I/1. MediaTek CorePilot™ to get a foothold in the tablet market and to conquer the high-end smartphone market

July 15, 2013:

Technology Spotlight: Making the big.LITTLE difference

No matter where the mobile world takes us, MediaTek is always at the forefront, ensuring that the latest technologies from our partners are optimized for every mobile eventuality.

To maximize the performance and energy efficiency benefits of the ARM big.LITTLE™ architecture, MediaTek has delivered the world’s first mobile system-on-a-chip (SoC) – the MT8135 – with Heterogeneous Multi-Processing (HMP), featuring MediaTek’s CorePilot™ technology.

ARM big.LITTLE™ pairs high-performance CPUs with power-efficient CPUs on a single SoC (two of each in the MT8135). MediaTek’s CorePilot™ technology uses HMP to dynamically assign software tasks to the most appropriate CPU or combination of CPUs according to the task workload, thereby maximizing the device’s performance and power efficiency.
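The task-placement idea behind HMP can be illustrated with a toy Python model. This is a minimal sketch, not MediaTek’s CorePilot scheduler: the per-task load metric, the hypothetical UP_THRESHOLD and DOWN_THRESHOLD values and the round-robin spreading over cores are all assumptions chosen for illustration, and the default of two big and two LITTLE cores mirrors the MT8135.

```python
from dataclasses import dataclass

# Hypothetical thresholds on a task's recent CPU load (0.0 to 1.0);
# these are illustrative assumptions, not CorePilot parameters.
UP_THRESHOLD = 0.70    # busier than this: move the task to a big core
DOWN_THRESHOLD = 0.30  # lighter than this: move it back to a LITTLE core


@dataclass
class Task:
    name: str
    load: float               # recent utilization, 0.0 to 1.0
    cluster: str = "LITTLE"   # tasks start on the power-efficient cluster


def place(task: Task) -> str:
    """Choose a cluster; between the two thresholds the task stays put."""
    if task.cluster == "LITTLE" and task.load > UP_THRESHOLD:
        task.cluster = "big"
    elif task.cluster == "big" and task.load < DOWN_THRESHOLD:
        task.cluster = "LITTLE"
    return task.cluster


def assign(tasks: list[Task], big_cores: int = 2, little_cores: int = 2) -> dict[str, str]:
    """Place every task, then spread each cluster's tasks over its cores
    round-robin. Under HMP both clusters run at once, so all cores can be busy."""
    cores = {"big": [f"big{i}" for i in range(big_cores)],
             "LITTLE": [f"LITTLE{i}" for i in range(little_cores)]}
    counters = {"big": 0, "LITTLE": 0}
    result = {}
    for t in tasks:
        cluster = place(t)
        core_list = cores[cluster]
        result[t.name] = core_list[counters[cluster] % len(core_list)]
        counters[cluster] += 1
    return result


tasks = [Task("game", 0.95), Task("browser", 0.80), Task("music", 0.10), Task("sync", 0.05)]
print(assign(tasks))
# {'game': 'big0', 'browser': 'big1', 'music': 'LITTLE0', 'sync': 'LITTLE1'}
```

The gap between the two thresholds provides hysteresis, so a task whose load hovers around a single cut-off does not bounce between clusters on every scheduling decision.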

In our recently produced whitepaper, we discussed the advantages of HMP over alternative forms of big.LITTLE™ architecture, and noted that while HMP overcomes the limitations of other big.LITTLE™ architectures, MediaTek’s CorePilot™ maximizes the performance and power-saving potential of HMP with interactive power management, adaptive thermal management and advanced scheduler algorithms.
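Adaptive thermal management can be pictured as a feedback loop that caps the big cores’ clock when the chip gets hot. The sketch below is a hypothetical illustration only: the frequency steps, the 85 °C and 75 °C thresholds and the one-step-per-sample policy are assumptions, not figures from MediaTek’s whitepaper.

```python
# Hypothetical operating points for the big cluster, in MHz (not MT8135 data).
BIG_FREQ_STEPS_MHZ = [600, 1000, 1400, 1700]
TEMP_LIMIT_C = 85.0   # assumed temperature above which to throttle
TEMP_RESUME_C = 75.0  # assumed temperature below which to step back up


def next_cap_index(cap_index: int, temp_c: float) -> int:
    """Lower the frequency cap one step when too hot, raise it one step when cool."""
    if temp_c > TEMP_LIMIT_C and cap_index > 0:
        return cap_index - 1
    if temp_c < TEMP_RESUME_C and cap_index < len(BIG_FREQ_STEPS_MHZ) - 1:
        return cap_index + 1
    return cap_index  # between the thresholds: keep the current cap


def simulate(temps_c: list[float]) -> list[int]:
    """Return the big-cluster frequency cap chosen after each temperature sample."""
    idx = len(BIG_FREQ_STEPS_MHZ) - 1  # start uncapped
    caps = []
    for t in temps_c:
        idx = next_cap_index(idx, t)
        caps.append(BIG_FREQ_STEPS_MHZ[idx])
    return caps


print(simulate([70, 88, 90, 80, 72, 70]))
# [1700, 1400, 1000, 1000, 1400, 1700]
```

Stepping gradually rather than jumping straight to the lowest frequency is one way to keep sustained performance as high as the thermal budget allows.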

To learn more, please download MediaTek’s CorePilot™ whitepaper.

Leader in HMP

As a founding member of the Heterogeneous System Architecture (HSA) Foundation, MediaTek actively shapes the future of heterogeneous computing.

HSA Foundation is a not-for-profit consortium of SoC and software vendors, OEMs and academia.

July 15, 2013:

MediaTek CorePilot™ Heterogeneous Multi-Processing Technology [whitepaper]

Delivering extreme compute performance with maximum power efficiency

In July 2013, MediaTek delivered the industry’s first mobile system on a chip with Heterogeneous Multi-Processing. The MT8135 chipset for Android tablets features CorePilot technology that maximizes performance and power saving with interactive power management, adaptive thermal management and advanced scheduler algorithms.

Table of Contents

- ARM big.LITTLE Architecture

- big.LITTLE Implementation Models

- Cluster Migration

- CPU Migration

- Heterogeneous Multi-Processing

- MediaTek CorePilot Heterogeneous Multi-Processing Technology

- Interactive Power Management

- Adaptive Thermal Management

- Scheduler Algorithms

- The MediaTek HMP Scheduler

- The RT Scheduler

- Task Scheduling & Performance

- CPU-Intensive Benchmarks

- Web Browsing

- Task Scheduling & Power Efficiency

- SUMMARY

Oct 29, 2013:

CorePilot Task Scheduling & Performance

Mobile SoCs have a limited power consumption budget.

- With ARM big.LITTLE, SoC platforms are capable of asymmetric computing, whereby tasks can be allocated to CPU cores in line with their processing needs.

- From the three available software models for configuring big.LITTLE SoC platforms, Heterogeneous Multi-Processing offers the best performance.

- MediaTek CorePilot technology is designed to deliver the maximum compute performance from big.LITTLE mobile SoC platforms with low power consumption.

- The MediaTek CorePilot MT8135 chipset for Android is the industry’s first Heterogeneous Multi-Processing implementation.

- MediaTek leads in the heterogeneous computing space and will release further CorePilot innovations in 2014.
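The whitepaper’s table of contents names the three software models: cluster migration, CPU migration and Heterogeneous Multi-Processing. The toy Python simulation below contrasts what each model lets the scheduler do at one instant on an SoC with n big and n LITTLE cores. The HEAVY threshold, the snapshot view and the “queued” label for tasks waiting for a time slice are simplifying assumptions; real implementations (including CorePilot) are far more elaborate.

```python
HEAVY = 0.6  # hypothetical load above which a task should get a big core


def run_slots(model: str, loads: list[float], n: int) -> list[str]:
    """Core type running each task at one instant on an n+n big.LITTLE SoC.
    Tasks that find no usable core must wait for a time slice ("queued")."""
    if model == "cluster_migration":
        # Only one cluster is on; any heavy task forces everything onto big.
        cluster = "big" if any(load > HEAVY for load in loads) else "LITTLE"
        return [cluster if i < n else "queued" for i in range(len(loads))]
    if model == "cpu_migration":
        # n logical CPUs, each a big/LITTLE pair that switches per task.
        return [("big" if load > HEAVY else "LITTLE") if i < n else "queued"
                for i, load in enumerate(loads)]
    if model == "hmp":
        # All 2n cores are schedulable; prefer the matching cluster, else the other.
        free = {"big": n, "LITTLE": n}
        out = []
        for load in loads:
            preferred = ("big", "LITTLE") if load > HEAVY else ("LITTLE", "big")
            for cluster in preferred:
                if free[cluster] > 0:
                    free[cluster] -= 1
                    out.append(cluster)
                    break
            else:  # no free core in either cluster
                out.append("queued")
        return out
    raise ValueError(f"unknown model: {model}")


loads = [0.9, 0.1, 0.2, 0.8]
for model in ("cluster_migration", "cpu_migration", "hmp"):
    print(model, run_slots(model, loads, n=2))
# cluster_migration ['big', 'big', 'queued', 'queued']
# cpu_migration ['big', 'LITTLE', 'queued', 'queued']
# hmp ['big', 'LITTLE', 'LITTLE', 'big']
```

With the same four tasks, cluster migration runs a light task on a power-hungry big core and leaves two tasks waiting, CPU migration can choose per core pair but still exposes only two CPUs, and HMP keeps all four cores busy.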

(*) The #1 market position in Digital TVs is the result of the acquisition of the MStar Semiconductor in 2012.

Feb 9, 2015 by Bidness Etc:

Qualcomm Inc. (QCOM) To Lose Market Share: UBS

UBS expects Mediatek to gain at Qualcomm’s expense this year

…

Eric Chen, Sunny Lin, and Samson Hung, analysts at UBS, suggest that Mediatek will control 46% of the 4G smartphone market in China in 2015. Last year the company had a 30% market share. Low-end customers, as well as high-end clients that are switching to Mediatek MT6795 from Snapdragon 800, will help Mediatek advance.

“We believe clients for MT6795 include Sony, LGE, HTC, Xiaomi, Oppo, Meizu, TCL, and Lenovo, among others. With its design win of over 10 models, we anticipate Mediatek will ship 2m units per month in Q215 and 4m units per month in H215. That indicates revenue will reach 14% in Q215 and 21% in H215, up from 3% in Q115,” reads the report.

UBS also said that Mediatek will witness a 20% increase in its revenue and report 48% in gross margin in 2015. Earnings are forecasted to grow 21%.

“We forecast MT6752/MT6732 shipments (mainly at MT6752 of US$18-20) to reach 3m units per month in Q215, up from 1.5m units per month in Q115,” read the report*.

…

My insert here: the Sony Xperia E4g [based on MT6732] – Well-priced LTE-Smartphone Hands On at MWC 2015 by Mobilegeeks.de:

* From another excerpt of the UBS report: “In the mid-end, UBS believes China’s largest smartphone manufacturers from Lenovo Group (992.HK) to Huawei will switch from Qualcomm’s MSM 8939 [Snapdragon 615] to Mediatek’s MT 6752 because of Qualcomm’s ‘inferior design.’” Further below, in section I/2, there will be an image enhancement demonstration with an MT6752-based Lenovo A936 smartphone, actually a typical “lower end super-mid” device sold in China since December for just ¥998 ($160), a price 33% lower than the mid-point of the super-mid market indicated a year ago by MediaTek (see the very first image in this post).

July 15, 2014 by Mobilegeeks.de:

MediaTek 64-bit LTE Octa-Core Smartphone Reference Design “In Shenzhen MediaTek showed off their new Smartphone Reference Design for their LTE Octa-Core line up. The MT6595 and MT6795 are both high end processors capable of taking on Qualcomm in terms of benchmarks at a budget price.”

July 15, 2014 by Mobilegeeks.de:

MediaTek: How They Came To Take on Qualcomm?

Brief content: When I found out about the MediaTek press conference, I immediately picked up a ticket and planned to spend a few days in one of my favorite cities on earth, Shenzhen. MediaTek is one of the fastest growing companies in mobile. But where exactly did this Taiwanese company come from?

Back in 1997 MediaTek was spun off from United Microelectronics Corporation, which had been founded in 1980 as Taiwan’s first semiconductor company, and started out making chipsets for home entertainment centers and optical drives. In 2004 they entered the mobile phone market with a different approach: instead of just selling SoCs they sold complete packages, a chip with an operating system already baked in. They were selling reference designs.

This cut entire heavily staffed teams out of the process and, more importantly, lowered the barriers to entry: small companies could sell phones under their own brands. This is why most people have never heard of MediaTek; they merely enabled the success of others, particularly in emerging markets like China.

With feature phones peaking in 2012 and smartphones finally taking over the top spot in 2013, they had to move on. It took them longer than it should have; regardless, they are here now. MediaTek is applying the same strategy it used to dominate the feature phone market to low- and mid-range smartphones. They are already in bed with all the significant emerging market players like ZTE, Huawei & Alcatel. And getting manufacturers on your side works for gaining market share when carriers don’t have much control: unsubsidized handsets make people purchase more affordable devices.

Despite its enormous success in the category where the next billion handsets are going to be sold, MediaTek has yet to make a name for itself in the West. Fair enough, being known for cheap handsets creates challenges for entering the high-end market. But that hasn’t stopped them from coming out with the world’s first 4G LTE octa-core processor. They even set up shop in Qualcomm’s backyard by opening an office in San Diego, which is a pretty big statement, especially when you take into consideration that MediaTek is bigger than Broadcom & Nvidia.

But as they push into the US, Qualcomm seeks to gain a foothold in China. So let’s take a closer look at that, because this race has less to do with SoCs than with LTE. MediaTek’s octa-core processor with LTE put Qualcomm on alert, because Qualcomm had always been the leader when it came to LTE. I found some stats on Android Authority from Strategy Analytics: in Q3 2013 Qualcomm took 66% of cellular baseband revenue, MediaTek claimed second place at 12%, and Intel was third with 7%. Qualcomm even has a relationship with China Mobile to get its LTE devices into the hands of the local market.

Even so, it is a numbers game, and if MediaTek’s SoC performance is at the same level as Qualcomm’s mid-range SoC offering but at a lower price, it won’t take MediaTek long to catch up. But even on a more basic level, let me tell you about a meeting I had in Shenzhen with Gionee, where I asked about developing on Qualcomm vs MediaTek. They said MediaTek will get back to you within the hour, Qualcomm will get back to you the next day, and when I mentioned Intel they just laughed.

Consumers might find it frustrating that MediaTek takes a little longer to come out with the latest version of Android, but the reason is that they are doing all the work for their partners. When you’re competing with a company that understands customer service better than anyone else right now, it’s going to be hard not to see them as a real threat.

MediaTek Introduces Industry Leading Tablet SoC, MT8135

TAIWAN, Hsinchu – July 29, 2013 – MediaTek Inc., (2454: TT), a leading fabless semiconductor company for wireless communications and digital multimedia solutions, today announced its breakthrough MT8135 system-on-chip (SoC) for high-end tablets. The quad-core solution incorporates two high-performance ARM Cortex™-A15 and two ultra-efficient ARM Cortex™-A7 processors, and the latest GPU from Imagination Technologies, the PowerVR™ Series6. Complemented by a highly optimized ARM® big.LITTLE™ processing subsystem that allows for heterogeneous multi-processing, the resulting solution is primed to deliver premium user experiences. This includes the ability to seamlessly engage in a range of processor-intensive applications, including heavy web-downloading, hardcore gaming, high-quality video viewing and rigorous multitasking – all while maintaining the utmost power efficiency.

In line with its reputation for creating innovative, market-leading platform solutions, MediaTek has deployed an advanced scheduler algorithm, combined with adaptive thermal and interactive power management to maximize the performance and energy efficiency benefits of the ARM big.LITTLE™ architecture. This technology enables application software to access all of the processors in the big.LITTLE cluster simultaneously for a true heterogeneous experience. As the first company to enable heterogeneous multi-processing on a mobile SoC, MediaTek has uniquely positioned the MT8135 to support the next generation of tablet and mobile device designs.

“ARM big.LITTLE™ technology reduces processor energy consumption by up to 70 percent on common workloads, which is critical in the drive towards all-day battery life for mobile platforms,” said Noel Hurley, vice president, Strategy and Marketing, Processor Division, ARM. “We are pleased to see MediaTek’s MT8135 seizing on the opportunity offered by the big.LITTLE architecture to enable new services on a heterogeneous processing platform.”

“The move towards multi-tasking devices requires increased performance while creating greater power efficiency that can only be achieved through an optimized multi-core system approach. This means that multi-core processing capability is fast becoming a vital feature of mobile SoC solutions. The MT8135 is the first implementation of ARM’s big.LITTLE architecture to offer simultaneous heterogeneous multi-processing. As such, MediaTek is taking the lead to improve battery life in next-generation tablet and mobile device designs by providing more flexibility to match tasks with the right-size core for better computational, graphical and multimedia performance,” said Mike Demler, Senior Analyst with The Linley Group.

The MT8135 features a MediaTek-developed four-in-one connectivity combination that includes Wi-Fi, Bluetooth 4.0, GPS and FM, designed to bring highly integrated wireless technologies and expanded functionality to market-leading multimedia tablets. The MT8135 also supports Wi-Fi certified Miracast™ which makes multimedia content sharing between devices remarkably easier.

In addition, the tablet SoC boasts unprecedented graphics performance enabled by its PowerVR™ Series6 GPU from Imagination Technologies. “We are proud to have partnered with MediaTek on their latest generation of tablet SoCs” says Tony King-Smith, EVP of marketing, Imagination. “PowerVR™ Series6 GPUs build on Imagination’s success in mobile and embedded markets to deliver the industry’s highest performance and efficient solutions for graphics-and-compute GPUs. MediaTek is a key lead partner for Imagination and its PowerVR™ Series6 GPU cores, so we expect the MT8135 to set an important benchmark for high-end gaming, smooth UIs and advanced browser-based graphics-rich applications in smartphones, tablets and other mobile devices. Thanks to our PowerVR™ Series6 GPU, we believe the MT8135 will deliver five-times or more the GPU-compute-performance of the previous generation of tablet processors.”

“At MediaTek, our goal is to enable each user to take maximum advantage of his or her mobile device. The implementation and availability of the MT8135 brings an enjoyable multitasking experience to life without requiring users to sacrifice on quality or energy. As the leader in multi-core processing solutions, we are constantly optimizing these capabilities to bring them into the mainstream, so as to make them accessible to every user around the world,” said Joe Chen, GM of the Home Entertainment Business Unit at MediaTek.

The MT8135 is the latest SoC in MediaTek’s highly successful line of quad-core processors, which since its launch last December* has given rise to more than 350 projects and over 150 mobile device models across the world. This latest solution, along with its comprehensive accompanying Reference Design, will like their predecessors fast become industry standards, particularly in the high-end tablet space.

* MediaTek Strengthens Global Position with World’s First Quad-Core Cortex-A7 System on a Chip – MT6589 [press release, Dec 12, 2012]

See also: Imagination Welcomes MediaTek’s Innovation in True Heterogeneous Multi-Processing With New SoC Featuring PowerVR Series6 GPU [press release, Aug 28, 2013]

MediaTek Announces MT6595, World’s First 4G LTE Octa-Core Smartphone SOC with ARM Cortex-A17 and Ultra HD H.265 Codec Support

MediaTek CorePilot™ Heterogeneous Multi-Processing Technology enables outstanding performance with leading energy efficiency

TAIWAN, Hsinchu – 11 February, 2014 – MediaTek today announces the MT6595, a premium mobile solution with the world’s first 4G LTE octa-core smartphone SOC powered by the latest Cortex-A17™ CPUs from ARM®.

The MT6595 employs ARM’s big.LITTLE™ architecture with MediaTek’s CorePilot™ technology to deliver a Heterogeneous Multi-Processing (HMP) platform that unlocks the full power of all eight cores. An advanced scheduler algorithm with adaptive thermal and interactive power management delivers superior multi-tasking performance and excellent sustained performance-per-watt for a premium mobile experience.

Excellent Performance-Per-Watt

- Four ARM Cortex-A17™, each with significant performance improvement over previous-generation processors, plus four Cortex-A7™ CPUs

- ARM big.LITTLE™ architecture with full-system coherency performs sophisticated tasks efficiently

- Integrated Imagination Technologies PowerVR™ Series6 GPU for high-performance graphics

- Integrated 4G LTE Multi-Mode Modem

- Rel. 9, Category 4 FDD and TDD LTE with data rates up to 150Mbits/s downlink and 50Mbits/s uplink

- DC-HSPA+ (42Mbits/s), TD-SCDMA and EDGE for legacy 2G/3G networks

- 30+ 3GPP RF bands support to meet operator needs worldwide

World-Class Multimedia Subsystems

- World’s first mobile SOC with integrated, low-power hardware support for the new H.265 Ultra HD (4K2K) video record & playback, in addition to Ultra HD video playback support for H.264 & VP9

- Supports a 24-bit/192kHz Hi-Fi quality audio codec with a high-performance digital-to-analogue converter (DAC) delivering >110dB SNR to headphones

- 20MP camera capability and a high-definition WQXGA (2560 x 1600) display controller

- MediaTek ClearMotion™ technology eliminates motion jitter and ensures smooth video playback at 60fps on mobile devices

- MediaTek MiraVision™ technology for DTV-grade picture quality

First MediaTek Mobile Platform Supporting 802.11ac

- Comprehensive complementary connectivity solution that supports 802.11ac

- Multi-GNSS positioning systems including GPS, GLONASS, Beidou, Galileo and QZSS

- Bluetooth LE and ANT+ for ultra-low power connectivity with fitness tracking devices

World’s First Multimode Wireless Charging Receiver IC

- Multi-standard inductive and resonant wireless charging functionality available

- Supported by MediaTek’s companion multimode wireless power receiver IC

“MediaTek is focused on delivering a full-range of 4G LTE platforms and the MT6595 will enable our customers to deliver premium products with advanced features to a growing market,” said Jeffrey Ju, General Manager of the MediaTek Smartphone Business Unit.

“Congratulations to MediaTek on being in a leading position to implement the new ARM Cortex-A17 processor in mobile devices,” said Noel Hurley, Vice President and Deputy General Manager, ARM Product Division. “MediaTek has a keen understanding of the smartphone market and continues to identify innovative ways to bring a premium mobile experience to the masses.”

The MT6595 platform will be commercially available by the first half of 2014, with devices expected in the second half of the year.

Sept 21, 2014:

MediaTek CorePilot™ Technology

MediaTek Launches 64-bit True Octa-core™ LTE Smartphone SoC with World’s First 2K Display Support

TAIWAN, Hsinchu – July 15, 2014 – MediaTek today announced MT6795, the 64-bit True Octa-core™ LTE smartphone System on Chip (SoC) with the world’s first 2K display support. This is MediaTek’s flagship smartphone SoC designed to empower high-end device makers to leap into the Android™ 64-bit era.

The MT6795 is currently set to be the first 64-bit, LTE, True Octa-core SoC targeting the premium segment, with speed of up to 2.2GHz, to hit the market. The SoC features MediaTek’s CorePilot™ technology providing world-class multi-processor performance and thermal control, as well as dual-channel LPDDR3 clocked at 933MHz for top-end memory bandwidth in a smartphone.
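As a rough check on what “dual-channel LPDDR3 clocked at 933MHz” means for memory bandwidth, here is the theoretical peak arithmetic. It assumes double data rate (two transfers per clock) and a 32-bit-wide channel, which is typical for LPDDR3 but is not stated in the release; sustained bandwidth in real use is lower than this peak.

```python
def peak_bandwidth_gbs(clock_mhz: float, channels: int,
                       bus_width_bits: int = 32, transfers_per_clock: int = 2) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10**9 bytes per second).

    Assumes 32-bit channels and DDR signalling (2 transfers per clock) by default.
    """
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8 * channels  # all channels work in parallel
    return transfers_per_second * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # MT6795: dual-channel LPDDR3 at 933MHz (32-bit channels assumed)
    print(f"{peak_bandwidth_gbs(933, channels=2):.3f} GB/s")  # 14.928 GB/s
    print(f"{peak_bandwidth_gbs(933, channels=1):.3f} GB/s")  # 7.464 GB/s
```

Under these assumptions the second channel doubles the peak from about 7.5 GB/s to about 14.9 GB/s.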

The high-performance SoC also satisfies the multimedia requirements of even the most demanding users, featuring multimedia subsystems that support many technologies never before possible or seen in a smartphone, including support for 120Hz displays and the capability to create and play back 480 frames per second (fps) 1080p Full HD Super-Slow Motion videos.
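The “Super-Slow Motion” figure translates into a slowdown factor once a playback rate is fixed. Assuming standard 30fps playback (the release does not state the playback rate), 480fps capture gives the 1/16-speed playback mentioned later in this post; the helper names below are illustrative only.

```python
def slowdown_factor(capture_fps: float, playback_fps: float = 30.0) -> float:
    """How many times slower than real time the footage plays back."""
    return capture_fps / playback_fps


def playback_seconds(real_seconds: float, capture_fps: float,
                     playback_fps: float = 30.0) -> float:
    """Screen time taken by real_seconds of footage: frames captured / playback rate."""
    frames = real_seconds * capture_fps
    return frames / playback_fps


if __name__ == "__main__":
    print(slowdown_factor(480))        # 16.0, i.e. 1/16 speed
    print(playback_seconds(2.0, 480))  # 32.0 seconds for a 2-second event
```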

With the launch of MT6795, MediaTek is accelerating the global transition to LTE and creating opportunities for device makers to gain first-mover advantage with top-of-the-line devices in the 64-bit Android device market. Coupled with 4G LTE support, MT6795 completes MediaTek’s 64-bit LTE SoC product portfolio: MT6795 for power users, MT6752 for mainstream users and MT6732 for entry level users. This extensive portfolio allows everyone to embrace the improved speed from 4G LTE and parallel computing capability from CorePilot and 64-bit processors.

Key features of MT6795:

- 64-bit True Octa-core LTE SoC* with clock speed up to 2.2GHz

- MediaTek CorePilot unlocks the full power of all eight cores

- Dual-channel LPDDR3 memory clocked at 933MHz

- 2K on device display (2560×1600)

- 120Hz mobile display with Response Time Enhancement Technology and MediaTek ClearMotion™

- 480fps 1080p Full HD Super-Slow Motion video feature

- Integrated, low-power hardware support for H.265 Ultra HD (4K2K) video record & playback, Ultra HD video playback support for H.264 & VP9, as well as for graphics-intensive games and apps

- Support for Rel. 9, Category 4 FDD and TDD LTE (150Mbps/50Mbps), as well as modems for 2G/3G networks

- Support for Wi-Fi 802.11ac/Bluetooth®/FM/GPS/Glonass/Beidou/ANT+

- Multi-mode wireless charging supported by MediaTek’s companion multi-mode wireless power receiver IC

“MediaTek has once again demonstrated leading engineering capabilities by delivering breakthrough technology and time-to-market advantage that enable limitless opportunities for our partners and end users, while setting the bar even higher for our competition,” said Jeffrey Ju, General Manager of the MediaTek Smartphone Business Unit. “With a complete and inclusive 64-bit LTE SoC product portfolio, we are firmly on track to lead the industry in delivering premium mobile user experiences for years to come.”

MT6795-powered devices will be commercially available by the end of 2014.

* Rather than a heterogeneous multi-processing (big.LITTLE) architecture, the MT6795 features eight identical Cortex-A53 cores. Earlier unofficial reports had suggested a 4xA53 + 4xA57 big.LITTLE configuration.

See also: The Cortex-A53 as the Cortex-A7 replacement core is succeeding as a sweet-spot IP for various 64-bit high-volume market SoCs to be delivered from H2 CY14 on [this same blog, Dec 23, 2013]

MediaTek Launches MT6735 – Mainstream WorldMode™ Smartphone Platform

By adding CDMA2000, MediaTek accomplishes WorldMode modem capability in a single platform and meets wireless operator requirements globally; putting advanced yet affordable smartphones into the hands of consumers

TAIWAN, Hsinchu – 15 October, 2014 – MediaTek today announced a new 64-bit mobile system on chip (SoC), MT6735, incorporating the modem and RF needs of wireless operators globally. By offering a unified mobile platform, MediaTek is enabling its customers to develop on the MT6735 and sell mobile devices globally, thereby creating significant R&D cost savings and economies of scale in manufacturing. The MT6735 builds upon MediaTek’s existing line-up of mainstream LTE platforms by adding the critical WorldMode modem capability.

The MT6735 incorporates four 64-bit ARM® Cortex®-A53 processors, delivering significantly higher performance than Cortex-A7 for a premium mobile computing experience, driving greater choice of smart devices at affordable prices for consumers. As projected, MediaTek sees a continued consolidation of smartphones into a very large mid-range, termed by MediaTek as the “Super-mid market”.

“The MT6735 is a breakthrough product from MediaTek,” said Jeffrey Ju, SVP and General Manager of Wireless Communication at MediaTek. “With CDMA2000, we offer global reach, driving high performance technology into the hands of users everywhere. We also strongly believe that as LTE becomes mainstream in all markets, the processing power must be consistently high to ensure the best possible user experience. That’s why 64-bit CPUs and CorePilot™ technology are standard features across all of our LTE solutions.”

“MediaTek wants to make the world a more inclusive place, where the best, fully-connected user experiences do not mean expensive,” said Johan Lodenius, Chief Marketing Officer for MediaTek. “We are committed to creating powerful devices that accelerate the transformation of the global market and strive to put high-quality technology in the hands of everyone.”

The MT6735 platform includes:

Next-Generation 64-bit Mobile Computing System

- Quad-core, up to 1.5GHz ARM Cortex-A53 64-bit processors with MediaTek’s leading CorePilot multi-processor control system, providing a performance boost for mainstream mobile devices

- Mali-T720 GPU with support for the OpenGL ES 3.0 and OpenCL 1.2 APIs and premium graphics for gaming and UI effects

Advanced Multimedia Features

- Supports low-power 1080p 30fps video playback for the emerging H.265 codec standard and legacy H.264, plus 1080p 30fps H.264 video recording

- Integrated 13MP camera image signal processor with support for unique features like PIP (Picture-in-Picture), VIV (Video in Video) and Video Face Beautifier

- Display support up to HD 1280×720 resolution with MediaTek MiraVision™ technology for DTV-grade picture quality

Integrated 4G LTE WorldMode Modem & RF

- Rel. 9, Category 4 FDD and TDD LTE (150 Mb/s downlink, 50 Mb/s uplink)

- 3GPP Rel. 8, DC-HSPA+ (42 Mb/s downlink, 11 Mb/s uplink), TD-SCDMA and EDGE are supported for legacy 2G/3G networks

- CDMA2000 1x/EVDO Rev. A

- Comprehensive RF support (B1 to B41) and the ability to mix multiple low-, mid-, and high bands for a global roaming solution

Integrated Connectivity Solutions

- Supports dual-band Wi-Fi to effortlessly connect to a wide array of wireless routers and enable new applications like video sharing over Miracast

- Bluetooth 4.0, supporting low-power connection to fitness gadgets, wearables and other accessories, such as Bluetooth headsets

MT6735 is sampling to early customers in Q4, 2014, with the first commercial devices to be available in Q2, 2015.

March 4, 2015:

LTE WorldMode with MediaTek MT6735 and MT6753, now ready for the USA market and worldwide!

ARMdevices.net (Charbax): MediaTek now supports worldwide LTE with the 64-bit MT6735 quad-core ARM Cortex-A53 and the 64-bit MT6753 octa-core ARM Cortex-A53. This is LTE Cat 4. MediaTek claims these parts are 10-15% faster in single-SIM mode and 20-30% faster with dual-SIM support, perhaps compared to a Qualcomm LTE WorldMode solution. They also claim lower power consumption than the competition in standby, 3G and LTE modes. Many LTE telcos around the world have already certified these new MediaTek LTE parts, including Vodafone, Orange, T-Mobile, Three, Deutsche Telekom, China Unicom, China Mobile, Telefonica, Verizon and AT&T. This LTE WorldMode processor is a big deal for MediaTek as it opens up the USA market, where MediaTek previously had little support from American carriers because its earlier 3G and LTE solutions mainly worked outside the USA.

MediaTek Releases the MT6753: A WorldMode 64-bit Octa-core Smartphone SoC

Complete with integrated CDMA2000 technology that looks to meet the needs of the high-end smartphone market worldwide

SPAIN, Barcelona – March 1, 2015 – MediaTek today announced the release of the MT6753, a 64-bit Octa-core mobile system-on-chip (SoC) with support for WorldMode modem capability. Coupled with the previously announced MT6735 quad-core SoC, the new MT6753 is designed with high performance features for an ever more demanding mid-range market.

Reinforcing MediaTek’s commitment to driving the latest technology to customers across the world, the MT6753 SoC will be offered at a price that creates strong value for customers, especially as it comes with integrated CDMA2000 to ensure compatibility in every market. The eight ARM Cortex-A53 64-bit processors and Mali-T720 GPU help to ensure customers can meet graphic-heavy multimedia requirements while also maintaining battery efficiency for high-end devices.

“The launch of the MT6753 again demonstrates MediaTek’s desire to offer more power and choice to our 4G LTE product line, while also giving customers worldwide greater diversity and flexibility in their product layouts,” said Jeffrey Ju, Senior Vice President at MediaTek.

The MT6753, which is compatible with the previously announced MT6735 for entry smartphones, also enables handset makers to reduce time to market, simplify product development and manage product differentiation in a more cost effective way. MT6753 is sampling to customers now, with the first commercial devices to be available in Q2, 2015.

Key Features of MT6753 include:

Next-Generation 64-bit Mobile Computing System

- Octa-core, up to 1.5GHz ARM Cortex-A53 64-bit processors with MediaTek’s leading CorePilot multi-processor technology, providing a perfect balance of performance and power for mainstream mobile devices

- Mali-T720 GPU with support for the OpenGL ES 3.0 and OpenCL 1.2 APIs and premium graphics for gaming and UI effects

Advanced Multimedia Features

- Supports low-power 1080p 30fps video playback for the emerging H.265 codec standard and legacy H.264, plus 1080p 30fps H.264 video recording

- Integrated 16MP camera image signal processor with support for unique features like PIP (Picture-in-Picture), VIV (Video in Video) and Video Face Beautifier

- Display support up to Full HD 1920×1080 resolution at 60fps with MediaTek MiraVision™ technology for DTV-grade picture quality

Integrated 4G LTE WorldMode Modem & RF

- Rel. 9, Category 4 FDD and TDD LTE (150 Mb/s downlink, 50 Mb/s uplink)

- 3GPP Rel. 8, DC-HSPA+ (42 Mb/s downlink, 11 Mb/s uplink), TD-SCDMA and EDGE are supported for legacy 2G/3G networks

- CDMA2000 1x/EVDO Rev. A

- Comprehensive RF support (B1 to B41) and the ability to mix multiple low-, mid-, and high bands for a global roaming solution

Integrated Connectivity Solutions

- Supports dual-band Wi-Fi to effortlessly connect to a wide array of wireless routers and enable new applications like video sharing over Miracast

- Bluetooth 4.0, supporting low-power connection to fitness gadgets, wearables and other accessories, such as Bluetooth headsets

January 2015: MediaTek’s leaked smartphone roadmap (note the MT67xx scheduled for 4Q 2015 and using 20nm technology, as well as that the new smartphone SoCs, except the very-entry 3G one with Cortex-A7, are based on Cortex-A53 cores while still using 28nm)

See also: The Cortex-A53 as the Cortex-A7 replacement core is succeeding as a sweet-spot IP for various 64-bit high-volume market SoCs to be delivered from H2 CY14 on [this same blog, Dec 23, 2013]

The availability dates shown above are for the first commercial devices!

MediaTek is rebranding the high-end smartphone SoC family as Helio (starting with the MT6795, now designated Helio X10), after the Greek word for sun, “helios”:

March 12, 2015:

MediaTek Helio explained by CMO Johan Lodenius at MWC 2015

March 5, 2015:

MediaTek SVP Jeffrey Ju introducing the new Helio branding for premium (P) and extreme (X) performance segments of the smartphone SoCs at MWC 2015

I/2. He then continues with the presentation of MediaTek’s exclusive display quality enhancement technologies (click on the links to watch the related brief videos):

– MiraVision picture quality enhancement

– SmartScreen as “the best viewing experience across extreme lighting conditions”

– 120Hz LCD display technology for a whole new experience (vs. the current 60Hz used by everyone)

– Super-SlowMotion, meaning 1/16 speed 480fps video playback (world’s first)

– Instant Focus, meaning phase-detection autofocus (PDAF) technology on mobile devices cameras

– preliminary information on the new high-end Helio SoC with the new Cortex-A72, relying on 20nm technology, MiraVision Plus and MediaTek’s 3rd-generation modem, in the 2nd half of 2015 (so it is quite likely the MT67xx mentioned in the roadmap above)

Note that third-party companies are providing additional imaging enhancements to device manufacturers, like the ones demonstrated by ArcSoft for the Lenovo Golden Warrior Note 8 TD-LTE (A936) at MWC 2015. Designed with Chinese (TD) and global market capabilities in mind, and available in China from December 2014 for ¥998 ($160), it is based on the MT6752 octa-core SoC, which integrates the ARM Mali™-T760 GPU. ArcSoft is exploiting the GPU Compute capabilities for the additional imaging features shown in the video below:

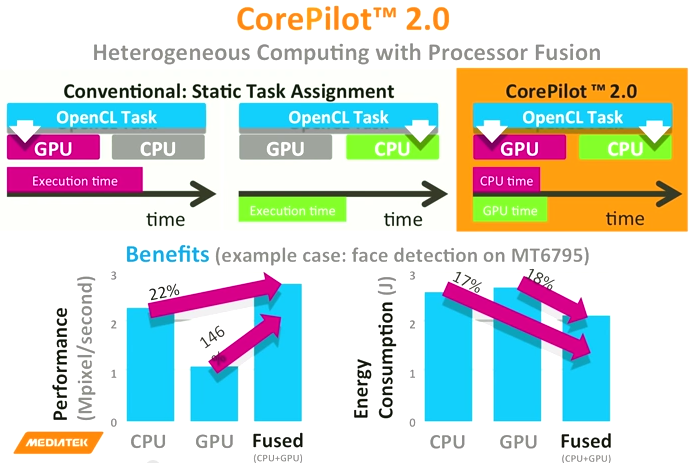

I/3. CorePilot™ 2.0 especially targeted for the extreme performance tablet and smartphone markets

Revolutionary new 64-bit ARM processor ramps up tablet performance and battery life for heavy content Android users

SPAIN, Barcelona – March 1, 2015 – MediaTek today announced the first tablet system-on-chip (SoC) in a family that features an ARM® Cortex®-A72 processor, the industry’s highest-performing mobile CPU. The Quad-core MT8173 is designed to maximize the benefits of the new processor and greatly increase tablet performance, while extending battery life to ensure a premium tablet experience. The MT8173 meets the growing demand for 4K Ultra HD content and graphic-heavy gaming by everyday mobile computing device users.

The MT8173 is designed with a 64-bit Multi-core big.LITTLE architecture that combines two Cortex-A72 CPUs and two Cortex-A53 CPUs, extending performance and power efficiency further. MT8173 boasts a six-fold increase in performance compared to the MT8125 released in 2013. MT8173 offers up to 2.4GHz performance, supporting OpenCL with the deployment of MediaTek CorePilot® 2.0, and enables heterogeneous computing between the CPU and GPU. The SoC also ensures the ultimate in display clarity and motion fluency on 120Hz display, promising smooth scrolling with crystal clarity as compared to a normal 60Hz display.

“MT8173 highlights the significant shift in how mobile devices, such as Android tablets, are used and, with the combination of ARM’s latest technology, we are delivering a platform that answers the growing demand for improved mobile multimedia performance and power usage. By presenting CPU specs that outperform any other device currently on the market, we are bringing PC-like performance to the tablet form factor, reinforcing MediaTek’s continued commitment to deliver premium technology to everyone across the globe,” said Joe Chen, Senior Vice President of MediaTek.

“MediaTek has been a strong adopter of ARM big.LITTLE processing architecture, extending it with CorePilot, to deliver extreme performance, while maintaining power efficiency,” said Noel Hurley, General Manager, CPU group, ARM. “Decisively and quickly incorporating the second-generation of our 64-bit technology into a market-ready product, underscores the partnership between ARM and MediaTek.”

The MT8173 platform features:

True Heterogeneous 64-bit Multi-Core big.LITTLE architecture up to 2.4GHz

- Features ARM Cortex®-A72 and ARM Cortex®-A53 64-bit CPU

- Big cores and LITTLE cores can run at full speed at the same time for peak performance requirement

- Performance of up to 2.4GHz

Imagination PowerVR GX6250 GPU

- Supports OpenGL ES 3.1, OpenCL for future applications

- Delivers 350Mtri/s and 2.8 Gpix/s performance

- Provides uncompromised user experience for WQXGA display at 60fps

Comprehensive Multimedia Features

- 120Hz mobile display

- Ultra HD 30fps H.264/HEVC(10-bit)/VP9 hardware video playback

- WQXGA display support with TV-grade picture quality enhancement

- HDMI and Miracast support for multi-screen applications

- 20MP camera ISP with video face beautify and LOMO effects

Security hardware accelerator

- Supports Widevine Level 1, Miracast with HDCP

- HDCP 2.2 for premium video to 4k TV display
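The GPU figures quoted above (350Mtri/s, 2.8 Gpix/s, WQXGA at 60fps) can be put in perspective with simple budget arithmetic. This sketch treats the quoted numbers as theoretical peaks; real fill rate is reduced by blending, memory bandwidth and other factors, so the results are upper bounds, and the function names are illustrative only.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that must be written each second just to draw every frame once."""
    return width * height * fps


def overdraw_budget(fill_rate_pix_s: float, width: int, height: int, fps: int) -> float:
    """How many times each screen pixel could be written per frame at peak fill rate."""
    return fill_rate_pix_s / pixels_per_second(width, height, fps)


def triangles_per_frame(tri_rate_per_s: float, fps: int) -> float:
    """Peak triangle budget per frame at a given frame rate."""
    return tri_rate_per_s / fps


if __name__ == "__main__":
    # Peak GX6250 figures from the release; WQXGA = 2560 x 1600 at 60fps
    print(pixels_per_second(2560, 1600, 60))                  # 245760000
    print(round(overdraw_budget(2.8e9, 2560, 1600, 60), 1))   # 11.4
    print(round(triangles_per_frame(350e6, 60)))              # 5833333
```

In other words, at peak rates the GPU could in principle touch every WQXGA pixel roughly 11 times per frame at 60fps, which is the headroom that overdraw, UI compositing and effects consume.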

MT8173 is available for customers now, and will be featured in the first commercial tablets in the second half of this year. MT8173 is being demonstrated at the 2015 Mobile World Congress in Barcelona, Spain, at MediaTek’s booth – Hall 6, Stand 6E21.

March 5, 2015:

MediaTek CTO Kevin Jou on CorePilot 2.0 at MWC 2015

He then continues with the presentation of the new:

– MT8173 with the world’s first Cortex-A72 in the “big” role

– worldmode modem technology with LTE category 6

– CrossMount technology as the “not yet another DLNA solution, as it does whatever DLNA can plus a lot more”

II. Brand New Strategic Initiatives

Feb 27, 2015:

II/1. CrossMount

Unite your devices: Open up new possibilities

Technology makes it easy to share the things we love, but only when we use it in a certain way.

Making a video call just needs a smartphone with a camera, for instance, but what if you want to talk using the big screen on your HDTV? And what happens when you want to watch video from your set-top IPTV box on your tablet when you’re lying in bed — and use your smartwatch as a remote control?

With technology playing an increasingly important part in our lives, these are the kind of problems we can expect to face every day. And they’re the kind of problems MediaTek solves with CrossMount.

MediaTek CrossMount is a new standard for sharing hardware and software resources between a whole host of consumer electronics.

Based on the UPnP protocol, CrossMount connects compatible devices wirelessly, using either a home Wi-Fi network or a Wi-Fi Direct connection, to allow one to seamlessly access the features of another.

So you can start watching streaming video on the living room TV, for example, then switch it to your tablet when you move to another room, or use your TV’s speakers for a hands-free phone call with your smartphone. The possibilities are endless.

The CrossMount Alliance for MediaTek partners, customers and developers makes developing CrossMount applications as easy as possible, and many brands and developers are already on board.

CrossMount will be available in late 2015 for MediaTek-based Android devices.

MediaTek Introduces a New Convergence Standard for Cross-device Sharing with CrossMount

Fast and easy sharing of content, hardware and software resources enable multiple devices to combine and act together as a single, more powerful device

SPAIN, Barcelona – March 1, 2015 – MediaTek today announced CrossMount – a new technology that simplifies hardware and software resource sharing between different consumer devices. Designed to be a new standard in cross-device convergence, the CrossMount framework ensures any compatible device can seamlessly use and share hardware or software resources authorized by the user. CrossMount is an open and simple-to-implement technology for the wide ecosystem of MediaTek customers and partners that opens the possibilities for multiple devices effectively working as one or sharing applications and hardware resources.

CrossMount defines its service mounting standard based on the UPnP protocol, and can be implemented primarily in Android and Linux as well as other platforms. CrossMount works through simple discovery, pairing, authorization and use of both hardware and software resources across smartphones, tablets and TVs. Communication takes place directly between devices, either via home gateways (Wireless LAN) or peer to peer (Wi-Fi Direct). Discovery and sharing are granted through an easy software implementation that allows all Wi-Fi capable devices to share resources without the need for cloud servers.
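CrossMount’s own service types and APIs are not given here, but the release says its discovery builds on UPnP. In standard UPnP, discovery uses SSDP: a device multicasts an HTTP-over-UDP `M-SEARCH` request to 239.255.255.250:1900, and matching devices on the local network reply with headers such as `LOCATION` (the URL of their description document) and `ST` (the search target they matched). The sketch below shows only that generic UPnP step; the helper names are hypothetical and nothing in it is CrossMount-specific.

```python
import socket

SSDP_ADDR = ("239.255.255.250", 1900)  # standard UPnP/SSDP multicast group and port


def build_msearch(search_target: str = "ssdp:all", mx: int = 2) -> bytes:
    """Build a generic SSDP M-SEARCH request (not a CrossMount-specific message)."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        f"HOST: {SSDP_ADDR[0]}:{SSDP_ADDR[1]}",
        'MAN: "ssdp:discover"',
        f"MX: {mx}",                 # max seconds a device may wait before replying
        f"ST: {search_target}",      # what kind of device/service we are looking for
        "",
        "",                          # blank line terminates the HTTP-style header block
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_ssdp_response(data: bytes) -> dict:
    """Turn an SSDP reply into a dict with lower-cased header names."""
    lines = data.decode("utf-8", errors="replace").split("\r\n")
    headers = {"status": lines[0]}
    for line in lines[1:]:
        if ":" in line:
            name, value = line.split(":", 1)  # split once: URLs contain ':' too
            headers[name.strip().lower()] = value.strip()
    return headers


def discover(timeout: float = 3.0, search_target: str = "ssdp:all") -> list:
    """Multicast one M-SEARCH and collect replies until the timeout expires."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 2)
    sock.settimeout(timeout)
    found = []
    try:
        sock.sendto(build_msearch(search_target), SSDP_ADDR)
        while True:
            data, _addr = sock.recvfrom(65507)
            found.append(parse_ssdp_response(data))
    except socket.timeout:
        pass  # no more replies within the timeout window
    finally:
        sock.close()
    return found


if __name__ == "__main__":
    for device in discover():
        print(device.get("st"), device.get("location"))
```

Consistent with the release’s point about not needing cloud servers, SSDP discovery stays on the local network; a real framework would then fetch the `LOCATION` description and handle pairing and authorization on top.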

“Consumers have adopted a wide array of Internet-connected devices at home, in schools and workplaces, and CrossMount sets the new standard for easy cross-device interaction and resource sharing. We are particularly keen to open up this innovation to our wide ecosystem of customers and partners around the world, unleashing their imagination to create new immersive experiences that further enrich peoples’ lives,” said Joe Chen, Senior Vice President, MediaTek.

With CrossMount enabled devices, for example, viewers can simply pair their TV sound to their smartphone earphones or use their smartphone microphone as a voice controller to search content on their smart TV. This is a breakthrough in user experience as the CrossMount standard means several devices can act as one together rather than simply share content.

“CrossMount is a lot more than mirroring from phone to TV – as has already been developed within the industry,” added Joe Chen. “CrossMount goes the extra mile with hardware and software capability sharing between smart devices, thereby creating many useful and more complex use cases, such as mounting a smartphone camera to a TV and enabling the TV for a video conferencing session.”

To further drive the adoption of CrossMount as an industry standard, MediaTek is establishing the CrossMount Alliance to bring its wide ecosystem of partners and customers together and explore new possibilities to drive the technology forward. CrossMount will be open for developers to further expand the ability for innovative and new applications to be created, potentially changing the way we use and share devices and content. Chang-hong, Hisense, Lenovo and TCL are the first MediaTek customers to support CrossMount.

CrossMount will be made available to MediaTek customers and partners in the third quarter for Android-based smartphone, tablet and TV products, with devices expected on the market by end of this year.

II/2. LinkIt™ One Development Platform for wearables and IoT

March 20, 2015: MediaTek Labs – IoT Lab at 4YFN Barcelona

See how MediaTek Labs supported the winning team, Playdrop, who took advantage of the MediaTek LinkIt™ ONE development board to build an innovative water monitoring and control system prototype. Playdrop was just one of the competitors at IoT Lab, where developers from all over the world formed new teams and had less than two days to ideate, create business cases and working prototypes for the Internet of Things (IoT).

You can also hear from VP of MediaTek Labs, Marc Naddell, about how our development platforms will be supporting more developers on their journey into Wearables and IoT devices.

To find out more about MediaTek Labs & our offerings:

LinkIt ONE development platform: http://labs.mediatek.com/one

MediaTek Cloud Sandbox: http://labs.mediatek.com/sandbox

Get the tools you need to build your own Wearables and IoT devices, register now: http://labs.mediatek.com/register

Feb 18, 2015: What is MediaTek LinkIt™ ONE Development Platform?

MediaTek LinkIt™ ONE development platform enables you to design and prototype Wearables and Internet of Things (IoT) devices, using hardware and an API that are similar to those offered for Arduino boards.

The platform is based around the world’s smallest commercial System-on-Chip (SoC) for Wearables, MediaTek Aster (MT2502). This SoC also works with MediaTek’s energy-efficient Wi-Fi and GNSS companion chipsets. This means you can easily create devices that connect to other smart devices or directly to cloud applications and services.

To make it easy to prototype Wearables and IoT devices and their applications, the platform delivers:

- The LinkIt ONE Software Development Kit (SDK) for the creation of apps for LinkIt ONE devices. This SDK integrates with the Arduino software to deliver an API and development process that will be instantly familiar.

- The LinkIt ONE Hardware Development Kit (HDK) for prototyping devices. Based on a MediaTek hardware reference design, the HDK delivers the LinkIt ONE development board from Seeed Studio.

Key features of LinkIt ONE development platform:

- Optimized performance and power consumption to offer consumers appealing, functional Wearables and IoT devices

- Based on MediaTek Aster (MT2502) SoC, offering comprehensive communications and media options, with support for GSM, GPRS, Bluetooth 2.1 and 4.0, SD Cards, and MP3/AAC Audio, as well as Wi-Fi and GNSS (hardware dependent)

- Delivers an API, to access key features of the Aster SoC, that is similar to that for Arduino; enabling existing Arduino apps to be quickly ported and new apps created with ease

- LinkIt ONE developer board from partner Seeed Studio with similar pin-out to the Arduino UNO enabling a wide range of peripheral and circuits to be connected to the board

- LinkIt ONE SDK (for Arduino) offering instant familiarity to Arduino developers and an easy-to-learn toolset for beginners

LinkIt ONE architecture

Running on top of the Aster (MT2502) and, where used, its companion GNSS and Wi-Fi chipsets, the LinkIt ONE developer platform is based on an RTOS kernel. On top of this kernel is a set of drivers, middleware and protocol stacks that expose the features of the chipsets to a Framework. A run-time Environment then provides services to the Arduino porting layer that delivers the LinkIt ONE API for Arduino. The API is used to develop Arduino Sketches with the LinkIt ONE SDK (for Arduino).

*MT3332 (GPS) and MT5931 (WiFi) are optional

Hardware core: Aster (MT2502)

The hardware core for LinkIt ONE development platform is MediaTek Aster (MT2502). This chipset also works with our Wi-Fi and GNSS chips, offering high performance and low power consumption to Wearables and IoT devices.

Aster’s highly integrated System-on-Chip (SoC) design avoids the need for multiple chips, meaning smaller devices and reduced costs for device creators, as well as eliminating the need for compatibility tests.

With Aster, it’s now easier and cheaper for device manufacturers and the maker community to produce desirable, functional wearable products.

Key features

- The smallest commercial System-on-Chip (5.4mm×6.2mm) currently on the market

- CPU core: ARM7EJ-S 260MHz

- Memory: 4MB RAM, 4MB Flash

- PAN: Dual Bluetooth 2.1 (SPP) and 4.0 (GATT)

- WAN: GSM and GPRS modem

- Power: PMU and charger functions, low power mode with sensor hub function

- Multimedia: Audio (list formats), video (list formats), camera (list formats/resolutions)

- Interfaces: External ports for LCD, camera, I2C, SPI, UART, GPIO, and more

Get started with the LinkIt ONE development platform

Nov 12, 2014: MediaTek Labs – LinkIt workshop presentation

MediaTek Labs technical expert Pablo (Yuhsian) Sun provides an overview of the LinkIt Development Platform in this presentation recorded at XDA:DevCon. Pablo describes what LinkIt is and discusses why its capabilities, such as support for Wi-Fi, SMS, Grove peripherals and more, make it the ideal, cost-effective tool for prototyping wearable and IoT devices. He also covers the LinkIt ONE board, offering an in-depth look at its hardware, introduces the APIs, and shows how software is developed with Arduino and the LinkIt SDK. Links to additional resources are also provided. If you haven’t used the LinkIt development platform, this video provides you with all the basics to get started.

MediaTek Launches LinkIt™ Platform for Wearables and Internet of Things

TAIWAN, Hsinchu – June 3, 2014 – MediaTek today announced LinkIt™, a development platform built to accelerate the wearable and Internet of Things (IoT) markets. LinkIt integrates MediaTek’s Aster System on Chip (SoC), the smallest wearable SoC currently on the market. The MediaTek Aster SoC is designed to enable the developer community to create a broad range of affordable wearable and IoT products and solutions, for the billions of consumers in the rising Super-mid market to realize their potential as Everyday Geniuses.

Key features of MediaTek Aster and LinkIt:

- MediaTek Aster, the smallest SoC in a package size of 5.4×6.2mm specifically designed for wearable devices.

- LinkIt integrates MediaTek’s Aster SoC and is a developer platform supported by reference designs that enable creation of various form factors, functionalities, and internet connected services.

- Synergies between microprocessor unit and communication modules, facilitating development and saving time in new device creation.

- Modularity in software architecture provides developers with high degree of flexibility.

- Supports over-the-air (OTA) updates for apps, algorithms and drivers which enable “push and install” software stack (named MediaTek Capsule) from phones or computers to devices built with MediaTek Aster.

- Plug-in software development kit (SDK) for Arduino and Visual Studio. Support for Eclipse is planned for Q4 this year.

- Hardware Development Kit (HDK) based on a LinkIt board from a third party.

“MediaTek is now in a unique position to assume leadership by accelerating development for wearables and IoT, thanks to our LinkIt platform,” said J.C. Hsu, General Manager of New Business Development at MediaTek. “We are enabling an ecosystem of device makers, application developers and service providers to create innovations and new solutions for the Super-mid market.”

Eric Li, Vice President of Baidu, China’s Internet giant, said, “Baidu provides a wealth of services for its users on our Internet portal, and our offerings will enable MediaTek-powered devices to do much more than they already can. The IoT is inter-connecting devices, and we’re connecting people with information via such devices. Our partnership with MediaTek will bring both of us closer to our respective goals.”

Gonzague de Vallois, Senior Vice President of Gameloft, another one of MediaTek’s ecosystem partners, said, “The wearable devices era is a fascinating one for a game developer. The proliferation of devices equipped with all sorts of sensors measuring information from the human body is creating possibilities for us to develop games that are played differently, in ways that were never imagined before. We are pleased to be a partner of MediaTek, who is enabling the wearable devices future for us to continuously bring innovative games to gamers around the world.”

The launch of LinkIt is a part of MediaTek’s wider initiative for the developer community called MediaTek Labs™, which will officially launch later this year. MediaTek Labs will stimulate and support the creation of wearable devices and IoT applications based on the LinkIt platform. Developers and device makers who are interested in joining the MediaTek Labs program are invited to email labs-registration@mediatek.com to receive a notification once the program launches. For more information and ongoing updates, please go to http://labs.mediatek.com.

Oct 30, 2014:

LinkIt ONE Plus Version from Seeed Studio (http://www.seeedstudio.com/depot/LinkIt-ONE-p-2017.html)

Jan 3, 2015:

II/3. What is MediaTek LinkIt™ Connect 7681 development platform?

There is an increasing trend towards connecting every imaginable electrical or electronic device found in the home. For many of these applications developers simply want to add the ability to remotely control a device — turn on a table lamp, adjust the temperature setting of an air-conditioner or unlock a door. This is where the MediaTek MT7681 comes in.

MediaTek MT7681

MediaTek MT7681 is a compact Wi-Fi System-on-Chip (SoC) for IoT devices, with an embedded TCP/IP stack. Adding the MT7681 to an IoT device enables the device to connect to other smart devices or to cloud applications and services. The MT7681 connects over Wi-Fi, operating in either Wi-Fi station or access point (AP) mode.

In Wi-Fi station mode, MT7681 connects to a wireless AP and can then communicate with web services or cloud servers. A typical use of this option would be to enable a user to control the heating in their home from a home automation website.

To simplify the connection of an MT7681 chip to a wireless AP in Wi-Fi station mode, the MediaTek Smart Connection APIs are provided. These APIs enable a smart device app to remotely provision an MT7681 chip with AP details (SSID, authentication mode and password).
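The Smart Connection APIs themselves are not described here, so the following is only a hypothetical Python sketch of the provisioning record (SSID, authentication mode and password) that a phone app might check before handing it to those APIs. The names `ApCredentials` and `AuthMode` are illustrative assumptions, not MediaTek identifiers; the limits come from Wi-Fi itself (an SSID is at most 32 bytes, and a WPA2 passphrase is 8 to 63 printable ASCII characters).

```python
from dataclasses import dataclass
from enum import Enum


class AuthMode(Enum):
    # Hypothetical subset of authentication modes; the real Smart
    # Connection API may name or encode these differently.
    OPEN = "open"
    WPA2_PSK = "wpa2-psk"


@dataclass(frozen=True)
class ApCredentials:
    """AP details an app would send to provision an MT7681 (hypothetical)."""
    ssid: str
    auth_mode: AuthMode
    password: str = ""

    def validate(self) -> None:
        # IEEE 802.11 limits an SSID to 32 octets.
        ssid_len = len(self.ssid.encode("utf-8"))
        if not 1 <= ssid_len <= 32:
            raise ValueError(f"SSID must be 1-32 bytes, got {ssid_len}")
        if self.auth_mode is AuthMode.OPEN:
            if self.password:
                raise ValueError("open networks take no password")
            return
        # WPA2-PSK passphrases are 8-63 printable ASCII characters
        # (a raw 64-hex-digit key is also allowed but not handled here).
        if not 8 <= len(self.password) <= 63:
            raise ValueError("WPA2 passphrase must be 8-63 characters")
        if not all(32 <= ord(c) <= 126 for c in self.password):
            raise ValueError("WPA2 passphrase must be printable ASCII")
```

For example, `ApCredentials("HomeNet", AuthMode.WPA2_PSK, "correcthorse").validate()` returns without error, while a five-character passphrase raises `ValueError`. Catching such mistakes on the phone avoids a provisioning round trip that the device could never complete.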

In AP mode, an MT7681 chip acts as an AP, enabling other wireless devices to connect to it directly. Using this mode, for example, the developer of a smart light bulb could offer users a smartphone application that enables bulbs to be controlled from within the home.

To control the device an MT7681 is incorporated into, the chip provides five GPIO pins and one UART port. In addition, PWM is supported in software, for applications such as LED dimming.
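The text says only that PWM is emulated in software on the MT7681. The following is a generic Python sketch of how counter-based software PWM works, not MediaTek's firmware. Each period is split into `resolution` ticks, and the pin is held high for a share of those ticks that matches the duty cycle. `set_pin` stands in for a hypothetical GPIO write function, and `time.sleep` stands in for the hardware timer interrupt that real firmware would use.

```python
import time
from typing import Callable


def soft_pwm_pattern(duty_percent: float, resolution: int = 100) -> list[int]:
    """Pin states (1 = high, 0 = low) for one PWM period split into `resolution` ticks."""
    if not 0 <= duty_percent <= 100:
        raise ValueError("duty cycle must be between 0 and 100 percent")
    on_ticks = round(resolution * duty_percent / 100)
    # The pin stays high for the first `on_ticks` ticks, then low for the rest.
    return [1 if tick < on_ticks else 0 for tick in range(resolution)]


def run_soft_pwm(set_pin: Callable[[int], None], duty_percent: float,
                 period_s: float, cycles: int, resolution: int = 100) -> None:
    """Drive a pin through `cycles` PWM periods by writing its level on every tick."""
    pattern = soft_pwm_pattern(duty_percent, resolution)
    tick_s = period_s / resolution
    for _ in range(cycles):
        for level in pattern:
            set_pin(level)      # hypothetical GPIO write callback
            time.sleep(tick_s)  # desktop stand-in for a hardware timer tick
```

With `resolution=8` and a 25% duty cycle, one period is `[1, 1, 0, 0, 0, 0, 0, 0]`. An LED driven this fast looks about a quarter as bright. Python's sleep timing is far too coarse for flicker-free dimming, which is why firmware ties each tick to a timer interrupt instead.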

MediaTek LinkIt Connect 7681 development platform

To enable developers and makers to take advantage of the features of the MT7681, MediaTek Labs offers the MediaTek LinkIt Connect 7681 development platform, consisting of an SDK, HDK and related documentation.

For software development MediaTek LinkIt Connect 7681 SDK is provided for Microsoft Windows and Ubuntu Linux. Based on the Andes Development Kit, the SDK enables developers to create firmware to control an IoT device in response to instructions received wirelessly.

For IoT device prototyping, the LinkIt Connect 7681 development board is provided. The development board consists of a LinkIt Connect 7681 module, micro-USB port and pins for each of the I/O interfaces of the MT7681 chip. This enables you to quickly connect external hardware and peripherals to create device prototypes. The LinkIt Connect 7681 module, which measures just 15x18mm, is designed to easily mount on a PCB as part of production versions of an IoT device.

Key Features of MT7681

- Wi-Fi station and access point (AP) modes

- 802.11 b/g/n (in station mode) and 802.11 b/g (in AP mode)

- Smart connection APIs to easily create Android or iOS apps to provision a device with wireless AP settings

- TCP/IP stack

- Firmware upgrade over UART, APIs for FOTA implementation

- Software PWM emulation for LED dimming

Jan 15, 2015:

II/4. Join MediaTek Labs: The best free resources for Wearables and IoT

Get the tools and resources you and your company need to go from idea to prototype to product.

Register now to get access to:

- MediaTek SDKs

- MediaTek hardware reference designs

- Comment posting in our active developer forum

- Private messaging with other MediaTek Labs members

- Our solutions catalog, where you can share your project privately with MediaTek to unlock our support and matchmaking services

MediaTek Labs gives you the help you need to develop innovative hardware and software based on MediaTek products. From smart light bulbs, to the next-generation fitness tracker and the exciting world of the smartwatch, you can make your journey with our help.

As a registered Labs member you’ll be able to put your project in front of our business development team, who’ll help you find the partners you need to get you on the road to success. We’re here to help guide you through the exciting possibilities offered by the next wave in developer opportunities: Wearables and IoT.

MediaTek Labs is free to join:

Register today!

About MediaTek Labs

MediaTek is a young and entrepreneurial company that has grown quickly into a market leader. We identify with creative and driven pioneers in the maker and developer communities, and recognize the benefits of building an ecosystem that fosters your talents and your efforts to innovate.

MediaTek Labs is the developer hub for all our products. It builds on our track record for delivering industry-leading reference designs that offer the shortest time-to-market for our extensive customer and partner base.

MediaTek Launches Labs Developer Program to Jumpstart Wearable and IoT Device Creation

Unveils LinkIt™ platform; simplifies the development of hardware and software for developers, designers and makers

TAIWAN, Hsinchu — Sept 22, 2014 — MediaTek today launched MediaTek Labs (http://labs.mediatek.com), a global initiative that allows developers of any background or skill level to create wearable and Internet of Things (IoT) devices. The new program provides developers, makers and service providers with software development kits (SDKs), hardware development kits (HDKs), and technical documentation, as well as technical and business support.

“With the launch of MediaTek Labs we’re opening up a new world of possibilities for everyone — from hobbyists and students through to professional developers and designers — to unleash their creativity and innovation,” says Marc Naddell, vice president of MediaTek Labs. “We believe that the innovation enabled by MediaTek Labs will drive the next wave of consumer gadgets and apps that will connect billions of things and people around the world.”

The Labs developer program also features the LinkIt™ Development Platform, which is based on the MediaTek Aster (MT2502) chipset. The LinkIt Development Platform is one of the best-connected platforms, offering excellent integration for its package size and doing away with the need for additional connectivity hardware. LinkIt makes creating prototype wearable and IoT devices easy and cost effective by leveraging MediaTek’s proven reference design development model. The LinkIt platform consists of the following components:

- System-on-Chip (SoC) — MediaTek Aster (MT2502), the world’s smallest commercial SoC for Wearables, and companion Wi-Fi (MT5931) and GPS (MT3332) chipsets offering powerful, battery efficient technology.

- LinkIt OS — an advanced yet compact operating system that enables control software and takes full advantage of the features of the Aster SoC, companion chipsets, and a wide range of sensors and peripheral hardware.

- Hardware Development Kit (HDK) — Launching first with LinkIt ONE, a co-design project with Seeed Studio, the HDK will make it easy to add sensors, peripherals, and Arduino Shields to LinkIt ONE and create fully featured device prototypes.

- Software Development Kit (SDK) — Makers can easily migrate existing Arduino code to LinkIt ONE using the APIs provided. In addition, they get a range of APIs to make use of the LinkIt communication features: GSM, GPRS, Bluetooth, and Wi-Fi.

To ensure developers can make the most of the LinkIt offering, the MediaTek Labs website includes a range of additional services, including:

- Comprehensive business and technology overviews

- A Solutions Catalog where developers can share information on their devices, applications, and services and become accessible for matchmaking to MediaTek’s customers and partners

- Support services, including comprehensive FAQ, discussion forums that are monitored by MediaTek technical experts, and — for developers with solutions under development in the Solutions Catalog — free technical support.

“While makers still use their traditional industrial components for new connected IoT devices, with the LinkIt ONE hardware kit as part of MediaTek LinkIt Developer Platform, we’re excited to help Makers bring prototypes to market faster and more easily,” says Eric Pan, founder and chief executive officer of Seeed Studio.

Makers, designers and developers can sign up to MediaTek Labs today and download the full range of tools and documentation at http://labs.mediatek.com.

Mar 2, 2015:

MediaTek Labs Partner Connect

Taking any Wearables or IoT project beyond the prototype stage can be a daunting prospect, whether you’re a small startup or an established company making its first foray into new devices.

To make the path to market easier, MediaTek Labs Partner Connect will help find you the partners you need to make your idea a reality. The program includes some of the world’s best EMS, OEM and ODM companies, as well as distributors of MediaTek products and suppliers of device components. But the real benefit comes from our MediaTek Labs experts, who will work with you to match your requirements with the right partner or partners.

Getting started is simple. Once you have registered your company on MediaTek Labs, submit your Wearables or IoT project to our confidential Devices Catalog. It doesn’t matter where you are in the development process: perhaps you have an early prototype running on a LinkIt development board, or you have full CAD and BOM for your product. Either way, our experts can help. Simply select the “Seeking Partner” option when you submit your device for review by MediaTek Labs. Once it is approved, one of our partner managers will review your requirements and get to work finding the right partners for you.

Designers and developers

Our design partners can assist with specific expertise in electrical engineering, mechanical engineering and computer aided design (CAD), industrial design, regulatory compliance testing, software and more. Whether you’re looking for specific expertise to assist with a single aspect of your project or want a turnkey solution that delivers the vision of your prototype directly to manufacturing, these partners can help.

Manufacturers

From the late stages of development, where you need batches of production prototypes, through low-volume pilot runs for consumer testing and marketing, to full production, these partners can help. Working from your designs, they can turn your Wearables or IoT idea into a commercial consumer or enterprise product. They’ll do this using the latest manufacturing technology, in flexible facilities that can adapt to your needs as you find success in the market.

MediaTek distributors

If you have your own manufacturing facilities or a manufacturing partner and are looking to source MediaTek chipset modules in volumes beyond retail, these partners can help you. In addition to meeting your volume requirements, they’ll also provide additional technical information and support to ensure you make optimal use of MediaTek chipsets in your product and manufacturing process.

Component suppliers

This group of partners will be able to assist you from prototype to production: from selecting the components for your pre-production prototypes through to production run quantities of specific components. Batteries, sensors, screens and much more can be supplied by these partners, from evaluation batches to production quantities delivered to your manufacturing facility or manufacturing partner.