DataStax: a fully distributed and highly secure transactional database platform that is “always on”

When an open-source database written in Java that runs primarily in production on Linux becomes THE solution on Microsoft’s cloud platform (Azure) in the fully distributed, highly secure and “always on” transactional database space, we should take special note. That is the case with DataStax:

July 15, 2015: Building the intelligent cloud, Scott Guthrie’s keynote at the Microsoft Worldwide Partner Conference 2015 (the DataStax-related segment, only 7 minutes)

SCOTT GUTHRIE, EVP of Microsoft Cloud and Enterprise: What I’d like to do is invite three different partners now on stage, one an ISV, one an SI, and one a managed service provider to talk about how they’re taking advantage of our cloud offerings to accelerate their businesses and make their customers even more successful.

First, and I think, you know, being able to take advantage of all of these different capabilities that we now offer.

Now, the first partner I want to bring on stage is DataStax. DataStax delivers an enterprise-grade NoSQL offering based on Apache Cassandra. And they enable customers to build solutions that can scale across literally thousands of servers, which is perfect for a hyper-scale cloud environment.

And one of the customers that they’re working with is First American, who are deploying a solution on Microsoft Azure to provide richer insurance and settlement services to their customers.

What I’d like to do is invite Billy Bosworth, the CEO of DataStax, on stage to join me to talk about the partnership that we’ve had and how some of the great solutions that we’re building together. Here’s Billy. (Applause.)

Well, thanks for joining me, Billy. And it’s great to have you here.

BILLY BOSWORTH, CEO of DataStax: Thank you. It’s a real privilege to be here today.

SCOTT GUTHRIE: So tell us a little bit about DataStax and the technology you guys build.

BILLY BOSWORTH: Sure. At DataStax, we deliver Apache Cassandra in a database platform that is really purpose-built for the new performance and availability demands that are being generated by today’s Web, mobile and IOT applications.

With DataStax Enterprise, we give our customers a fully distributed and highly secure transactional database platform.

Now, that probably sounds like a lot of other database vendors out there as well. But, Scott, we have something that’s really different and really important to us and our customers, and that’s the notion of being always on. And when you talk about “always on” and transactional databases, things can get pretty complicated pretty fast, as you well know.

The reason for that is in an always-on world, the datacenter itself becomes a single point of failure. And that means you have to build an architecture that is going to be comprehensive and include multiple datacenters. That’s tough enough with almost any other piece of the software stack. But for transactional databases, that is really problematic.

Fortunately, we have a masterless architecture in Apache Cassandra that allows us to have DataStax enterprise scale in a single datacenter or across multiple datacenters, and yet at the same time remain operationally simple. So that’s really the core of what we do.
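The “masterless” point can be made concrete with a small sketch. It is an illustration only, under stated assumptions: one token per node, MD5 standing in for Cassandra’s default Murmur3 partitioner, and hypothetical node names and key. The idea it shows is that any node can work out which peers own a piece of data by looking at the ring, so no master has to be asked.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Map a string to a ring position (MD5 is a stand-in, not Cassandra's partitioner)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")


class Ring:
    """A toy token ring: every node holds the same view, so any node can route a request."""

    def __init__(self, nodes, replication_factor=3):
        self.replication_factor = replication_factor
        # Sort nodes by token so that a lookup is a binary search.
        self._entries = sorted((token(name), name) for name in nodes)
        self._tokens = [t for t, _ in self._entries]

    def replicas(self, partition_key: str):
        """Return the owners of a key: the first node at or after its token, then its successors."""
        size = len(self._entries)
        start = bisect.bisect_left(self._tokens, token(partition_key)) % size
        count = min(self.replication_factor, size)
        return [self._entries[(start + i) % size][1] for i in range(count)]


# Hypothetical five-node cluster and key.
nodes = ["node-a", "node-b", "node-c", "node-d", "node-e"]
ring = Ring(nodes)
owners = ring.replicas("title:12345")
print(owners)
```

Because the placement is a pure function of the key and the node list, losing any single node leaves the other replicas reachable, which is the property the always-on argument rests on.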

SCOTT GUTHRIE: Is the always-on angle the key differentiator in terms of the customer fit with Azure?

BILLY BOSWORTH: So if you think about deployment to multiple datacenters, especially and including Azure, it creates an immediate benefit. Going back to your hybrid clouds comment, we see a lot of our customers that begin their journey on premises. So they take their local datacenter, they install DataStax Enterprise, it’s an active database up and running. And then they extend that database into Azure.

Now, when I say that, I don’t mean they do so for disaster recovery or failover, it is active everywhere. So it is taking full read-write requests on premises and in Azure at the same time.

So if you lose connectivity to your physical datacenter, then the Azure active nodes simply take over. And that’s great, and that solves the always-on problem.

But that’s not the only thing that Azure helps to solve. Our applications, because of their nature, tend to drive incredibly high throughput. So for us, hundreds of millions or even tens and hundreds of billions of transactions a day is actually quite common.

You guys are pretty good, Scott, but I don’t think you’ve changed the laws of physics yet. And so the way that you get that kind of throughput with unbelievable performance demands, because our customers demand millisecond and microsecond response times, is you push the data closer to the end points. You geographically distribute it.

Now, what our customers are realizing is they can try and build 19 datacenters across the world, which I’m sure was really cheap and easy to do, or they can just look at what you’ve already done and turn to a partnership like ours to say, “Help us understand how we do this with Azure.”

So not only do you get the always-on benefit, which is critical, but there’s also a very important performance element to this type of architecture as well.
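The “laws of physics” remark can be put into numbers with a rough sketch. It assumes light travels through optical fiber at about 200,000 km/s (roughly two thirds of its speed in a vacuum) and ignores routing, queuing and processing time, so the results are lower bounds. The distances are hypothetical.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximation: about two thirds of c


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on the round-trip time over fiber for a given one-way distance."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


# Hypothetical distances between an end user and the nearest datacenter.
for label, km in [("same metro area", 50), ("same continent", 4_000), ("across an ocean", 10_000)]:
    print(f"{label}: at least {min_round_trip_ms(km):.1f} ms round trip")
```

Under these assumptions a user 10,000 km from the data cannot see a round trip below 100 ms, while one 50 km away can get to 0.5 ms, which is why millisecond response targets force the data to be distributed geographically.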

SCOTT GUTHRIE: Can you tell us a little bit about the work you did with First American on Azure?

BILLY BOSWORTH: Yeah. First American is a leading name in the title insurance and settlement services businesses. In fact, they manage more titles on more properties than anybody in the world.

Every title comes with an associated set of metadata. And that metadata becomes very important in the new way that they want to do business because each element of that needs to be transacted, searched, and done in real-time analysis to provide better information back to the customer in real time.

And so for that on the database side, because of the type of data and because of the scale, they needed something like DataStax Enterprise, which we’ve delivered. But they didn’t want to fight all those battles of the architecture that we discussed on their own, and that’s where they turned to our partnership to incorporate Microsoft Azure as the infrastructure with DataStax Enterprise running on top.

And this is one of many engagements that you know we have going on in the field that are really, really exciting and indicative of the way customers are thinking about transforming their business.

SCOTT GUTHRIE: So what’s it like working with Microsoft as a partner?

BILLY BOSWORTH: I tell you, it’s unbelievable. Or, maybe put differently, highly improbable that you and I are on stage together. I want you guys to think about this. Here’s the type of company we are. We’re an open-source database written in Java that runs primarily in production on Linux.

Now, Scott, Microsoft has a couple of pretty good databases, of which I’m very familiar from my past, and open source and Java and Linux haven’t always been synonymous with Microsoft, right?

So I would say the odds of us being on stage were almost none. But over the past year or two, the way that you guys have opened up your aperture to include technologies like ours — and I don’t just say “include.” His team has embraced us in a way that is truly incredible. For a company the size of Microsoft to make us feel the way we do is just remarkable given the fact that none of our technologies have been something that Microsoft has traditionally said is part of their family.

So I want to thank you and your team for all the work you’ve done. It’s been a great experience, but we are architecting systems that are going to drive businesses for the coming decades. And that is super exciting to have a partner like you engaged with us.

SCOTT GUTHRIE: Fantastic. Well, thank you so much for joining us on stage.

BILLY BOSWORTH: Thanks, Scott. (Applause.)

The data framework capabilities of DataStax are best understood via the following webinar, which presents Apache Spark as part of the complete data platform solution:

– Apache Cassandra is the leading distributed database in use at thousands of sites with the world’s most demanding scalability and availability requirements.

– Apache Spark is a distributed data analytics computing framework that has gained a lot of traction in processing large amounts of data in an efficient and user-friendly manner.

– The joining of both provides a powerful combination of real-time data collection with analytics.

After a brief overview of Cassandra and Spark (Cassandra till 16:39, Spark till 19:25), this class will dive into various aspects of the integration (from 19:26).

August 19, 2015: Big Data Analytics with Cassandra and Spark by Brian Hess, Senior Product Manager of Analytics, DataStax

September 23, 2015: DataStax Announces Strategic Collaboration with Microsoft, company press release

- DataStax delivers a leading fully-distributed database for public and private cloud deployments

- DataStax Enterprise on Microsoft Azure enables developers to develop, deploy and monitor enterprise-ready IoT, Web and mobile applications spanning public and private clouds

- Scott Guthrie, EVP Cloud and Enterprise, Microsoft, to co-deliver Cassandra Summit 2015 keynote

SANTA CLARA, CA – September 23, 2015 – (Cassandra Summit 2015) DataStax, the company that delivers Apache Cassandra™ to the enterprise, today announced a strategic collaboration with Microsoft to deliver Internet of Things (IoT), Web and mobile applications in public, private or hybrid cloud environments. With DataStax Enterprise (DSE), a leading fully-distributed database platform, available on Azure, Microsoft’s cloud computing platform, enterprises can quickly build high-performance applications that can massively scale and remain operationally simple across public and private clouds, with ease and at lightning speed.

PERSPECTIVES ON THE NEWS

“At Microsoft we’re focused on enabling customers to run their businesses more productively and successfully,” said Scott Guthrie, Executive Vice President, Cloud and Enterprise, Microsoft. “As more organizations build their critical business applications in the cloud, DataStax has proved to be a natural Azure partner through their ability to enable enterprises to build solutions that can scale across thousands of servers which is necessary in today’s hyper-scale cloud environment.”

“We are witnessing an increased adoption of DataStax Enterprise deployments in hybrid cloud environments, so closely aligning with Microsoft benefits any organization looking to quickly and easily build high-performance IoT, Web and mobile apps,” said Billy Bosworth, CEO, DataStax. “Working with a world-class organization like Microsoft has been an incredible experience and we look forward to continuing to work together to meet the needs of enterprises looking to successfully transition their business to the cloud.”

“As a leader in providing information and insight in critical areas that shape today’s business landscape, we knew it was critical to transform our back-end business processes to address scale and flexibility,” said Graham Lammers, Director, IHS. “With DataStax Enterprise on Azure we are now able to create a next generation big data application to support the decision-making process of our customers across the globe.”

BUILD SIMPLE, SCALABLE AND ALWAYS-ON APPS IN RECORD SPEED

To address the ever-increasing demands of modern businesses transitioning from on-premise to hybrid cloud environments, the DataStax Enterprise on Azure on-demand cloud database solution provides enterprises with both development and production ready Bring Your Own License (BYOL) DSE clusters that can be launched in minutes on the Microsoft Azure Marketplace using Azure Resource Management (ARM) Templates. This enables the building of high-performance IoT, Web and mobile applications that can predictably scale across global Azure data centers with ease and at remarkable speed. Additional benefits include:

- Hybrid Deployment: Easily move DSE workloads between data centers, service providers and Azure, and build hybrid applications that leverage resources across all three.

- Simplicity: Easily manage, develop, deploy and monitor database clusters by eliminating data management complexities.

- Scalability: Quickly replicate online applications globally across multiple data centers into the cloud/hybrid cloud environment.

- Continuous Availability: DSE’s peer-to-peer architecture offers no single point of failure. DSE also provides maximum flexibility to distribute data where it’s needed most by replicating data across multiple data centers, the cloud and mixed cloud/on-premise environments.
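To illustrate the continuous-availability claim, here is a minimal sketch of the quorum arithmetic behind multi-datacenter replication. The datacenter names and the replication factor of 3 per datacenter are hypothetical. The quorum formula (more than half of the replicas) is the one Cassandra uses for its QUORUM and LOCAL_QUORUM consistency levels.

```python
def quorum(replicas: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1


# Hypothetical layout: three replicas on premises and three in Azure.
replication = {"on_prem": 3, "azure": 3}


def local_quorum_ok(datacenter: str, live: dict) -> bool:
    """LOCAL_QUORUM only counts replicas in the datacenter handling the request."""
    return live.get(datacenter, 0) >= quorum(replication[datacenter])


def global_quorum_ok(live: dict) -> bool:
    """QUORUM counts replicas across all datacenters."""
    return sum(live.values()) >= quorum(sum(replication.values()))


# Scenario: connectivity to the on-premises datacenter is lost entirely.
live_after_outage = {"on_prem": 0, "azure": 3}
print("Azure LOCAL_QUORUM still served:", local_quorum_ok("azure", live_after_outage))
print("Global QUORUM still served:", global_quorum_ok(live_after_outage))
```

In this scenario requests at LOCAL_QUORUM keep working in Azure (2 of 3 local replicas are enough), whereas a global QUORUM would need 4 of 6 replicas and would fail. That trade-off is one reason datacenter-local consistency levels are commonly paired with this kind of active-everywhere deployment.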

MICROSOFT ENTERPRISE CLOUD ALLIANCE & FAST START PROGRAM

DataStax also announced it has joined Microsoft’s Enterprise Cloud Alliance, a collaboration that reinforces DataStax’s commitment to provide the best set of on-premise, hosted and public cloud database solutions in the industry. The goal of Microsoft’s Enterprise Cloud Alliance partner program is to create, nurture and grow a strong partner ecosystem across a broad set of Enterprise Cloud Products delivering the best on-premise, hosted and Public Cloud solutions in the industry. Through this alliance, DataStax and Microsoft are working together to create enhanced enterprise-grade offerings for the Azure Marketplace that reduce the complexities of deployment and provisioning through automated ARM scripting capabilities.

Additionally, as a member of Microsoft Azure’s Fast Start program, created to help users quickly deploy new cloud workloads, DataStax users receive immediate access to the DataStax Enterprise Sandbox on Azure for a hands-on experience testing out DSE on Azure capabilities. DataStax Enterprise Sandbox on Azure can be found here.

Cassandra Summit 2015, the world’s largest gathering of Cassandra users, is taking place this week and Microsoft Cloud and Enterprise Executive Vice President Scott Guthrie, DataStax CEO Billy Bosworth, and Apache Cassandra Project Chair and DataStax Co-founder and CTO Jonathan Ellis, will deliver the conference keynote at 10 a.m. PT on Wednesday, September 23. The keynote can be viewed at DataStax.com.

ABOUT DATASTAX

DataStax delivers Apache Cassandra™ in a database platform purpose-built for the performance and availability demands for IoT, Web and mobile applications. This gives enterprises a secure, always-on database technology that remains operationally simple when scaling in a single datacenter or across multiple datacenters and clouds.

With more than 500 customers in over 50 countries, DataStax is the database technology of choice for the world’s most innovative companies, such as Netflix, Safeway, ING, Adobe, Intuit and eBay. Based in Santa Clara, Calif., DataStax is backed by industry-leading investors including Comcast Ventures, Crosslink Capital, Lightspeed Venture Partners, Kleiner Perkins Caufield & Byers, Meritech Capital, Premji Invest and Scale Venture Partners. For more information, visit DataStax.com or follow us @DataStax.

September 30, 2014: Why Datastax’s increasing presence threatens Oracle’s database by Anne Shields at Market Realist

Must know: An in-depth review of Oracle’s 1Q15 earnings (Part 9 of 12)

Datastax databases are built on open-source technologies

Datastax is a California-based database management company. It offers an enterprise-grade NoSQL database that seamlessly and securely integrates real-time data with Apache Cassandra. Databases built on Apache Cassandra offer more flexibility than traditional databases. Even in case of calamities and uncertainties, like floods and earthquakes, data is available due to its replication at other data centers. NoSQL and Cassandra are open-source software.

The Cassandra database was developed by Facebook (FB) to handle its enormous volumes of data. The technology behind Cassandra builds on designs published by Amazon (AMZN), in Dynamo, and Google (GOOGL), in Bigtable. Oracle’s MySQL (ORCL), Microsoft’s SQL Server (MSFT), and IBM’s DB2 (IBM) are the traditional databases present in the market.

The above chart shows how NoSQL databases, NewSQL databases, and Data grid/cache products fit into the wider data management landscape.

Huge amounts of funds raised in the open-source technology database space

Datastax raised $106 million in September 2014 to expand its database operations. MongoDB Inc. and Couchbase Inc.—both open-source NoSQL database developers—raised $231 million and $115 million, respectively, in 2014. According to Market Research Media, a consultancy firm, spending on NoSQL technology in 2013 was less than $1 billion. It’s expected to reach $3.4 billion by 2020. This explains why this segment is attracting such huge investments.

Oracle’s dominance in the database market is uncertain

Oracle claims it’s a market leader in the relational database market, with a revenue share of 48.3%. In 2013, it launched Oracle Database 12c. According to Oracle, “Oracle Database 12c introduces a new multitenant architecture that simplifies the process of consolidating databases onto the cloud; enabling customers to manage many databases as one — without changing their applications.”

In July 2013, DataStax announced that dozens of companies have migrated from Oracle databases to DataStax databases. Customers cited scalability, disaster avoidance, and cost savings as the reasons for shifting databases. Datastax databases’ rising popularity jeopardizes Oracle’s dominant position in the database market.

September 24, 2015: Building a better experience for Azure and DataStax customers by Matt Rollender, VP Cloud Strategy, DataStax, Inc. on Microsoft Azure blog

Cassandra Summit is in high gear this week in Santa Clara, CA, representing the largest NoSQL event of its kind! This is the largest Cassandra Summit to date. With more than 7,000 attendees (both onsite and virtual), this is the first time the Summit is a three-day event with over 135 speaking sessions. This is also the first time DataStax will debut a formalized Apache Cassandra™ training and certification program in conjunction with O’Reilly Media. All incredibly exciting milestones!

We are excited to share another milestone. Yesterday, we announced our formal strategic collaboration with Microsoft. Dedicated DataStax and Microsoft teams have been collaborating closely behind the scenes for more than a year on product integration, QA testing, platform optimization, automated provisioning, and characterization of DataStax Enterprise (DSE) on Azure, and more to ensure product validation and a great customer experience for users of DataStax Enterprise on the Azure cloud. There is strong coordination across the two organizations – very close executive, field, and technical alignment – all critical components for a strong partnership.

This partnership is driven and shaped by our joint customers. Our customers oftentimes begin their journey with on-premise deployments of our database technology and then have a requirement to move to the cloud – Microsoft is a fantastic partner to help provide the flexibility of a true hybrid environment along with the ability to migrate to and scale applications in the cloud. Additionally, Microsoft has significant breadth regarding their data centers – customers can deploy in numerous Azure data centers around the globe, in order to be ‘closer’ to their end users. This is highly complementary to DataStax Enterprise software as we are a peer-to-peer distributed database and our customers need to be close to their end users with their always-on, always available enterprise applications.

To highlight a couple of joint customers and use cases we have First American Title and IHS, Inc. First American is a leading provider of title insurance and settlement services with revenue over $5B. They ingest and store the largest number (billions) of real estate property records in the industry. Accessing, searching and analyzing large data-sets to get relevant details quickly is the new way they want to do business – to provide better information back to their customers in real-time and allow end users to easily search through the property records on-line. They chose DSE and Azure because of the large data requirements and because of the need to continue to scale the application.

A second great customer and use case is IHS, Inc., a $2B revenue-company that provides information and analysis to support the decision-making process of businesses and governments. This is a transformational project for IHS as they are building out an ‘internet age’ parts catalog – it’s a next generation big data application, using NoSQL, non-relational technology and they want to deploy in the cloud to bring the application to market faster.

As you can see, we are enabling enterprises to engage their customer like never before with their always on, highly available and distributed applications. Stay tuned for more as we move forward together in the coming months!

For additional information go to http://www.datastax.com/marketplace-microsoft-azure to try out DataStax Enterprise Sandbox on Azure.

See also DataStax Enterprise Cluster Production on Microsoft Azure Marketplace

September 23, 2015: Making Cassandra Do Azure, But Not Windows by Timothy Prickett Morgan Co-Editor, Co-Founder, The Next Platform

When Microsoft says that it is embracing Linux as a peer to Windows, it is not kidding. The company has created its own Linux distribution for switches used to build the Azure cloud, and it has embraced Spark in-memory processing and Cassandra as its data store for its first major open source big data project – in this case to help improve the quality of its Office365 user experience. And now, Microsoft is embracing Cassandra, the NoSQL data store originally created by Facebook when it could no longer scale the MySQL relational database to suit its needs, on the Azure public cloud.

Billy Bosworth, CEO at DataStax, the entity that took over steering development of and providing commercial support for Cassandra, tells The Next Platform that the deal with Microsoft has a number of facets, all of which should help boost the adoption of the enterprise-grade version of Cassandra. But the key one is that the Global 2000 customers that DataStax wants to sell support and services to are already quite familiar with Windows Server in their datacenters, and they are looking to burst out to the Azure cloud on a global scale.

“We are seeing a rapidly increasing number of our customers who need hybrid cloud, keeping pieces of our DataStax Enterprise on premise in their own datacenters and they also want to take pieces of that same live transactional data – not replication, but live data – and in the Azure cloud as well,” says Bosworth. “They have some unique capabilities, and one of the major requirements of customers is that even if they use cloud infrastructure, it still has to be distributed by the cloud provider. They can’t just run Cassandra in one availability zone in one region. They have to span data across the globe, and Microsoft has done a tremendous job of investing in its datacenters.”

With the Microsoft agreement, DataStax is now running its wares on the three big clouds, with Amazon Web Services and Google Compute Engine already certified able to run the production-grade Cassandra. And interestingly enough, Microsoft is supporting the DataStax implementation of Cassandra on top of Linux, not Windows. Bosworth says that while Cassandra can be run on Windows servers, DataStax does not recommend putting DataStax Enterprise (DSE), the commercial release, on Windows. (It does have a few customers who do, nonetheless, and it supports them.) Bosworth adds that DataStax and the Cassandra community have been “working diligently” for the past year to get a Windows port of DSE completed and that there has been “zero pressure” for the Microsoft Azure team to run DSE on anything other than Linux.

It is important to make the distinction between running Cassandra and other elements of DSE on Windows and having optimized drivers for Cassandra for the .NET programming environment for Windows.

“All we are really talking about is the ability to run the back-end Cassandra on Linux or Windows, and to the developer, it is irrelevant on what that back end is running,” explains Bosworth. “This takes away some of that friction, and what we find is that on the back end, we just don’t find religious conviction about whether it should run on Windows or Linux, and this is different from five years ago. We sell mostly to enterprises, and we have not had one customer raise their hand and say they can’t use DSE because it does not run on Windows.”

What is more important is the ability to seamlessly put Cassandra on public clouds and spread transactional data around for performance and resiliency reasons – the same reasons that Facebook created Cassandra for in the first place.

What Is In The Stack, Who Uses It, And How

The DataStax Enterprise distribution does not just include the Apache Cassandra data store, but has an integrated search engine that is API compatible with the open source Solr search engine and in-memory extensions that can speed up data accesses by anywhere from 30X to 100X compared to server clusters using flash SSDs or disk drives. The Cassandra data store can be used to underpin Hadoop, allowing it to be queried by MapReduce, Hive, Pig, and Mahout, and it can also underpin Spark and Spark Streaming as their data stores if customers decide to not go with the Hadoop Distributed File System that is commonly packaged with a Hadoop distribution.

It is hard to say for sure how many organizations are running Cassandra today, but Bosworth reckons that it is on the order of tens of thousands worldwide, based on a number of factors. DataStax does not do any tracking of its DataStax Community edition because it wants a “frictionless download” like many open source projects have. (Developers don’t want software companies to see what tools they are playing with, even though they might love open source code.) DataStax provides free training for Cassandra, however, where it does keep track, and developers are consuming over 10,000 units of this training per month, so that probably indicates that the Cassandra installed base (including tests, prototypes, and production) is in the five figures.

DataStax itself has over 500 paying customers – now including Microsoft after its partner tried to build its own Spark-Cassandra cluster using open source code and decided that the supported versions were better thanks to the extra goodies that DataStax puts into its distro. DataStax has 30 of the Fortune 100 using its distribution of Cassandra in one form or another, and it is always for transactional, rather than batch analytic, jobs and in most cases also for distributed data stores that make use of the “eventual consistency” features of Cassandra to replicate data across multiple clusters. The company has another 600 firms participating in its startup program, which gives young companies freebie support on the DSE distro until they hit a certain size and can afford to start kicking some cash into the kitty.

The largest installation of Cassandra is running at Apple, which as we previously reported has over 75,000 nodes, with clusters ranging in size from hundreds to over 1,000 nodes and with a total capacity in the petabytes range. Netflix, which used to employ the open source Cassandra, switched to DSE last May and had over 80 clusters with more than 2,500 nodes supporting various aspects of its video distribution business. In both cases, Cassandra is very likely housing user session state data as well as feeding product or play lists and recommendations or doing faceted search for their online customers.

We are always intrigued to learn how customers are actually deploying tools such as Cassandra in production and how they scale it. Bosworth says that it is not uncommon to run a prototype project on as few as ten nodes, and when the project goes into production, to see it grow to dozens to hundreds of nodes. The midrange DSE clusters range from maybe 500 to 1,000 nodes and there are some that get well over 1,000 nodes for large-scale workloads like those running at Apple.

In general, Cassandra does not, like Hadoop, run on disk-heavy nodes. Remember, the system was designed to support hot transactional data, not to become a lake with a mix of warm and cold data that would be sifted in batch mode as is still done with MapReduce running atop Hadoop.

The typical node configuration has changed as Cassandra has evolved and improved, says Robin Schumacher, vice president of products at DataStax. But before getting into feeds and speeds, Schumacher offered this advice. “There are two golden rules for Cassandra. First, get your data model right, and second, get your storage system right. If you get those two things right, you can do a lot wrong with your configuration or your hardware and Cassandra will still treat you right. Whenever we have to dive in and help someone out, it is because they have just moved over a relational data model or they have hooked their servers up to a NAS or a SAN or something like that, which is absolutely not recommended.”

Only four years ago, because of the limitations in Cassandra (which like Hadoop and many other analytics tools is coded in Java), the rule of thumb was to put no more than 512 GB of disk capacity onto a single node. (It is hard to imagine such small disk capacities these days, with 8 TB and 10 TB disks.) The typical Cassandra node has two processors, with somewhere between 12 and 24 cores, and has between 64 GB and 128 GB of main memory. Customers who want the best performance tend to go with flash SSDs, although you can do all-disk setups, too.

Fast forward to today, and Cassandra can make use of a server node with maybe 5 TB of capacity for a mix of reads and writes, and if you have a write intensive application, then you can push that up to 20 TB. (DataStax has done this in its labs, says Schumacher, without any performance degradation.) Pushing the capacity up is important because it helps reduce server node count for a given amount of storage, which cuts hardware and software licensing and support costs. Incidentally, only a quarter of DSE customers surveyed said they were using spinning disks, but disk drives are fine for certain kinds of log data. SSDs are used for most transactional data, but the bits that are most latency sensitive should use DSE to store data on PCI-Express flash cards, which have lower latency.
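The link between per-node capacity and node count is simple arithmetic, sketched below. The per-node capacities (512 GB, 5 TB and 20 TB) come from the figures above. The 100 TB data set and the replication factor of 3 are hypothetical, and the calculation ignores compaction headroom and other overhead that a real sizing exercise would include.

```python
import math

GB_PER_TB = 1024


def nodes_needed(raw_data_gb: float, replication_factor: int, capacity_per_node_gb: float) -> int:
    """Nodes required to hold every replica of the data, ignoring operational headroom."""
    return math.ceil(raw_data_gb * replication_factor / capacity_per_node_gb)


# Hypothetical workload: 100 TB of unique data, each row stored three times.
raw_data_gb = 100 * GB_PER_TB
replication_factor = 3

for label, capacity_gb in [
    ("512 GB per node (older rule of thumb)", 512),
    ("5 TB per node (mixed reads and writes)", 5 * GB_PER_TB),
    ("20 TB per node (write-intensive)", 20 * GB_PER_TB),
]:
    count = nodes_needed(raw_data_gb, replication_factor, capacity_gb)
    print(f"{label}: {count} nodes")
```

For this hypothetical workload the node count drops from 600 to 60 to 15 as per-node capacity rises, which is where the savings in hardware, licensing and support come from.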

Schumacher says that in most cases, the commercial-grade DSE Cassandra is used for a Web or mobile application, and a DSE cluster is not set up for hosting multiple applications, but rather companies have a different cluster for each use case. (As you can see is the case with Apple and Netflix.) Most of the DSE shops make use of the eventual consistency replication features of Cassandra to span multiple datacenters with their data stores, and span anywhere from eight to twelve datacenters with their transactional data.

Here’s where it gets interesting, and why Microsoft is relevant to DataStax. Only about 30 percent of the DSE installations are running on premises. The remaining 70 percent are running on public clouds. About half of DSE customers are running on Amazon Web Services, with the remaining 20 percent split more or less evenly between Google Compute Engine and Microsoft Azure. If DataStax wants to grow its business, the easiest way to do that is to grow along with AWS, Compute Engine, and Azure.

So Microsoft and DataStax are sharing their roadmaps and coordinating development of their respective wares, and will be doing product validation, benchmarking, and optimization. The two will be working on demand generation and marketing together, too, and aligning their compensation to sell DSE on top of Azure and, eventually, on top of Windows Server for those who want to run it on premises.

In addition to announcing the Microsoft partnership at the Cassandra Summit this week, DataStax is also releasing its DSE 4.8 stack, which includes certification for Cassandra to be used as the back end for the new Spark 1.4 in-memory analytics tool. DSE Search has performance boosts for live indexing, and running DSE instances inside of Docker containers has been improved. The stack also includes Titan 1.0, the graph database overlay for Cassandra, HBase, and BerkeleyDB that DataStax got through its acquisition of Aurelius back in February. DataStax is also previewing Cassandra 3.0, which will include support for JSON documents, role-based access control, and a lot of little tweaks that will make the storage more efficient, DataStax says. It is expected to ship later this year.

Scott Guthrie about changes under Nadella, the competition with Amazon, and what differentiates Microsoft’s cloud products

Scott Guthrie, Executive Vice President, Microsoft Cloud and Enterprise group

As executive vice president of the Microsoft Cloud and Enterprise group, Scott Guthrie is responsible for the company’s cloud infrastructure, server, database, management and development tools businesses. His engineering team builds Microsoft Azure, Windows Server, SQL Server, Active Directory, System Center, Visual Studio and .NET. Prior to leading the Cloud and Enterprise group, Guthrie helped lead Microsoft Azure, Microsoft’s public cloud platform. Since joining the company in 1997, he has made critical contributions to many of Microsoft’s key cloud, server and development technologies and was one of the original founders of the .NET project. Guthrie graduated with a bachelor’s degree in computer science from Duke University. He lives in Seattle with his wife and two children. Source: Microsoft

From The cloud, not Windows 10, is key to Microsoft’s growth [Fortune, Oct 1, 2014]

- about changes under Nadella:

Well, I don’t know if I’d say there’s been a big change from that perspective. I mean, I think obviously we’ve been saying for a while this mobile-first, cloud-first…”devices and services” is maybe another way to put it. That’s been our focus as a company even before Satya became CEO. From a strategic perspective, I think we very much have been focused on cloud now for a couple of years. I wouldn’t say this now means, “Oh, now we’re serious about cloud.” I think we’ve been serious about cloud for quite a while.

More information: Satya Nadella on “Digital Work and Life Experiences” supported by “Cloud OS” and “Device OS and Hardware” platforms–all from Microsoft [‘Experiencing the Cloud’, July 23, 2014]

- about the competition with Amazon:

… I think there’s certainly a first mover advantage that they’ve been able to benefit from. … In terms of where we’re at today, we’ve got about 57% of the Fortune 500 that are now deployed on Microsoft Azure. … Ultimately the way we think we do that [gain on the current leader] is by having a unique set of offerings and a unique point of view that is differentiated.

- about uniqueness of Microsoft offering:

One is, we’re focused on and delivering a hyper-scale cloud platform with our Azure service that’s deployed around the world. …

… that geographic footprint, as well as the economies of scale that you get when you install and have that much capacity, puts you in a unique position from an economic and from a customer capability perspective …

Where I think we differentiate then, versus the other two, is around two characteristics. One is enterprise grade and the focus on delivering something that’s not only hyper-scale from an economic and from a geographic reach perspective but really enterprise-grade from a capability, support, and overall services perspective. …

The other thing that we have that’s fairly unique is a very large on-premises footprint with our existing server software and with our private cloud capabilities. …

Satya Nadella on “Digital Work and Life Experiences” supported by “Cloud OS” and “Device OS and Hardware” platforms–all from Microsoft

Update: Gates Says He’s Very Happy With Microsoft’s Nadella [Bloomberg TV, Oct 2, 2014] + Bill Gates is trying to make Microsoft Office ‘dramatically better’ [The Verge, Oct 3, 2014]

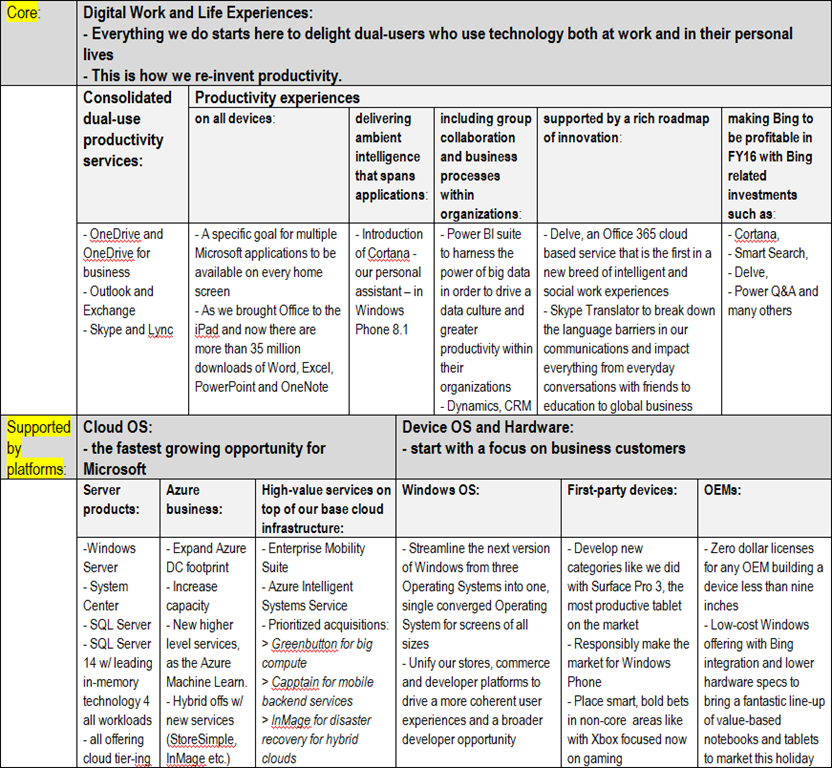

For me, this is the essence of the Microsoft Fiscal Year 2014 Fourth Quarter Earnings Conference Call (see also the Press Release and Download Files): the new, extremely encouraging overall setup of Microsoft in strategic terms (the table below is mine, based on what Satya Nadella said on the conference call):

These are extremely encouraging strategic advancements vis-à-vis the ones previously publicized in the following Microsoft-related posts of mine:

- Microsoft Surface Pro 3 is the ultimate tablet product from Microsoft. What the market response will be? [this same blog, May 21, 2014]

- What Microsoft will do with the Nokia Devices and Services now taken over, but currently producing a yearly loss rate of as much as $1.5 billion? [this same blog, April 29, 2014]

- Microsoft BUILD 2014 Day 2: “rebranding” to Microsoft Azure and moving toward a comprehensive set of fully-integrated backend services [this same blog, April 27, 2014]

- Microsoft is transitioning to a world with more usage and more software driven value add (rather than the old device driven world) in mobility and the cloud, the latter also helping to grow the server business well above its peers [this same blog, April 25, 2014]

- Intel’s desperate attempt to establish a sizeable foothold on the tablet market until its 14nm manufacturing leadership could provide a profitable position for the company in 2016 [this same blog, April 27, 2014]

- Intel CTE initiative: Bay Trail-Entry V0 (Z3735E and Z3735D) SoCs are shipping next week in $129 Onda (昂达) V819i Android tablets—Bay Trail-Entry V2.1 (Z3735G and Z3735F) SoCs might ship in $60+ Windows 8.1 tablets from Emdoor Digital (亿道) in the 3d quarter [this same blog, April 11, 2014]

- Enhanced cloud-based content delivery services to anyone, on any device – from Microsoft (Microsoft Azure Media Services) and its solution partners [this same blog, April 8, 2014]

- Microsoft BUILD 2014 Day 1: new and exciting stuff for MS developers [this same blog, April 5, 2014]

- IDF14 Shenzhen: Intel is levelling the Wintel playing field with Android-ARM by introducing new competitive Windows tablet price points from $99 – $129 [this same blog, April 4, 2014]

- Microsoft BUILD 2014 Day 1: consistency and superiority accross the whole Windows family extended now to TVs and IoT devices as well—$0 royalty licensing program for OEM and ODM partners in sub 9” phone and tablet space [this same blog, April 2, 2014]

- An upcoming new era: personalised, pro-active search and discovery experiences for Office 365 (Oslo) [this same blog, April 2, 2014]

- OneNote is available now on every platform (+free!!) and supported by cloud services API for application and device builders [this same blog, March 18, 2014]

- View from Redmond via Tim O’Brien, GM, Platform Strategy at Microsoft [this same blog, Feb 21, 2014]

- “Cloud first”: the origins and the current meaning [this same blog, Feb 18, 2014]

- “Mobile first”: the origins and the current meaning [this same blog, Feb 18, 2014]

- Microsoft’s half-baked cloud computing strategy (H1’FY14) [this same blog, Feb 17, 2014]

- The first “post-Ballmer” offering launched: with Power BI for Office 365 everyone can analyze, visualize and share data in the cloud [this same blog, Feb 10, 2014]

- John W. Thompson, Chairman of the Board of Microsoft: the least recognized person in the radical two-men shakeup of the uppermost leadership [this same blog, Feb 6, 2014]

- The extraordinary attempt by Nokia/Microsoft to crack the U.S. market in terms of volumes with Nokia Lumia 521 (with 4G/LTE) and Nokia Lumia 520 [this same blog, Jan 18, 2014]

- 2014 will be the last year of making sufficient changes for Microsoft’s smartphone and tablet strategies, and those changes should be radical if the company wants to succeed with its devices and services strategy [this same blog, Jan 17, 2014]

- Will, with disappearing old guard, Satya Nadella break up the Microsoft behemoth soon enough, if any? [this same blog, Feb 5, 2014]

- Microsoft products for the Cloud OS [this same blog, Dec 18, 2013]

- Satya Nadella’s (?the next Microsoft CEO?) next ten years’ vision of “digitizing everything”, Microsoft opportunities and challenges seen by him with that, and the case of Big Data [this same blog, Dec 13, 2013]

- Leading PC vendors of the past: Go enterprise or die! [this same blog, Nov 7, 2013]

- Microsoft could be acquired in years to come by Amazon? The joke of the day, or a certain possibility (among other ones)? [this same blog, Sept 16, 2013]

- The question mark over Wintel’s future will hang in the air for two more years [this same blog, Sept 15, 2013]

- The long awaited Windows 8.1 breakthrough opportunity with the new Intel “Bay Trail-T”, “Bay Trail-M” and “Bay Trail-D” SoCs? [this same blog, Sept 14, 2013]

- How the device play will unfold in the new Microsoft organization? [this same blog, July 14, 2013]

- Microsoft reorg for delivering/supporting high-value experiences/activities [this same blog, July 11, 2013]

- Microsoft partners empowered with ‘cloud first’, high-value and next-gen experiences for big data, enterprise social, and mobility on wide variety of Windows devices and Windows Server + Windows Azure + Visual Studio as the platform [this same blog, July 10, 2013]

- Windows Azure becoming an unbeatable offering on the cloud computing market [this same blog, June 28, 2013]

- Proper Oracle Java, Database and WebLogic support in Windows Azure including pay-per-use licensing via Microsoft + the same Oracle software supported on Microsoft Hyper-V as well [this same blog, June 25, 2013]

- “Cloud first” from Microsoft is ready to change enterprise computing in all of its facets [this same blog, June 4, 2013]

I do, however, see the continuation of the Lumia story as particularly challenging under the above strategy, since the results of the previous, combined Ballmer/Elop (Nokia) strategy were extremely weak:

It is worthwhile to include here the videos Bloomberg published simultaneously with the Microsoft Fourth Quarter Earnings Conference Call:

Inside Microsoft’s Secret Surface Labs [Bloomberg News, July 22, 2014]

Will Microsoft Kinect Be a Medical Game-Changer? [Bloomberg News, July 22, 2014]

Why Microsoft Puts GPS In Meat For Alligators [Bloomberg News, July 22, 2014]

To this it is important to add: How Pier 1 is using the Microsoft Cloud to build a better relationship with their customers [Microsoft Server and Cloud YouTube channel, July 21, 2014]

as well as:

Microsoft Surface Pro 3 vs. MacBook Air 13″ 2014 [CNET YouTube channel, July 21, 2014]

Surface Pro 3 vs. MacBook Air (2014) [CTNtechnologynews YouTube channel, July 1, 2014]

In addition, here are some explanatory quotes (on the new overall setup of Microsoft) worth including from the Q&A part of Microsoft’s (MSFT) CEO Satya Nadella on Q4 2014 Results – Earnings Call Transcript [Seeking Alpha, Jul. 22, 2014 10:59 PM ET]

…

Mark Moerdler – Sanford Bernstein

Thank you. And Amy one quick question, we saw a significant acceleration this quarter in cloud revenue, or I guess Amy or Satya. You saw acceleration in cloud revenue year-over-year what’s – is this Office for the iPad, is this Azure, what’s driving the acceleration and how long do you think we can keep this going?

Mark, I will take it and if Satya wants to add, obviously, he should do that. In general, I wouldn’t point to one product area. It was across Office 365, Azure and even CRM online. I think some of the important dynamics that you could point to particularly in Office 365; I really think over the course of the year, we saw an acceleration in moving the product down the market into increasing what we would call the mid-market and even small business at a pace. That’s a particular place I would tie back to some of the things Satya mentioned in the answer to your first question.

Improvements to analytics, improvements to understanding the use scenarios, improving the product in real-time, understanding trial ease of use, ease of sign-up all of these things actually can afford us the ability to go to different categories, go to different geos into different segments. And in addition, I think what you will see more as we initially moved many of our customers to Office 365, it came on one workload. And I think what we’ve increasingly seen is our ability to add more workloads and sell the entirety of the suite through that process. I also mentioned in Azure, our increased ability to sell some of these higher value services. So while, I can speak broadly but all of them, I think I would generally think about the strength of being both completion of our product suite ability to enter new segments and ability to sell new workloads.

The only thing I would add is it’s the combination of our SaaS like Dynamics in Office 365, a public cloud offering in Azure. But also our private and hybrid cloud infrastructure which also benefits, because they run on our servers, cloud runs on our servers. So it’s that combination which makes us both unique and reinforcing. And the best example is what we are doing with Azure active directory, the fact that somebody gets on-boarded to Office 365 means that tenant information is in Azure AD that fact that the tenant information is in Azure AD is what makes EMS or our Enterprise Mobility Suite more attractive to a customer manager iOS, Android or Windows devices. That network effect is really now helping us a lot across all of our cloud efforts.

…

Keith Weiss – Morgan Stanley

Excellent, thank you for the question and a very nice quarter. First, I think to talk a little bit about the growth strategy of Nokia, you guys look to cut expenses pretty aggressively there, but this is – particularly smartphones is a very competitive marketplace, can you tell us a little bit about sort of the strategy to how you actually start to gain share with Lumia on a going forward basis? And may be give us an idea of what levels of share or what levels of kind unit volumes are you going to need to hit to get to that breakeven in FY16?

Let me start and Amy you can even add. So overall, we are very focused on I would say thinking about mobility share across the entire Windows family. I already talked about in my remarks about how mobility for us even goes beyond devices, but for this specific question I would even say that, we want to think about mobility not just one form factor of a mobile device because I think that’s where the ultimate prize is.

But that said, we are even year-over-year basis seen increased volume for Lumia, it’s coming at the low end in the entry smartphone market and we are pleased with it. It’s come in many markets we now have over 10% that’s the first market I would sort of say that we need to track country-by-country. And the key places where we are going to differentiate is looking at productivity scenarios or the digital work and life scenario that we can light up on our phone in unique ways.

When I can take my Office Lens App use the camera on the phone take a picture of anything and have it automatically OCR recognized and into OneNote in searchable fashion that’s the unique scenario. What we have done with Surface and PPI shows us the way that there is a lot more we can do with phones by broadly thinking about productivity. So this is not about just a Word or Excel on your phone, it is about thinking about Cortana and Office Lens and those kinds of scenarios in compelling ways. And that’s what at the end of the day is going to drive our differentiation and higher end Lumia phones.

And Keith to answer your specific question, regarding FY16, I think we’ve made the difficult choices to get the cost base to a place where we can deliver, on the exact scenario Satya as outlined, and we do assume that we continue to grow our units through the year and into 2016 in order to get to breakeven.

…

Rick Sherlund – Nomura

Thanks. I’m wondering if you could talk about the Office for a moment. I’m curious whether you think we’ve seen the worst for Office here with the consumer fall off. In Office 365 growth in margins expanding their – just sort of if you can look through the dynamics and give us a sense, do you think you are actually turned the corner there and we may be seeing the worse in terms of Office growth and margins?

Rick, let me just start qualitatively in terms of how I view Office, the category and how it relates to productivity broadly and then I’ll have Amy even specifically talk about margins and what we are seeing in terms of I’m assuming Office renewals is that probably the question. First of all, I believe the category that Office is in, which is productivity broadly for people, the group as well as organization is something that we are investing significantly and seeing significant growth in.

On one end you have new things that we are doing like Cortana. This is for individuals on new form factors like the phones where it’s not about anything that application, but an intelligent agent that knows everything about my calendar, everything about my life and tries to help me with my everyday task.

On the other end, it’s something like Delve which is a completely new tool that’s taking some – what is enterprise search and making it more like the Facebook news feed where it has a graph of all my artifacts, all my people, all my group and uses that graph to give me relevant information and discover. Same thing with Power Q&A and Power BI, it’s a part of Office 365. So we have a pretty expansive view of how we look at Office and what it can do. So that’s the growth strategy and now specifically on Office renewals.

And I would say in general, let me make two comments. In terms of Office on the consumer side between what we sold on prem as well as the Home and Personal we feel quite good with attach continuing to grow and increasing the value prop. So I think that’s to address the consumer portion.

On the commercial portion, we actually saw Office grow as you said this quarter; I think the broader definition that Satya spoke to the Office value prop and we continued to see Office renewed in our enterprise agreement. So in general, I think I feel like we’re in a growth phase for that franchise.

…

Walter Pritchard – Citigroup

Hi, thanks. Satya, I wanted to ask you about two statements that you made, one around responsibly making the market for Windows Phone, just kind of following on Keith’s question here. And that’s a – it’s a really competitive market it feels like ultimately you need to be a very, very meaningful share player in that market to have value for developer to leverage the universal apps that you’re talking about in terms of presentations you’ve given and build in and so forth.

And I’m trying to understand how you can do both of those things once and in terms of responsibly making the market for Windows Phone, it feels difficult given your nearest competitors there are doing things that you might argue or irresponsible in terms of making their market given that they monetize it in different ways?

Yes. One of beauties of universal Windows app is, it aggregates for the first time for us all of our Windows volume. The fact that even what is an app that runs with a mouse and keyboard on the desktop can be in the store and you can have the same app run in the touch-first on a mobile-first way gives developers the entire volume of Windows which is 300 plus million units as opposed to just our 4% share of mobile in the U.S. or 10% in some country.

So that’s really the reason why we are actively making sure that universal Windows apps is available and developers are taking advantage of it, we have great tooling. Because that’s the way we are going to be able to create the broadest opportunity to your very point about developers getting an ROI for building to Windows. For that’s how I think we will do it in a responsible way.

Heather Bellini – Goldman Sachs

Great. Thank you so much for your time. I wanted to ask a question about – Satya your comments about combining the next version of Windows and to one for all devices and just wondering if you look out, I mean you’ve got kind of different SKU segmentations right now, you’ve got enterprise, you’ve got consumer less than 9 inches for free, the offering that you mentioned earlier that you recently announced. How do we think about when you come out with this one version for all devices, how do you see this changing kind of the go-to-market and also kind of a traditional SKU segmentation and pricing that we’ve seen in the past?

Yes. My statement Heather was more to do with just even the engineering approach. The reality is that we actually did not have one Windows; we had multiple Windows operating systems inside of Microsoft. We had one for phone, one for tablets and PCs, one for Xbox, one for even embedded. So we had many, many of these efforts. So now we have one team with the layered architecture that enables us to in fact one for developers bring that collective opportunity with one store, one commerce system, one discoverability mechanism. It also allows us to scale the UI across all screen sizes; it allows us to create this notion of universal Windows apps and being coherent there.

So that’s what more I was referencing and our SKU strategy will remain by segment, we will have multiple SKUs for enterprises, we will have for OEM, we will have for end-users. And so we will – be disclosing and talking about our SKUs as we get further along, but this my statement was more to do with how we are bringing teams together to approach Windows as one ecosystem very differently than we ourselves have done in the past.

Ed Maguire – CLSA

Hi, good afternoon. Satya you made some comments about harmonizing some of the different products across consumer and enterprise and I was curious what your approach is to viewing your different hardware offerings both in phone and with Surface, how you’re go-to-market may change around that and also since you decided to make the operating system for sub 9-inch devices free, how you see the value proposition and your ability to monetize that user base evolving over time?

Yes. The statement I made about bringing together our productivity applications across work and life is to really reflect the notion of dual use because when I think about productivity it doesn’t separate out what I use as a tool for communication with my family and what I use to collaborate at work. So that’s why having this one team that thinks about outlook.com as well as Exchange helps us think about those dual use. Same thing with files and OneDrive and OneDrive for business because we want to have the software have the smart about separating out the state carrying about IT control and data protection while me as an end user get to have the experiences that I want. That’s how we are thinking about harmonizing those digital life and work experiences.

On the hardware side, we would continue to build hardware that fits with these experiences if I understand your question right, which is how will be differentiate our first party hardware, we will build first party hardware that’s creating category, a good example is what we have done with Surface Pro 3. And in other places where we have really changed the Windows business model to encourage a plethora of OEMs to build great hardware and we are seeing that in fact in this holiday season, I think you will see a lot of value notebooks, you will see clamshells. So we will have the full price range of our hardware offering enabled by this new windows business model.

And I think the last part was how will we monetize? Of course, we will again have a combination, we will have our OEM monetization and some of these new business models are about monetizing on the backend with Bing integration as well as our services attached and that’s the reason fundamentally why we have these zero-priced Windows SKUs today.

…

Microsoft is transitioning to a world with more usage and more software driven value add (rather than the old device driven world) in mobility and the cloud, the latter also helping to grow the server business well above its peers

Quarterly Highlights (from Earnings Call Slides):

Cloud momentum helps drive Q3 results

- Outstanding momentum and results in our cloud services; total Commercial Cloud revenue more than doubled again this quarter

- Office 365 Home currently has 4.4 million subscribers, adding nearly one million new users this quarter

- Windows remained the platform of choice for business users, with double-digit increases in both Windows OEM Pro and Windows Volume Licensing revenue

- With focus on spend prioritization, we grew our operating expenses only 2%, contributing to solid earnings growth

Microsoft CEO offer bright future [‘Saxo TV – TradingFloor.com’ YouTube channel, April 25, 2014]

Willing to change, that was the message new Microsoft CEO Satya Nadella was pushing as the firm released third quarter earnings.

Microsoft beat Wall Street analysts’ expectations, driving the company’s stock price up 3 percent on Thursday after earnings were released. Growth came from the company’s surface tablet sales and commercial business sector, according to Norman Young, Senior Equity Analyst at Morningstar. Results were also aided by a less severe decline in the PC industry.

Young believes the company has already demonstrated continued growth for the fourth quarter and remains optimistic about the company’s new direction.

Nadella is shifting the traditionally PC focused company towards more mobile and cloud based technology. On the quarterly call with Wall Street he said, “What you can expect of Microsoft is courage in the face of reality; we will approach our future with a challenger mindset; we will be bold in our innovation.” Analysts are excited about the company’s future trajectory as he continues to push Microsoft’s business into the mobile and cloud computing world.

The company’s stock has increased 8 percent since Nadella assumed the role of CEO in February.

From Earnings Release FY14 Q3 [April 24, 2014]

“This quarter’s results demonstrate the strength of our business, as well as the opportunities we see in a mobile-first, cloud-first world. We are making good progress in our consumer services like Bing and Office 365 Home, and our commercial customers continue to embrace our cloud solutions. Both position us well for long-term growth,” said Satya Nadella, chief executive officer at Microsoft. “We are focused on executing rapidly and delivering bold, innovative products that people love to use.”

…

Devices and Consumer revenue grew 12% to $8.30 billion.

- Windows OEM revenue grew 4%, driven by strong 19% growth in Windows OEM Pro revenue.

- Office 365 Home now has 4.4 million subscribers, adding nearly 1 million subscribers in just three months.

- Microsoft sold in 2.0 million Xbox console units, including 1.2 million Xbox One consoles.

- Surface revenue grew over 50% to approximately $500 million.

- Bing U.S. search share grew to 18.6% and search advertising revenue grew 38%.

Commercial revenue grew 7% to $12.23 billion.

- Office 365 revenue grew over 100%, and commercial seats nearly doubled, demonstrating strong enterprise momentum for Microsoft’s cloud productivity solutions.

- Azure revenue grew over 150%, and the company has announced more than 40 new features that make the Azure platform more attractive to cloud application developers.

- Windows volume licensing revenue grew 11%, as business customers continue to make Windows their platform of choice.

- Lync, SharePoint, and Exchange, our productivity server offerings, collectively grew double-digits.

From Microsoft’s CEO Discusses F3Q 2014 Results – Earnings Call Transcript [Seeking Alpha, April 25, 2014]

From the prepared comments: “This quarter we continued our rapid cadence of innovation and announced a range of new services and features in three key areas – data, cloud, and mobility. SQL Server 2014 helps improve overall performance, and with Power BI, provides an end-to-end solution from data to analytics. Microsoft Azure preview portal provides a fully integrated cloud experience. The Enterprise Mobility Suite provides IT with a comprehensive cloud solution to support bring-your-own-device scenarios. These offerings help businesses convert big data into ambient intelligence, developers more efficiently build and run cloud solutions, and IT manage enterprise mobility with ease.”

Satya Nadella – Chief Executive Officer:

As I have told our employees, our industry does not respect tradition, it only respects innovation. This applies to us and everyone else. When I think about our industry over the next 5, 10 years, I see a world where computing is more ubiquitous and all experiences are powered by ambient intelligence. Silicon, hardware systems and software will co-evolve together and give birth to a variety of new form factors. Nearly everything we do will become more digitized, our interactions with other people, with machines and between machines. The ability to reason over and draw insights from everything that’s been digitized will improve the fidelity of our daily experiences and interactions. This is the mobile-first and cloud-first world. It’s a rich canvas for innovation and a great growth opportunity for Microsoft across all our customer segments.

To thrive we will continue to zero in on the things customers really value and Microsoft can uniquely deliver. We want to build products that people love to use. And as a result, you will see us increasingly focus on usage as the leading indicator of long-term success.

…

- advancing Office, Windows and our data platform

- continue to invest in our cloud capabilities including Office 365 and Azure in the fast growing SaaS and cloud platform markets

- ensuring that our cloud services are available across all device platforms that people use

- delivering a cloud for everyone on every device

- have bold plans to move Windows forward:

– investing and innovating in every dimension from form-factor to software experiences to price

– Windows platform is unique in how it brings together consistent end user experiences across small to large screens, broadest platform opportunity for developers and control and assurance for IT

– enhance our device capabilities with the addition of Nokia’s talented people and their depth in mobile technologies

- our vision is about going boldly into this mobile-first, cloud-first world

…

So this mobile-first cloud-first thing is a pretty deep thing for us. When we say mobile-first, in fact what we mean by that is mobility first. We think about users and their experiences spanning a variety of devices. So it’s not about any one form factor that may have some share position today, but as we look to the future, what are the set of experiences across devices, some ours and some not ours that we can power through experiences that we can create uniquely. …

… When you think about mobility first, that means you need to have really deep understanding of all the mobile scenarios for everything from how communications happen, how meetings occur. And those require us to build new capability. We will do some of this organically, some of it inorganically.

A good example of this is what we have done with Nokia. So we will – obviously we are looking forward to that team joining us building on the capability and then execution, even in the last three weeks or so we have announced a bunch of things where we talked about this one cloud for everyone and every device. We talked about how our data platform is going to enable this data culture, which is in fact fundamentally changing how Microsoft itself works.

We also talked about what it means to think about Windows, especially with the launch of this universal Windows application model. How different it is now to think about Windows as one family, which was not true before, but now we have a very different way to think about it.

…

[Re: Microsoft transition to more of a subscription business]

The way I look at it … we are well on our way to making that transition, in terms of moving from pure licenses to long-term contracts as well as a subscription business model. So when you talked about Platform-as-a-Service if you look at our commercial cloud it’s made up of the platform itself which is Azure. We also have a SaaS business in Office 365.

Now, one of the things that we want to make sure we look at is each of the constituent parts because the margin profile on each one of these things is going to be different. The infrastructure elements right now in particular are going to have different economics versus what some of the per-user applications in a SaaS mode have. It’s the blending of all of that that matters and the growth of that matters to us the most in this time where I think there are just a couple of us really playing in this market. I mean this is gold rush time in some sense of being able to capitalize on the opportunity.

And when it comes to that we have some of the best, the broadest SaaS solution and the broadest platform solution and that combination of those assets doesn’t come often. So what we are very focused on is how do we make sure we get our customer aggressively into this, having them use our service, be successful with it. And then there will be a blended set of margins across even just our cloud. And what matters to me in the long run is the magnitude of profit we generate given a lot of categories are going to be merged as this transition happens. And we have to be able to actively participate in it and drive profit growth.

…

From the prepared comments: “Office Commercial revenue was up 6%, driven by Office 365 as customers transitioned to our cloud productivity services. Office 365 revenue grew over 100%, and seats nearly doubled as well. Our productivity server offerings continue to perform well, with Lync, SharePoint, and Exchange, collectively growing double-digits.”

… to me the Office 365 growth is in fact driving our enterprise infrastructure growth which is driving Azure growth and that cycle to me is most exciting. So that’s one of the reasons why I want to have to keep indexing on the usage of all of this and the growth numbers you see is a reflection of that.

[Background from him in the call:]

Office 365 I am really, really excited about what’s happening there, which is to me this is the core engine that’s driving a lot of our cloud adoption and you see it in the numbers and Amy will talk more about the numbers. But one of the fundamental things it’s also doing is it’s actually a SaaS application and it’s also an architecture for enterprises. And one of the most salient things we announced when we talked about the cloud for everyone and every device and we talked about Office 365 having now iPad apps, we also launched something called the Enterprise Mobility Suite which is perhaps one of the most strategic things during that day that we announced which was that we now have a consistent and deep platform for identity management which by the way gets bootstrapped every time Office 365 users sign up, device management and data protection, which is really what every enterprise customer needs in a mobile-first world, in a world where you have SaaS application adoption and you have BYOD or bring your own devices happening.…

[Re #1: about the new world in terms of more usage and more software driven rather than device driven, and the reengagement with the developer community in that world]

Developers are very, very important to us. If you’re in the platform business, which we are on both the device side and the cloud side, developers and their ability to create new value props and new applications on them is sort of likes itself. I would say a couple of things.

…

… on the cloud side, in fact one of the most strategic APIs is the Office API. If you think about building an application for iOS, if you want single sign-on for any enterprise application, it’s the Azure AD single sign-on. That’s one of the things that we showed at Build, which is how to take advantage of list data in SharePoint, contact information in Exchange, Azure Active Directory information for log-on. And those are the APIs that are very, very powerful APIs and unique to us. And they expand the opportunity for developers to reach into the enterprises. And then of course Azure is a full platform, which is very attractive to developers. So that gives you a flavor for how important developers are and what your opportunities are.…

From the prepared comments: “Devices and Consumer Other revenue grew 18% to $1.95 billion, driven by search advertising and our Office 365 Home service. Search revenue grew 38%, offset by display [advertising] revenue which declined 24% this quarter. Gross margin grew 26% to $541 million. The combined revenue from Office 365 Home and Office Consumer, reported in the Devices & Consumer Licensing segment, grew 28%. … Office Consumer revenue increased 15% due to higher attach and strong sales in Japan, where we saw customers accelerate some purchases ahead of a national sales tax increase. Excluding that estimated impact, Office still outpaced the underlying consumer PC market.”

[Re: how you could potentially make what has been traditionally a unit model with Windows OEM revenue into something potentially more recurring in nature?]

… the thing I would add is this transition from one time let’s say licenses or device purchases to what is a recurring stream. You see that in a variety of different ways. You have back end subscriptions, in our case, there will be Office 365, there is advertising, there is the app store itself. So these are all things that attach to a device. And so we are definitely going to look to make sure that the value prop that we put together is going to be holistic in its nature and the monetization itself will be holistic and it will increase with the usage of the device across these services. And so that’s the approach we will take.

From the prepared comments: “Zero dollar licensing for sub 9-inch devices helps grow share and creates new opportunities to deliver our services, with minimal short term revenue impact”

[Re: the recent decision to offer Windows for free for sub 9-inch devices and its impact on Microsoft’s share in that arena, about Windows pricing in general, the kind of play in different market segmentations, and how Windows pricing is evolving]

Overall, the way I want us to look at Windows going forward is what does it mean to have the broadest device family and ecosystem? Because at the end of the day it’s about the users and developer opportunity we create for the entirety of the family. That’s going to define the health of the ecosystem. So, to me, it matters that we approach the various segments that we now participate with Windows, because that’s what has happened. Fundamentally, we participated in the PC market. Now we are in a market that’s much bigger than the PC market. We continue to have healthy share, healthy pricing and in fact growth as we mentioned in the enterprise adoption of Windows.

And that’s where we plan to in fact add more value, more management, more security, especially as things are changing in those segments. Given BYOD and software security issues, we want to be able to reinforce that core value, but then when it comes to new opportunities from wearables to internet of things, we want to be able to participate in all of this with our Windows offering, with our tools around it. And we want to be able to price by category. And that’s effectively what we did. We looked at what it makes – made sense for us to do on tablets and phones below 9 inches and we felt that the price there needed to be changed. We have monetization vehicles on the back end for those. And that’s how we are going to approach each one of these opportunities, because in a world of ubiquitous computing, we want Windows to be ubiquitous. That doesn’t mean it’s one price, one business model for all of that. And it’s actually a market expansion opportunity and that’s the way we are going to go execute on it.

From the prepared comments: “Our universal app development platform is a big step towards enabling developers to engage users across PCs, tablets, and phones with a common set of APIs”

[Re #2: about the new world in terms of more usage and more software driven rather than device driven, and the reengagement with the developer community in that world]

Developers are very, very important to us. If you’re in the platform business, which we are on both the device side and the cloud side, developers and their ability to create new value props and new applications on them is sort of likes itself. I would say a couple of things.

One is the announcements we made at Build on the device side, which is really breakthrough work for us: we’re the only device platform today that has this notion of building universal apps with fantastic tooling around them. So that means you can target multiple of our devices and have common code across all of them. And this notion of having a Windows universal application help developers leverage their core asset, which is their code asset, across this expanded opportunity is huge. There was this one user experience change that Terry Myerson talked about at Build, which expands the ability for anyone who puts up an application in the Windows Store to be now discovered across even the billion plus PC installed base. And so that’s I think a fantastic opportunity for developers and we are doing everything to make that opportunity clear and recruit developers to do more with Windows. And in that context, we will also support cross platforms. So one of the things that we have done is the relationship with Unity. We have tooling that allows you to have this core library that’s portable. You can bring your code asset. In fact, we are the only client platform that has the abstractions available for the different languages and so on.

From the prepared comments: “Server product revenue grew 10%, driven by demand for our data platform, infrastructure and management offerings, and Azure.”

- “SQL Server revenue grew more than 15%, and continued to outpace the data platform market; we continue to gain share in mission critical workloads”

- “Windows Server Premium and System Center revenue showed continued strength from increased virtualization share and demand for hybrid infrastructure”

[Re: about the factors that have enabled Microsoft to continue growing server business well above its peers, and whether that kind of 10% ish growth is sustainable over fiscal 2015]

It’s a pretty exciting change that’s happening. Obviously that part of the business has been performing very well for a while now, but quite frankly it’s fundamentally changing. One of the questions I often get asked is, hey, how did Windows Server and the hypervisor underneath it become so good so soon? You’ve been at it for a long time, but something seems to have fundamentally changed. I mean, we’ve grown a lot of share recently, the product is more capable than it ever was, the rate of change is different, and for one reason alone, which is we use it to run Azure. So the fact that we use our servers to run our cloud makes our servers more competitive for other people to build their own cloud.

So it’s the same trend that’s accelerating us on both sides. The other thing that’s happening is when we sell our server products they for the most part are just not isolated anymore. They come with automatic cloud tiering. SQL Server is a great example. We just launched a new version of SQL which is by far the best release of SQL in terms of its features like its exploitation of in-memory. It’s the first product in the database world that has in-memory for all the three workloads of databases, OLTP, data warehousing and BI. But more importantly it automatically gives you high availability which means a lot to every CIO and every enterprise deployment by actually tiering to the cloud.

From the prepared comments: “Commercial Other revenue grew 31%, to $1.90 billion, driven by Commercial Cloud revenue which exceeded our guidance as customers transitioned to our cloud solutions faster than expected; Gross margin increased 80% as we realized margin expansion through engineering efficiencies and continued scale benefits; Enterprise services revenue grew 8%”

So those kinds of feature innovation, which is pretty boundary-less for us, is breakthrough work. It’s not something that somebody who has been a traditional competitor of ours can do if you’re not even a first class producer of a public cloud service. So I think that we’re in a very unique place. Our ability to deliver this hybrid value proposition and be in a position, where we not only run a cloud service at scale, but we also provide the infrastructure underneath it as the server products to others. That’s what’s driving the growth. The shape of that growth and so on will change over time, but I feel very, very bullish about our ability to continue this innovation.

Microsoft cloud server designs for the Open Compute Project to offer an alternative to the Facebook designs based on a broader set of workloads

… while neither Amazon nor Google publicize their server designs yet

Designing Cloud Infrastructure for 1m+ Server Scale [“cloud scale”] – Kushagra Vaid (General Manager, Cloud Server Engineering, Microsoft) [Open Compute Project YouTube channel, Jan 29, 2014]

“The chassis can take 24 servers”

Microsoft Contributes Cloud Server Specification to Open Compute Project [Microsoft Data Centers Blog, Jan 27, 2014]