AMD’s dense server strategy of mixing next-gen x86 Opterons with 64-bit ARM Cortex-A57 based Opterons on the SeaMicro Freedom™ fabric to disrupt the 2014 datacenter market using open source software (so far)

… so far, as Microsoft was in a “shut-up and ship” mode of operation during 2013 and could deliver its revolutionary Cloud OS with its even more disruptive Big Data solution for x86 only (that is likely to change as 64-bit ARM will be delivered with servers in H2 CY14).

Update: Disruptive Technologies for the Datacenter – Andrew Feldman, GM and CVP, AMD [Open Compute Project, Jan 28, 2014]

Note from the press release given below that: “The AMD Opteron A-Series development kit is packaged in a Micro-ATX form factor”. Take note of the topmost message: “Optimized for dense compute – High-density, power-sensitive scale-out workloads: web hosting, data analytics, caching, storage”.

AMD to Accelerate the ARM Server Ecosystem with the First ARM-based CPU and Development Platform from a Server Processor Vendor [press release, Jan 28, 2014]

AMD also announced the imminent sampling of the ARM-based processor, named the AMD Opteron™ A1100 Series, and a development platform, which includes an evaluation board and a comprehensive software suite.

This should be the evaluation board for the development platform with imminent sampling.

In addition, AMD announced that it would be contributing to the Open Compute Project a new micro-server design using the AMD Opteron A-Series, as part of the common slot architecture specification for motherboards dubbed “Group Hug.”

| From OCP Summit IV: Breaking Up the Monolith [blog of the Open Compute Project, Jan 16, 2013] … “Group Hug” board: Facebook is contributing a new common slot architecture specification for motherboards. This specification — which we’ve nicknamed “Group Hug” — can be used to produce boards that are completely vendor-neutral and will last through multiple processor generations. The specification uses a simple PCIe x8 connector to link the SOCs to the board. … How does AMD support the Open Compute common slot architecture? [AMD YouTube channel, Oct 3, 2013] Learn more about AMD Open Compute: http://bit.ly/AMD_OpenCompute Dense computing is the latest trend in datacenter technology, and the Open Compute Project is driving standards codenamed Common Slot. In this video, AMD explains Common Slot and how the AMD APU and ARM offerings will power next generation data centers.

See also: Facebook Saved Over A Billion Dollars By Building Open Sourced Servers [TechCrunch, Jan 28, 2014] |

The AMD Opteron A-Series processor, codenamed “Seattle,” will sample this quarter along with a development platform that will make software design on the industry’s premier ARM–based server CPU quick and easy. AMD is collaborating with industry leaders to enable a robust 64-bit software ecosystem for ARM-based designs from compilers and simulators to hypervisors, operating systems and application software, in order to address key workloads in Web-tier and storage data center environments. The AMD Opteron A-Series development platform will be supported by a broad set of tools and software including a standard UEFI boot and Linux environment based on the Fedora Project, a Red Hat-sponsored, community-driven Linux distribution.

…AMD continues to drive the evolution of the open-source data center from vision to reality and bring choice among processor architectures. It is contributing the new AMD Open CS 1.0 Common Slot design based on the AMD Opteron A-Series processor compliant with the new Common Slot specification, also announced today, to the Open Compute Project.

…

AMD announces plans to sample 64-bit ARM Opteron A “Seattle” processors [AMD Blogs > AMD Business, Jan 28, 2014]

AMD’s rich history in server-class silicon includes a number of notable firsts including the first 64-bit x86 architecture and true multi-core x86 processors. AMD adds to that history by announcing that its revolutionary AMD Opteron™ A-series 64-bit ARM processors, codenamed “Seattle,” will be sampling this quarter.

AMD Opteron A-Series processors combine AMD’s expertise in delivering server-class silicon with ARM’s trademark low-power architecture and contributing to the Open Source software ecosystem that is rapidly growing around the ARM 64-bit architecture. AMD Opteron A-Series processors make use of ARM’s 64-bit ARMv8 architecture to provide true server-class features in a power efficient solution.

AMD plans for the AMD Opteron™ A1100 processors to be available in the second half of 2014 with four or eight ARM Cortex A57 cores, up to 4MB of shared Level 2 cache and 8MB of shared Level 3 cache. The AMD Opteron A-Series processor supports up to 128GB of DDR3 or DDR4 ECC memory as unbuffered DIMMs, registered DIMMs or SODIMMs.

The ARMv8 architecture is the first from ARM to have 64-bit support, something that AMD brought to the x86 market in 2003 with the AMD Opteron processor. Not only can the ARMv8-based Cortex A-57 architecture address large pools of memory, it has been designed from the ground up to provide the optimal balance of performance and power efficiency to address the broad spectrum of scale-out data center workloads.

With more than a decade of experience in designing server-class solutions silicon, AMD took the ARM Cortex A57 core, added a server-class memory controller, and included features resulting in a processor that meets the demands of scale-out workloads. A requirement of scale-out workloads is high performance connectivity, and the AMD Opteron A1100 processor has extensive integrated I/O, including eight PCI Express Gen 3 lanes, two 10 Gb/s Ethernet and eight SATA 3 ports.

Scale-out workloads are becoming critical building blocks in today’s data centers. These workloads scale over hundreds or thousands of servers, making power efficient performance critical in keeping total cost of ownership (TCO) low. The AMD Opteron A-Series meets the demand of these workloads through intelligent silicon design and by supporting a number of operating system and software projects.

As part of delivering a server-class solution, AMD has invested in the software ecosystem that will support AMD Opteron A-Series processors. AMD is a gold member of the Linux Foundation, the organisation that oversees the development of the Linux kernel, and is a member of Linaro, a significant contributor to the Linux kernel. Alongside collaboration with the Linux Foundation and Linaro, AMD itself is listed as a top 20 contributor to the Linux kernel. A number of operating system vendors have stated they will support the 64-bit ARM ecosystem, including Canonical, Red Hat and SUSE, while virtualization will be enabled through KVM and Xen.

Operating system support is supplemented with programming language support, with Oracle and the community-driven OpenJDK porting versions of Java onto the 64-bit ARM architecture. Other popular languages that will run on AMD Opteron A-Series processors include Perl, PHP, Python and Ruby. The extremely popular GNU C compiler and the critical GNU C Library have already been ported to the 64-bit ARM architecture.

Through the combination of kernel support and development tools such as libraries, compilers and debuggers, the foundation has been set for developers to port applications to a rapidly growing ecosystem.

As AMD Opteron A-Series processors are well suited to web hosting and big data workloads, AMD is a gold sponsor of the Apache Foundation, the organisation that manages the Hadoop and HTTP Server projects. Up and down the software stack, the ecosystem is ready for the data center revolution that will take place when AMD Opteron A-Series are deployed.

Soon, AMD’s partners will start to realise what a true server-class 64-bit ARM processor can do. By using AMD’s Opteron A-Series Development Kit, developers can contribute to the fast growing software ecosystem that already includes operating systems, compilers, hypervisors and applications. Combining AMD’s rich history in designing server-class solutions with ARM’s legendary low-power architecture, the Opteron A-Series ushers in the era of personalised performance.

Introducing the industry’s only 64-bit ARM-based server SoC from AMD [AMD YouTube channel, Jan 21, 2014]

It Begins: AMD Announces Its First ARM Based Server SoC, 64-bit/8-core Opteron A1100 [AnandTech, Jan 28, 2014]

… AMD will be making a reference board available to interested parties starting in March, with server and OEM announcements to come in Q4 of this year.

It’s still too early to talk about performance or TDPs, but AMD did indicate better overall performance than its Opteron X2150 (4-core 1.9GHz Jaguar) at a comparable TDP:

AMD alluded to substantial cost savings over competing Intel solutions with support for similar memory capacities. AMD tells me we should expect a total “solution” price somewhere around 1/10th that of a competing high-end Xeon box, but it isn’t offering specifics beyond that just yet. Given the Opteron X2150 performance/TDP comparison, I’m guessing we’re looking at a similar ~$100 price point for the SoC. There’s also no word on whether or not the SoC will leverage any of AMD’s graphics IP. …

End of Update

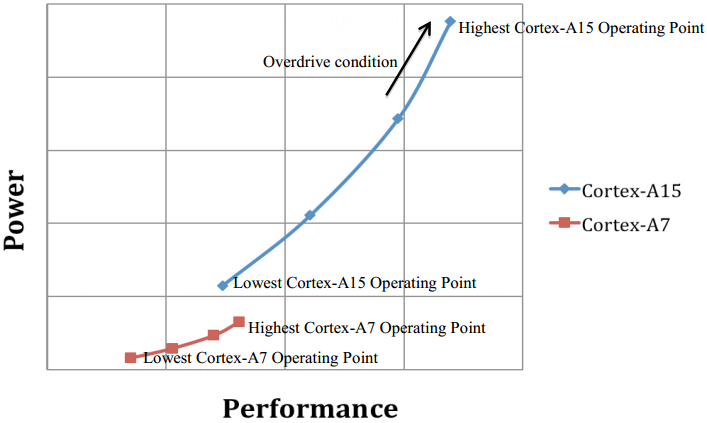

AMD is also in a quite unique market position now, as its only real competitor, Calxeda, shut down its operations on December 19, 2013 and went into restructuring. The reason was a lack of further funding from venture capitalists, attributed mainly to its initial 32-bit Cortex-A15 based approach and the unwillingness of customers and software partners to port their already 64-bit x86 software back to 32-bit.

With the only remaining competitor in the 64-bit ARM server SoC race so far*, Applied Micro’s X-Gene SoC, being built on a purpose-built core of its own (see also my Software defined server without Microsoft: HP Moonshot [‘Experiencing the Cloud’, April 10, Dec 6, 2013] post), i.e. with only an architecture license taken from ARM Holdings, the volume 64-bit ARM server SoC market starting in 2014 already belongs to AMD. I base that prediction on AppliedMicro’s X-Gene: 2013 Year in Review [Dec 20, 2013] post, which states that the first-generation X-Gene product is only now nearing volume production, and that a pilot X-Gene solution is planned only for early 2014 delivery by Dell.

* There is also Cavium, which likewise holds only an ARMv8 architecture license (obtained in August 2012), but the latest information on its product (as of Oct 30, 2013) was that: “In terms of the specific announcement of the product, we want to do it fairly close to silicon. We believe that this is a very differentiated product, and we would like to kind of keep it under the covers as long as we can. Obviously our customers have all the details of the products, and they’re working with them, but on a general basis for competitive reasons, we are kind of keeping this a little bit more quieter than we normally do.”

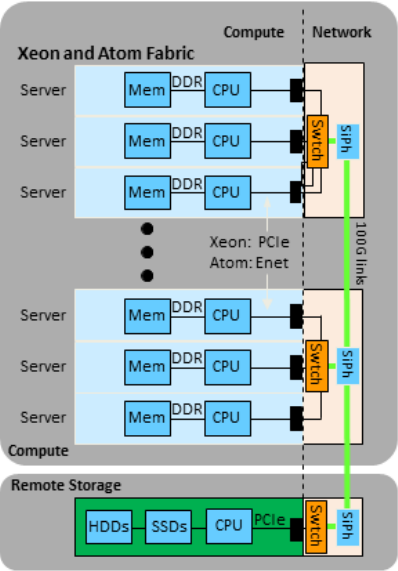

Meanwhile the 64-bit x86 based SeaMicro solution has been on the market since July 30, 2010, after 3 years in development. At the time of SeaMicro’s acquisition by AMD (Feb 29, 2012) it already represented a well thought-out and well engineered solution, as one can easily grasp from the information included below:

1. IOVT: I/O-Virtualization Technology

2. TIO: Turn It Off

3. Freedom™ Supercomputer Fabric: 3D torus network fabric

– 8 x 8 x 8 Fabric nodes

– Diameter (max hop) 4 + 4 + 4 = 12

– Theoretical cross-section bandwidth = 2 (periodic) x 8 x 8 (section) x 2 (bidirectional) x 2.0 Gb/s per link = 512 Gb/s

– Compute, storage, mgmt cards are plugged into the network fabric

– Support for hot plugged compute cards

The first three—IOVT, TIO, and the Freedom™ Supercomputer Fabric—live in SeaMicro’s Freedom™ ASIC. Freedom™ ASICs are paired with each CPU and with DRAM, forming the foundational building block of a SeaMicro system.
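The fabric figures quoted above (a maximum of 12 hops and 512 Gb/s of theoretical cross-section bandwidth) follow from ordinary 3D torus arithmetic. The short Python sketch below reproduces them. The function names are hypothetical and chosen only for illustration; the only inputs are the 8 x 8 x 8 dimensions and the 2.0 Gb/s per-link rate given in the list, and the formulas are the standard ones for a torus with wraparound links, not anything taken from SeaMicro documentation.

```python
def ring_max_hops(k: int) -> int:
    """Longest shortest path between two nodes on a ring of k nodes.

    With wraparound links a packet can travel in either direction,
    so it never needs more than half the ring.
    """
    return k // 2


def torus_diameter(dims) -> int:
    """Maximum hop count in a torus: the per-dimension maxima add up."""
    return sum(ring_max_hops(k) for k in dims)


def torus_cross_section_gbps(dims, link_gbps: float, cut_axis: int = 0) -> float:
    """Theoretical bandwidth across a plane that halves the torus along cut_axis."""
    section = 1
    for axis, k in enumerate(dims):
        if axis != cut_axis:
            section *= k          # nodes in the cutting plane (8 x 8 here)
    periodic = 2                  # wraparound: the cut crosses each ring twice
    bidirectional = 2             # each link carries traffic in both directions
    return periodic * section * bidirectional * link_gbps


DIMS = (8, 8, 8)      # fabric nodes per dimension, from the list above
LINK_GBPS = 2.0       # per-link rate, from the list above

print(torus_diameter(DIMS))                        # 4 + 4 + 4 hops
print(torus_cross_section_gbps(DIMS, LINK_GBPS))   # Gb/s across the cut
```

Changing `DIMS` or `LINK_GBPS` shows how the hop count and the cross-section bandwidth would scale for other fabric sizes, under the same assumptions.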

4. DCAT: Dynamic Computation-Allocation Technology™

– CPU management and load balancing

– Dynamic workload allocation to specific CPUs on the basis of power-usage metrics

– Users can create pools of compute for a given application

– Compute resources can be dynamically added to the pool based on predefined utilization thresholds

The DCAT technology resides in the SeaMicro system software and custom-designed FPGAs/NPUs, which control and direct the I/O traffic.
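To make the DCAT bullet points more concrete, here is a minimal Python sketch of threshold-based pool growth and power-aware CPU selection. Everything in it is an assumption made for illustration: the `Cpu` and `ComputePool` classes, the field names, the use of average utilization as the trigger, and the "lowest watts wins" rule are hypothetical and are not taken from SeaMicro's system software, which the sources above do not describe at this level of detail.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cpu:
    name: str
    utilization: float   # fraction of capacity in use, 0.0 to 1.0 (assumed metric)
    watts: float         # current power draw (assumed metric)


@dataclass
class ComputePool:
    app: str
    grow_threshold: float              # predefined utilization threshold
    members: List[Cpu] = field(default_factory=list)

    def average_utilization(self) -> float:
        if not self.members:
            return 0.0
        return sum(c.utilization for c in self.members) / len(self.members)

    def grow_if_needed(self, idle: List[Cpu]) -> bool:
        """Move one idle CPU into the pool when the threshold is exceeded."""
        if idle and self.average_utilization() > self.grow_threshold:
            self.members.append(idle.pop(0))
            return True
        return False

    def pick_cpu(self) -> Cpu:
        """Choose the pool member with the lowest power draw for new work."""
        return min(self.members, key=lambda c: c.watts)


# Hypothetical example: a two-CPU pool for a web application, one idle CPU.
pool = ComputePool("web", grow_threshold=0.75,
                   members=[Cpu("c0", 0.9, 7.0), Cpu("c1", 0.8, 6.0)])
idle = [Cpu("c2", 0.0, 2.0)]

grew = pool.grow_if_needed(idle)   # average utilization is above the threshold
target = pool.pick_cpu()           # the newly added, lightly loaded CPU
print(grew, target.name, len(pool.members))
```

A real implementation would presumably also shrink pools and smooth the utilization signal over time; the sketch only shows the two decisions named in the list.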

More information:

– SeaMicro SM10000-64 Server [SeaMicro presentation at Hot Chips 23, Aug 19, 2011]: the slides are available in PDF format, and the presentation itself is the first one in the following recorded video (the first 20 minutes, followed by 7 minutes of quite valuable Q&A):

Session 7, Hot Chips 23 (2011), Friday, August 19, 2011:

– SeaMicro SM10000-64 Server: Building Data Center Servers Using “Cell Phone” Chips. Ashutosh Dhodapkar, Gary Lauterbach, Sean Lie, Dhiraj Mallick, Jim Bauman, Sundar Kanthadai, Toru Kuzuhara, Gene Shen, Min Xu, and Chris Zhang, SeaMicro

– Poulson: An 8-Core, 32nm, Next-Generation Intel Itanium Processor. Stephen Undy, Intel

– T4: A Highly Threaded Server-on-a-Chip with Native Support for Heterogeneous Computing. Robert Golla and Paul Jordan, Oracle

– SeaMicro Technology Overview [Anil Rao from SeaMicro, January 2012]

– System Overview for the SM10000 Family [Anil Rao from SeaMicro, January 2012]

Note that the above describes only the 1st generation: after the AMD acquisition (Feb 29, 2012) a second-generation solution came out with the SM15000 enclosure (Sept 10, 2012; more information in the details section later), and there will certainly be a 3rd-generation solution with the fabric integrated into each of the x86 and 64-bit ARM based SoCs coming in 2014.

The SeaMicro Freedom™ fabric, the “only production ready, production tested supercompute fabric” (as touted by Rory Read, CEO of AMD, more than a year ago), will now be integrated into the upcoming 64-bit ARM Cortex-A57 based “Seattle” chips from AMD, sampling in the first quarter of 2014. Consequently I would argue that even the high-end market will be captured by the company. Moreover, I think this will happen not only in the SoC realm but in the enclosure space as well (although that 3rd-generation type of enclosure is still to come), to the detriment of HP’s highly marketed Moonshot and CloudSystem initiatives.

Then here are two recent quotes from the top executive duo of AMD showing the importance of their upcoming solution as they view it themselves:

Rory Read – AMD’s President and CEO [Oct 17, 2013]:

In the server market, the industry is at the initial stages of a multiyear transition that will fundamentally change the competitive dynamic. Cloud providers are placing a growing importance on how they get better performance from their datacenters while also reducing the physical footprint and power consumption of their server solution.

Lisa Su – AMD’s Senior Vice President and General Manager, Global Business Units [Oct 17, 2013]:

We are fully top to bottom in 28 nanometer now across all of our products, and we are transitioning to both 20 nanometer and to FinFETs over the next couple of quarters in terms of designs. … [Regarding] the SeaMicro business, we are very pleased with the pipeline that we have there. Verizon was the first major datacenter win that we can talk about publicly. We have been working that relationship for the last two years. …

… We’re very excited about the server space. It’s a very good market. It’s a market where there is a lot of innovation and change. In terms of 64-bit ARM, you will see us sampling that product in the first quarter of 2014. That development is on schedule and we’re excited about that. All of the customer discussions have been very positive and then we will combine both the [?x86 and the?]64-bit ARM chip with our SeaMicro servers that will have full solution as well. You will see SeaMicro plus ARM in 2014.

So I think we view this combination of IP as really beneficial to accelerating the dense server market both on the chip side and then also on the solution side with the customer set.

AMD SeaMicro has been working extensively with key platform software vendors, especially in the open source space. The current state of that collaboration is reflected in the corresponding numbered sections that come after the detailed discussion below:

- Verizon (as its first big name cloud customer, actually not using OpenStack)

- OpenStack (inc. Rackspace, excl. Red Hat)

- Red Hat

- Ubuntu

- Big Data, Hadoop

So let’s take a detailed look at the major topic:

AMD in the Demo Theater [OpenStack Foundation YouTube channel, May 8, 2013]

Note that the OpenStack Quantum networking project was renamed Neutron after April, 2013. Details on the OpenStack effort will be provided later in the post.

Rory Read – AMD President and CEO [Oct 30, 2012]:

That SeaMicro Freedom™ fabric is ultimately very-very important. It is the only production ready, production tested supercompute fabric on the planet.

Lisa Su – AMD Senior Vice President and General Manager, Global Business Units [Oct 30, 2012]:

The biggest change in the datacenter is that there is no one size fits all. So we will offer ARM-based CPUs with our fabric. We will offer x86-based CPUs with our fabric. And we will also look at opportunities where we can merge the CPU technology together with graphics compute in an APU form-factor that will be very-very good for specific workloads in servers as well. So AMD will be the only company that’s able to offer the full range of compute horsepower with the right workloads in the datacenter.

AMD makes ARM Cortex-A57 64bit Server Processor [Charbax YouTube channel, Oct 30, 2012]

From AMD Changes Compute Landscape as the First to Bridge Both x86 and ARM Processors for the Data Center [press release, Oct 29, 2012]

This strategic partnership with ARM represents the next phase of AMD’s strategy to drive ambidextrous solutions in emerging mega data center solutions. In March, AMD announced the acquisition of SeaMicro, the leader in high-density, energy-efficient servers. With this announcement, AMD will integrate the AMD SeaMicro Freedom fabric across its leadership AMD Opteron x86- and ARM technology-based processors that will enable hundreds, or even thousands of processor clusters to be linked together to provide the most energy-efficient solutions.

AMD ARM Oct 29, 2012 Full length presentation [Manny Janny YouTube channel, Oct 30, 2012]

Rory Read – AMD President and CEO: [3:27] That SeaMicro Freedom™ fabric is ultimately very-very important in this announcement. It is the only production ready, production tested supercompute fabric on the planet. [3:41]

Lisa Su – Senior Vice President and General Manager, Global Business Units: [13:09] The biggest change in the datacenter is that there is no one size fits all. So we will offer ARM-based CPUs with our fabric. We will offer x86-based CPUs with our fabric. And we will also look at opportunities where we can merge the CPU technology together with graphics compute in an APU form-factor that will be very-very good for specific workloads in servers as well. So AMD will be the only company that’s able to offer the full range of compute horsepower with the right workloads in the datacenter [13:41]

From AMD to Acquire SeaMicro: Accelerates Disruptive Server Strategy [press release, Feb 29, 2012]

AMD (NYSE: AMD) today announced it has signed a definitive agreement to acquire SeaMicro, a pioneer in energy-efficient, high-bandwidth microservers, for approximately $334 million, of which approximately $281 million will be paid in cash. Through the acquisition of SeaMicro, AMD will be accelerating its strategy to deliver disruptive server technology to its OEM customers serving cloud-centric data centers. With SeaMicro’s fabric technology and system-level design capabilities, AMD will be uniquely positioned to offer industry-leading server building blocks tuned for the fastest-growing workloads such as dynamic web content, social networking, search and video. …

… “Cloud computing has brought a sea change to the data center–dramatically altering the economics of compute by changing the workload and optimal characteristics of a server,” said Andrew Feldman, SeaMicro CEO, who will become general manager of AMD’s newly created Data Center Server Solutions business. “SeaMicro was founded to dramatically reduce the power consumed by servers, while increasing compute density and bandwidth. By becoming a part of AMD, we will have access to new markets, resources, technology, and scale that will provide us with the opportunity to work tightly with our OEM partners as we fundamentally change the server market.”

ARM TechCon 2012 SoC Partner Panel: Introducing the ARM Cortex-A50 Series [ARMflix YouTube channel, recorded on Oct 30, published on Nov 13, 2012]

** Note that nearly 14 months later, on Dec 19, 2013, Calxeda ran out of the ~$100M in venture capital it had accumulated earlier. As the company was not able to secure further funding, it shut down its operations, dismissing most of its employees (except 12 workers serving existing customers), and went into “restructuring”, posting only this on its company website: “We will update you as we conclude our restructuring process”. This happened despite the pioneering role the company had played, especially with HP’s Moonshot and CloudSystem initiatives, and despite the relatively short-term promise of delivering its server cartridge for HP’s next-gen Moonshot enclosure, as was well reflected in my Software defined server without Microsoft: HP Moonshot [‘Experiencing the Cloud’, April 10, Dec 6, 2013] post. The major problem was that “it tried to get to market with 32-bit chip technology, at a time most x86 servers boast 64-bit technology … [and as] customers and software companies weren’t willing to port their software to run on 32-bit systems”, as the Wall Street Journal reported. I would also say that AMD’s “only production ready, production tested supercompute fabric on the planet” (see Rory Read’s statement already given above), together with its upcoming “Seattle” 64-bit ARM SoC being on track for delivery in H2 CY14, was another major reason for the lack of additional venture funding for Calxeda.

AMD’s 64-bit “Seattle” ARM processor brings best of breed hardware and software to the data center [AMD Business blog, Dec 12, 2013]

Going into 2014, the server market is set to face the biggest disruption since AMD launched the 64-bit x86 AMD Opteron™ processor – the first 64-bit x86 processor – in 2003. Processors based on ARM’s 64-bit ARMv8 architecture will start to appear next year, and just like the x86 AMD Opteron™ processors a decade ago, AMD’s ARM 64-bit processors will offer enterprises a viable option for efficiently handling vast amounts of data.

From: AMD Unveils Server Strategy and Roadmap [press release June 18, 2013]

Note also AMD Details Embedded Product Roadmap [press release, Sept 9, 2013], in which there is also a:

|

The AMD Opteron processor came at a time when x86 processors were seen by many as silicon that could only power personal computers, with specialized processors running on architectures such as SPARC™ and Power™ being the ones that were handling server workloads. Back in 2003, the AMD Opteron processor did more than just offer another option, it made the x86 architecture a viable contender in the server market – showing that processors based on x86 architectures could compete effectively against established architectures. Thanks in no small part to the AMD Opteron processor, today the majority of servers shipped run x86 processors.

In 2014, AMD will once again disrupt the datacenter as x86 processors will be joined by those that make use of ARM’s 64-bit architecture. Codenamed “Seattle,” AMD’s first ARM-based Opteron processor will use the ARMv8 architecture, offering low-power processing in the fast growing dense server space.

To appreciate what the first ARM-based AMD Opteron processor is designed to deliver to those wanting to deploy racks of servers, it is important to realize that the ARMv8 architecture offers a clean slate on which to build both hardware and software.

ARM’s ARMv8 architecture is much more than a doubling of word-length from previous generation ARMv7 architecture: it has been designed from the ground-up to provide higher performance while retaining the trademark power efficiencies that everyone has come to expect from the ARM architecture. AMD’s “Seattle” processors will have either four or eight cores, packing server-grade features such as support for up to 128 GB of ECC memory, and integrated 10Gb/sec of Ethernet connectivity with AMD’s revolutionary Freedom™ fabric, designed to cater for dense compute systems.

|

From: AMD Delivers a New Generation of AMD Opteron and Intel Xeon “Ivy Bridge” Processors in its New SeaMicro SM15000 Micro Server Chassis [press release, Sept 10, 2012]

AMD off-chip interconnect fabric IP designed to enable significantly lower TCO:

– Links hundreds to thousands of SoC modules

– Shares hundreds of TBs of storage and virtualizes I/O

– 160Gbps Ethernet Uplink

– Instruction Set:

Freedom™ ASIC 2.0 – Industry’s only Second Generation Fabric Technology

The Freedom™ ASIC is the building block of SeaMicro Fabric Compute Systems, enabling interconnection of energy efficient servers in a 3-dimensional Torus Fabric. The second generation Freedom ASIC includes high performance network interfaces, storage connectivity, and advanced server management, thereby eliminating the need for multiple sets of network adapters, HBAs, cables, and switches. This results in unmatched density, energy efficiency, and lowered TCO. Some of the key technologies in ASIC 2.0 include:

Unified Management – Easily Provision and Manage Servers, Network, and Storage Resources on Demand

The SeaMicro SM15000 implements a rich management system providing unified management of servers, network, and storage. Resources can be rapidly deployed, managed, and repurposed remotely, enabling lights-off data center operations. It offers a broad set of management API including an industry standard CLI, SNMP, IPMI, syslog, and XEN APIs, allowing customers to seamlessly integrate the SeaMicro SM15000 into existing data center management environments.

Redundancy and Availability – Engineered from the Ground Up to Eliminate Single Points of Failure

The SeaMicro SM15000 is designed for the most demanding environments, helping to ensure availability of compute, network, storage, and system management. At the heart of the system is the Freedom Fabric, interconnecting all resources in the system, with the ability to sustain multiple points of failure and allow live component servicing. All active components in the system can be configured redundant and are hot-swappable, including server cards, network uplink cards, storage controller cards, system management cards, disks, fan trays, and power supplies. Key resources can also be configured to be protected in the following ways:

– Compute: A shared spare server can be configured to act as a standby spare for multiple primary servers. In the event of failure, the primary server’s personality, including MAC address, assigned disks, and boot configuration can be migrated to the standby spare and brought back online – ensuring fast restoration of services from a remote location.

– Network: The highly available fabric ensures network connectivity is maintained between servers and storage in the event of path failure. For uplink high-availability, the system can be configured with multiple uplink modules and port channels providing redundant active/active interfaces.

– Storage: The highly available fabric ensures that servers can access fabric storage in the event of failures. The fabric storage system also provides an efficient, high utilization optional hardware RAID to protect data in case of disk failure.
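The standby-spare behaviour described for compute in the press release can be pictured as moving a server "personality" from a failed card to a spare one. The Python sketch below is only an illustration of that idea: the `Personality` and `ServerCard` classes, the `fail_over` function, and the example MAC address, disk names and boot target are all hypothetical, and none of them correspond to an actual SeaMicro API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Personality:
    """The identity the press release says can be migrated (assumed fields)."""
    mac_address: str
    assigned_disks: Tuple[str, ...]
    boot_config: str


@dataclass
class ServerCard:
    slot: int
    personality: Optional[Personality] = None
    healthy: bool = True


def fail_over(primary: ServerCard, spare: ServerCard) -> None:
    """Move the failed primary's personality onto an unused, healthy spare."""
    if primary.healthy:
        raise ValueError("primary has not failed; nothing to migrate")
    if not spare.healthy or spare.personality is not None:
        raise RuntimeError("spare is unavailable")
    spare.personality = primary.personality   # spare now answers as the primary
    primary.personality = None                # failed card no longer owns it


# Hypothetical example: the card in slot 3 fails, the spare in slot 63 takes over.
web = Personality("02:00:00:00:00:01", ("vdisk-0", "vdisk-1"), "pxe")
primary = ServerCard(slot=3, personality=web)
spare = ServerCard(slot=63)

primary.healthy = False
fail_over(primary, spare)
print(spare.personality.mac_address, primary.personality)
```

Because one spare can stand by for several primaries, the sketch refuses to reuse a spare that already carries a personality; how the real system arbitrates between simultaneous failures is not stated in the source.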

|

We realize that having an impressive set of hardware features in the first ARM-based Opteron processors is half of the story, and that is why we are hard at work on making sure the software ecosystem will support our cutting edge hardware. Work on software enablement has been happening throughout the stack – from the UEFI, to the operating system and onto application frameworks and developer tools such as compilers and debuggers. This ensures that the software will be ready for ARM-based servers.

|

AMD developing Linux on ARM at Linaro Connect 2013 [Charbax YouTube channel, March 11, 2013] [Recorded at Linaro Connect Asia 2013, March 4-8, 2013] Dr. Leendert van Doorn, Corporate Fellow at AMD, talks about what AMD does with Linaro to optimize Linux on ARM. He talks about the expectations that AMD has for results to come from Linaro in terms of achieving a better and more fully featured Linux world on ARM, especially for the ARM Cortex-A57 ARMv8 processor that AMD has announced for the server market.

|

AMD’s participation in software projects is well documented, being a gold member of the Linux Foundation, the organization that manages the development of the Linux kernel, and a group member of Linaro. AMD is a gold sponsor of the Apache Foundation, which oversees projects such as Hadoop, HTTP Server and Samba among many others, and the company’s engineers are contributors to the OpenJDK project. This is just a small selection of the work AMD is taking part in, and these projects in particular highlight how important AMD feels that open source software is to the data center, and in particular micro servers, that make use of ARM-based processors.

And running ARM-based processors doesn’t mean giving up on the flexibility of virtual machines, with KVM already ported to the ARMv8 architecture. Another popular hypervisor, Xen, is already available for 32-bit ARM architectures with a 64-bit port planned, ensuring that two popular and highly capable hypervisors will be available.

The Linux kernel has supported 64-bit ARMv8 architecture since Linux 3.7, and a number of popular Linux distributions have already signaled their support for the architecture including Canonical’s Ubuntu and the Red Hat sponsored Fedora distribution. In fact there is a downloadable, bootable Ubuntu distribution available in anticipation for ARMv8-based processors.

It’s not just operating systems and applications that are available. Developer tools such as the extremely popular open source GCC compiler and the vital GNU C Library (Glibc) have already been ported to the ARMv8 architecture and are available for download. With GCC and Glibc good to go, a solid foundation for developers to target the ARMv8 architecture is forming.

All of this work on both hardware and software should shed some light on just how big ARM processors will be in the data center. AMD, an established enterprise semiconductor vendor, is uniquely placed to ship both 64-bit ARMv8 and 64-bit x86 processors that enable “mixed rack” environments. And thanks to the army of software engineers at AMD, as well as others around the world who have committed significant time and effort, the software ecosystem will be there to support these revolutionary processors. 2014 is set to see the biggest disruption in the data center in over a decade, with AMD again at the center of it.

Lawrence Latif is a blogger and technical communications representative at AMD. His postings are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites, and references to third party trademarks, are provided for convenience and illustrative purposes only. Unless explicitly stated, AMD is not responsible for the contents of such links, and no third party endorsement of AMD or any of its products is implied.

End of AMD’s 64-bit “Seattle” ARM processor brings best of breed hardware and software to the data center [AMD Business blog, Dec 12, 2013]

AMD at ARM Techcon 2013 [Charbax YouTube channel, recorded at the ARM Techcon 2013 (Oct 29-31), published on Dec 25, 2013]

From: Advanced Micro Devices’ CEO Discusses Q3 2013 Results – Earnings Call Transcript [Seeking Alpha, Oct 17, 2013]

Rory Read – President and CEO:

The three step turnaround plan we outlined a year ago to restructure, accelerate and ultimately transform AMD is clearly paying off. We completed the restructuring phase of our plan, maintaining cash at optimal levels and beating our $450 million quarterly operating expense goal in the third quarter. We are now in the second phase of our strategy – accelerating our performance by consistently executing our product roadmap while growing our new businesses to drive a return to profitability and positive free cash flow.

We are also laying the foundation for the third phase of our strategy, as we transform AMD to compete across a set of high growth markets. Our progress on this front was evident in the third quarter as we generated more than 30% of our revenue from our semi-custom and embedded businesses. Over the next two years we will continue to transform AMD to expand beyond a slowing, transitioning PC industry, as we create a more diverse company and look to generate approximately 50% of our revenue from these new high growth markets.

We have strategically targeted that semi-custom, ultra-low power client, embedded, dense server and the professional graphics market where we can offer differentiated products that leverage our APU and graphics IP. Our strategy allows us to continue to invest in the product that will drive growth, while effectively managing operating expenses. …

… Several of our growth businesses passed key milestones in the third quarter. Most significantly, our semi-custom business ramped in the quarter. We successfully shipped millions of units to support Sony and Microsoft, as they prepared to launch their next-generation game consoles. Our game console wins are generating a lot of customer interest, as we demonstrate our ability to design and reliably ramp production on two of the most complex SOCs ever built for high-volume consumer devices. We have several strong semi-custom design opportunities moving through the pipeline as customers look to tap into AMD’s IP, design and integration expertise to create differentiated winning solutions. … it’s our intention to win and mix in a whole set of semicustom offerings as we build out this exciting and important new business.

We made good progress in our embedded business in the third quarter. We expanded our current embedded SOC offering and detailed our plans to be the only company to offer both 64-bit x86 and ARM solutions beginning in 2014. We have developed a strong embedded design pipeline which, we expect, will drive further growth for this business across 2014.

We also continue to make steady progress in another of our growth businesses in the third quarter, as we delivered our fifth consecutive quarter of revenue and share growth in the professional graphics area. We believe we can continue to gain share in this lucrative part of the GPU market, based on our product portfolio, design wins [in place] [ph] and enhanced channel programs.

In the server market, the industry is at the initial stages of a multiyear transition that will fundamentally change the competitive dynamic. Cloud providers are placing a growing importance on how they get better performance from their datacenters while also reducing the physical footprint and power consumption of their server solution.

This will become the defining metric of this industry and will be a key growth driver for the market and the new AMD. AMD is leading this emerging trend in the server market and we are committed to defining a leadership position.

Earlier this quarter, we had a significant public endorsement of our dense server strategy as Verizon announced a high performance public cloud that uses our SeaMicro technology and Opteron processor. We remain on track to introduce new, low-power X86 and 64-bit ARM processors next year and we believe we will offer the industry leading ARM-based servers. …

… Two years ago we were 90% to 95% of our business centered over PCs and we’ve launched the clear strategy to diversify our portfolio, taking our IP, our leadership IP in graphics and CPU, and taking it into adjacent segments where there is high growth for three, five, seven years and stickier opportunities.

We see that as an opportunity to drive 50% or more of our business over that time horizon. And if you look at the results in the third quarter, we are already seeing the benefits of that opportunity with over 30% of our revenue now coming from semi-custom and our embedded businesses.

We see it is an important business in PC, but its time is changing and the go-go era is over. We need to move and attack the new opportunities where the market is going, and that’s what we are doing.

Lisa Su – Senior Vice President and General Manager, Global Business Units:

We are fully top to bottom in 28 nanometer now across all of our products, and we are transitioning to both 20 nanometer and to FinFETs over the next couple of quarters in terms of designs. We will do 20 nanometer first, and then we will go to FinFETs. …

… game console semicustom product is a long life cycle product over five to seven years. Certainly when we look at cost reduction opportunities, one of the important ones is to move technology nodes. So we will in this timeframe certainly move from 28 nanometer to 20 nanometer and now the reason to do that is both for pure die cost savings as well as all the power savings that our customer benefits from. … so expect the cost to go down on a unit basis as we move to 20.

[Regarding] the SeaMicro business, we are very pleased with the pipeline that we have there. Verizon was the first major datacenter win that we can talk about publicly. We have been working that relationship for the last two years. So it’s actually nice to be able to talk about it. We do see it as a major opportunity that will give us revenue potential in 2014. And we continue to see a strong pipeline of opportunities with SeaMicro as more of the datacenter guys are looking at how to incorporate these dense servers into their new cloud infrastructures. …

… As I said the Verizon engagement has lasted over the past two years. So some of the initial deployments were with the Intel processors but we do have significant deployments with AMD Opteron as well. We do see the percentage of Opteron processors increasing because that’s what we’d like to do. …

We’re very excited about the server space. It’s a very good market. It’s a market where there is a lot of innovation and change. In terms of 64-bit ARM, you will see us sampling that product in the first quarter of 2014. That development is on schedule and we’re excited about that. All of the customer discussions have been very positive and then we will combine both the [?x86 and the?] 64-bit ARM chip with our SeaMicro servers that will have full solution as well. You will see SeaMicro plus ARM in 2014.

So I think we view this combination of IP as really beneficial to accelerating the dense server market both on the chip side and then also on the solution side with the customer set.

Amazon’s James Hamilton: Why Innovation Wins [AMD SeaMicro YouTube channel, Nov 12, 2012] video which was included into the Headline News and Events section of Volume 1, December 2012 of The Wave Newsletter from AMD SeaMicro with the following intro:

James Hamilton, VP and Distinguished Engineer at Amazon called AMD’s co-announcement with ARM to develop 64-bit ARM technology-based processors “A great day for the server ecosystem.” Learn why and hear what James had to say about what this means for customers and the broader server industry.

AMD Changes Compute Landscape as the First to Bridge Both x86 and ARM Processors for the Data Center [press release, Oct 29, 2012]

Company to Complement x86-based Offerings with New Processors Based on ARM 64-bit Technology, Starting with Server Market

SUNNYVALE, Calif. —10/29/2012

In a bold strategic move, AMD (NYSE: AMD) announced that it will design 64-bit ARM® technology-based processors in addition to its x86 processors for multiple markets, starting with cloud and data center servers. AMD’s first ARM technology-based processor will be a highly-integrated, 64-bit multicore System-on-a-Chip (SoC) optimized for the dense, energy-efficient servers that now dominate the largest data centers and power the modern computing experience. The first ARM technology-based AMD Opteron™ processor is targeted for production in 2014 and will integrate the AMD SeaMicro Freedom™ supercompute fabric, the industry’s premier high-performance fabric.

AMD’s new design initiative addresses the growing demand to deliver better performance-per-watt for dense cloud computing solutions. Just as AMD introduced the industry’s first mainstream 64-bit x86 server solution with the AMD Opteron processor in 2003, AMD will be the only processor provider bridging the x86 and 64-bit ARM ecosystems to enable new levels of flexibility and drive optimal performance and power-efficiency for a range of enterprise workloads.

“AMD led the data center transition to mainstream 64-bit computing with AMD64, and with our ambidextrous strategy we will again lead the next major industry inflection point by driving the widespread adoption of energy-efficient 64-bit server processors based on both the x86 and ARM architectures,” said Rory Read, president and chief executive officer, AMD. “Through our collaboration with ARM, we are building on AMD’s rich IP portfolio, including our deep 64-bit processor knowledge and industry-leading AMD SeaMicro Freedom supercompute fabric, to offer the most flexible and complete processing solutions for the modern data center.”

“The industry needs to continuously innovate across markets to meet customers’ ever-increasing demands, and ARM and our partners are enabling increasingly energy-efficient computing solutions to address these needs,” said Warren East, chief executive officer, ARM. “By collaborating with ARM, AMD is able to leverage its extraordinary portfolio of IP, including its AMD Freedom supercompute fabric, with ARM 64-bit processor cores to build solutions that deliver on this demand and transform the industry.”

The explosion of the data center has brought with it an opportunity to optimize compute with vastly different solutions. AMD is providing a compute ecosystem filled with choice, offering solutions based on AMD Opteron x86 CPUs, new server-class Accelerated Processing Units (APUs) that leverage Heterogeneous Systems Architecture (HSA), and new 64-bit ARM-based solutions.

This strategic partnership with ARM represents the next phase of AMD’s strategy to drive ambidextrous solutions in emerging mega data center solutions. In March, AMD announced the acquisition of SeaMicro, the leader in high-density, energy-efficient servers. With this announcement, AMD will integrate the AMD SeaMicro Freedom fabric across its leadership AMD Opteron x86- and ARM technology-based processors that will enable hundreds, or even thousands of processor clusters to be linked together to provide the most energy-efficient solutions.

“Over the past decade the computer industry has coalesced around two high-volume processor architectures – x86 for personal computers and servers, and ARM for mobile devices,” observed Nathan Brookwood, research fellow at Insight 64. “Over the next decade, the purveyors of these established architectures will each seek to extend their presence into market segments dominated by the other. The path on which AMD has now embarked will allow it to offer products based on both x86 and ARM architectures, a capability no other semiconductor manufacturer can likely match.”

At an event hosted by AMD in San Francisco, representatives from Amazon, Dell, Facebook and Red Hat participated in a panel discussion on opportunities created by ARM server solutions from AMD. A replay of the event can be found here as of 5 p.m. PDT, Oct. 29.

Supporting Resources

- AMD bridges the x86 and ARM ecosystems for the data center announcement press resources

- Follow AMD on Twitter at @AMD

- Follow the AMD and ARM announcement on Twitter at #AMDARM

- Like AMD on Facebook.

AMD SeaMicro SM15000 with Freedom Fabric Storage [AMD YouTube channel, Sept 11, 2012]

AMD Extends Leadership in Data Center Innovation – First to Optimize the Micro Server for Big Data [press release, Sept 10, 2012]

AMD’s SeaMicro SM15000™ Server Delivers Hyper-efficient Compute for Big Data and Cloud Supporting Five Petabytes of Storage; Available with AMD Opteron™ and Intel® Xeon® “Ivy Bridge”/“Sandy Bridge” Processors

SUNNYVALE, Calif. —9/10/2012

AMD (NYSE: AMD) today announced the SeaMicro SM15000™ server, another computing innovation from its Data Center Server Solutions (DCSS) group that cements its position as the technology leader in the micro server category. AMD’s SeaMicro SM15000 server revolutionizes computing with the invention of Freedom™ Fabric Storage, which extends its Freedom™ Fabric beyond the SeaMicro chassis to connect directly to massive disk arrays, enabling a single ten rack unit system to support more than five petabytes of low-cost, easy-to-install storage. The SM15000 server combines industry-leading density, power efficiency and bandwidth with a new generation of storage technology, enabling a single rack to contain thousands of cores, and petabytes of storage – ideal for big data applications like Apache™ Hadoop™ and Cassandra™ for public and private cloud deployments.

AMD’s SeaMicro SM15000 system is available today and currently supports the Intel® Xeon® Processor E3-1260L (“Sandy Bridge”). In November, it will support the next generation of AMD Opteron™ processors featuring the “Piledriver” core, as well as the newly announced Intel Xeon Processor E3-1265Lv2 (“Ivy Bridge”). In addition to these latest offerings, the AMD SeaMicro fabric technology continues to deliver a key building block for AMD’s server partners to build extremely energy efficient micro servers for their customers.

“Historically, server architecture has focused on the processor, while storage and networking were afterthoughts. But increasingly, cloud and big data customers have sought a solution in which storage, networking and compute are in balance and are shared. In a legacy server, storage is a captive resource for an individual processor, limiting the ability of disks to be shared across multiple processors, causing massive data replication and necessitating the purchase of expensive storage area networking or network attached storage equipment,” said Andrew Feldman, corporate vice president and general manager of the Data Center Server Solutions group at AMD. “AMD’s SeaMicro SM15000 server enables companies, for the first time, to share massive amounts of storage across hundreds of efficient computing nodes in an exceptionally dense form factor. We believe that this will transform the data center compute and storage landscape.”

AMD’s SeaMicro products transformed the data center with the first micro server to combine compute, storage and fabric-based networking in a single chassis. Micro servers deliver massive efficiencies in power, space and bandwidth, and AMD set the bar with its SeaMicro product that uses one-quarter the power, takes one-sixth the space and delivers 16 times the bandwidth of the best-in-class alternatives. With the SeaMicro SM15000 server, the innovative trajectory broadens the benefits of the micro server to storage, solving the most pressing needs of the data center.

Combining the Freedom™ Supercompute Fabric technology with the pioneering Freedom™ Fabric Storage technology enables data centers to provide more than five petabytes of storage with 64 servers in a single ten rack unit (17.5 inch tall) SM15000 system. Once these disks are interconnected with the fabric, they are seen and shared by all servers in the system. This approach provides the benefits typically provided by expensive and complex solutions such as network-attached storage and storage area networking with the simplicity and low cost of direct attached storage.

“AMD’s SeaMicro technology is leading innovation in micro servers and data center compute,” said Zeus Kerravala, founder and principal analyst of ZK Research. “The team invented the micro server category, was the first to bring small-core servers and large-core servers to market in the same system, the first to market with a second-generation fabric, and the first to build a fabric that supports multiple processors and instruction sets. It is not surprising that they have extended the technology to storage. The bringing together of compute and petabytes of storage demonstrates the flexibility of the Freedom Fabric. They are blurring the boundaries of compute, storage and networking, and they have once again challenged the industry with bold innovation.”

Leaders Across the Big Data Community Agree

Dr. Amr Awadallah, CTO and Founder at Cloudera, the category leader that is setting the standard for Hadoop in the enterprise, observes: “The big data community is hungry for innovations that simplify the infrastructure for big data analysis while reducing hardware costs. As we hear from our vast big data partner ecosystem and from customers using CDH and Cloudera Enterprise, companies that are seeking to gain insights across all their data want their hardware vendors to provide low cost, high density, standards-based compute that connects to massive arrays of low cost storage. AMD’s SeaMicro delivers on this promise.”

Eric Baldeschwieler, co-founder and CTO of Hortonworks and a pioneer in Hadoop technology, notes: “Petabytes of low cost storage, hyper-dense energy-efficient compute, connected with a supercompute-style fabric is an architecture particularly well suited for big data analytics and Hortonworks Data Platform. At Hortonworks, we seek to make Apache Hadoop easier to use, consume and deploy, which is in line with AMD’s goal to revolutionize and commoditize the storage and processing of big data. We are pleased to see leaders in the hardware community inventing technology that extends the reach of big data analysis.”

Matt Pfeil, co-founder and VP of customer solutions at DataStax, the leader in real-time mission-critical big data platforms, agrees: “At DataStax, we believe that extraordinary databases, such as Cassandra, running mission-critical applications, can be used by nearly every enterprise. To see AMD’s DCSS group bringing together efficient compute and petabytes of storage over a unified fabric in a single low-cost, energy-efficient solution is enormously exciting. The combination of the SM15000 server and best-in-class database, Cassandra, offer a powerful threat to the incumbent makers of both databases and the expensive hardware on which they reside.”

AMD’s SeaMicro SM15000™ Technology

AMD’s SeaMicro SM15000 server is built around the industry’s first and only second-generation fabric, the Freedom Fabric. It is the only fabric technology designed and optimized to work with Central Processor Units (CPUs) that have both large and small cores, as well as x86 and non-x86 CPUs. Freedom Fabric contains innovative technology including:

SeaMicro IOVT (Input/Output Virtualization Technology), which eliminates all but three components from the SeaMicro motherboard – CPU, DRAM, and the ASIC itself – thereby shrinking the motherboard, while reducing power, cost and space;

SeaMicro TIO™ (Turn It Off) technology, which enables further power optimization on the mini motherboard by turning off unneeded CPU and chipset functions. Together, SeaMicro IOVT and TIO technology produce the smallest and most power efficient motherboards available;

Freedom Supercompute Fabric creates a 1.28 terabits-per-second fabric that ties together 64 of the power-optimized mini-motherboards at low latency and low power with massive bandwidth;

SeaMicro Freedom Fabric Storage, which allows the Freedom Supercompute Fabric to extend out of the chassis and across the data center, linking not just components inside the chassis, but those outside as well.

AMD’s SeaMicro SM15000 Server Details

AMD’s SeaMicro SM15000 server will be available with 64 compute cards, each holding a new custom-designed single-socket octal core 2.0/2.3/2.8 GHz AMD Opteron processor based on the “Piledriver” core, for a total of 512 heavy-weight cores per system or 2,048 cores per rack. Each AMD Opteron processor can support 64 gigabytes of DRAM, enabling a single system to handle more than four terabytes of DRAM and over 16 terabytes of DRAM per rack. AMD’s SeaMicro SM15000 system will also be available with a quad core 2.5 GHz Intel Xeon Processor E3-1265Lv2 (“Ivy Bridge”) for 256 2.5 GHz cores in a ten rack unit system or 1,024 cores in a standard rack. Each processor supports up to 32 gigabytes of memory so a single SeaMicro SM15000 system can deliver up to two terabytes of DRAM and up to eight terabytes of DRAM per rack.
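The per-system and per-rack totals quoted above follow from simple multiplication, and a short Python sketch makes the arithmetic explicit. The figure of four systems per rack is an assumption inferred from the ratio of the quoted numbers (2,048 / 512), and DRAM is counted in decimal units (1 TB = 1,000 GB), which is what makes 4,096 GB "more than four terabytes".

```python
CARDS_PER_SYSTEM = 64   # compute cards per SM15000 (from the release)
SYSTEMS_PER_RACK = 4    # assumption: inferred from 2,048 / 512 cores


def capacity(cores_per_cpu: int, dram_gb_per_cpu: int) -> dict:
    """Compute core and DRAM totals for one system and one rack."""
    system_cores = CARDS_PER_SYSTEM * cores_per_cpu
    system_dram_gb = CARDS_PER_SYSTEM * dram_gb_per_cpu
    return {
        "system_cores": system_cores,
        "rack_cores": system_cores * SYSTEMS_PER_RACK,
        "system_dram_gb": system_dram_gb,
        "rack_dram_gb": system_dram_gb * SYSTEMS_PER_RACK,
    }


# Octal-core Opteron ("Piledriver") with up to 64 GB per processor.
opteron = capacity(cores_per_cpu=8, dram_gb_per_cpu=64)
# Quad-core Xeon E3-1265Lv2 ("Ivy Bridge") with up to 32 GB per processor.
xeon = capacity(cores_per_cpu=4, dram_gb_per_cpu=32)

if __name__ == "__main__":
    print("Opteron:", opteron)
    print("Xeon:   ", xeon)
```

Under these assumptions the Opteron configuration comes to 512 cores and 4,096 GB per system, and the Xeon configuration to 256 cores and 2,048 GB, matching the release.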

AMD’s SeaMicro SM15000 server also contains 16 fabric extender slots, each of which can connect to three different Freedom Fabric Storage arrays with different capacities:

FS 5084-L is an ultra-dense capacity-optimized storage system. It supports up to 84 SAS/SATA 3.5 inch or 2.5 inch drives in 5 rack units for up to 336 terabytes of capacity per-array and over five petabytes per SeaMicro SM15000 system;

FS 2012-L is a capacity-optimized storage system. It supports up to 12 3.5 inch or 2.5 inch drives in 2 rack units for up to 48 terabytes of capacity per-array or up to 768 terabytes of capacity per SeaMicro SM15000 system;

FS 2024-S is a performance-optimized storage system. It supports up to 24 2.5 inch drives in 2 rack units for up to 24 terabytes of capacity per-array or up to 384 terabytes of capacity per SM15000 system.
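The per-system capacities listed for the three arrays can be reproduced with a small Python sketch. It assumes one array attached to each of the 16 fabric extender slots, which is the reading that matches the quoted totals; the implied per-drive size is derived from the quoted figures and is not stated in the release.

```python
FABRIC_EXTENDER_SLOTS = 16  # from the release

# Maximum drive count and capacity per array, as quoted in the release.
ARRAYS = {
    "FS 5084-L": {"drives": 84, "array_tb": 336},
    "FS 2012-L": {"drives": 12, "array_tb": 48},
    "FS 2024-S": {"drives": 24, "array_tb": 24},
}


def per_system_tb(model: str) -> int:
    """Capacity per SM15000, assuming one array per extender slot."""
    return FABRIC_EXTENDER_SLOTS * ARRAYS[model]["array_tb"]


def implied_drive_tb(model: str) -> float:
    """Per-drive capacity implied by the quoted array figures."""
    spec = ARRAYS[model]
    return spec["array_tb"] / spec["drives"]


if __name__ == "__main__":
    for model in ARRAYS:
        print(model, per_system_tb(model), "TB per system,",
              implied_drive_tb(model), "TB per drive (implied)")
```

For the FS 5084-L this gives 5,376 TB, which is the "over five petabytes" figure.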

In summary, AMD’s SeaMicro SM15000 system:

- Stands ten rack units or 17.5 inches tall;

- Contains 64 slots for compute cards for AMD Opteron or Intel Xeon processors;

- Provides up to ten gigabits per-second of bandwidth to each CPU;

- Connects up to 1,408 solid state or hard drives with Freedom Fabric Storage

- Delivers up to 16 10 GbE uplinks or up to 64 1GbE uplinks;

- Runs standard off-the-shelf operating systems including Windows®, Linux, Red Hat and VMware and Citrix XenServer hypervisors.
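Several of the summary figures can be cross-checked against numbers given elsewhere in the release. The Python sketch below does that under stated assumptions: a rack unit is 1.75 inches, the fabric bandwidth is divided evenly across the 64 boards for illustration only, and the drives left over after filling 16 FS 5084-L arrays are computed without the release saying where they reside.

```python
RACK_UNIT_INCHES = 1.75      # standard rack unit height
FABRIC_GBPS = 1280           # 1.28 terabits per second, from the release
BOARDS = 64                  # compute cards per system
EXTENDER_SLOTS = 16          # fabric extender slots
DRIVES_PER_FS5084 = 84       # drives in the largest array
MAX_DRIVES = 1408            # maximum drives quoted in the summary


def chassis_height_inches(rack_units: int) -> float:
    """Height of a chassis of the given size in inches."""
    return rack_units * RACK_UNIT_INCHES


def uplink_gbps(ports: int, gbps_per_port: int) -> int:
    """Aggregate uplink bandwidth for a set of identical ports."""
    return ports * gbps_per_port


# Even split of fabric bandwidth per board (illustrative assumption).
fabric_gbps_per_board = FABRIC_GBPS // BOARDS

# Drives attached through fully populated FS 5084-L arrays.
external_drives = EXTENDER_SLOTS * DRIVES_PER_FS5084
# Remainder of the quoted maximum; its location is not stated here.
remaining_drives = MAX_DRIVES - external_drives

if __name__ == "__main__":
    print(chassis_height_inches(10), "inches")
    print(uplink_gbps(16, 10), "Gbps via 10 GbE uplinks")
    print(uplink_gbps(64, 1), "Gbps via 1 GbE uplinks")
    print(fabric_gbps_per_board, "Gbps of fabric per board")
    print(external_drives, "external drives,", remaining_drives, "remaining")
```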

Availability

AMD’s SeaMicro SM15000 server with Intel’s Xeon Processor E3-1260L “Sandy Bridge” is now generally available in the U.S. and in select international regions. Configurations based on AMD Opteron processors and Intel Xeon Processor E3-1265Lv2 with the “Ivy Bridge” microarchitecture will be available in November, 2012. More information on AMD’s revolutionary SeaMicro family of servers can be found at www.seamicro.com/products.

1. Verizon

Verizon Cloud on AMD’s SeaMicro SM15000 [AMD YouTube channel, Oct 7, 2013]

Verizon Cloud Compute and Verizon Cloud Storage [The Wave Newsletter from AMD, December 2013]

With enterprise adoption of public cloud services at 10 percent, Verizon identified a need for a cloud service that was secure, reliable and highly flexible with enterprise-grade performance guarantees. Large, global enterprises want to take advantage of the agility, flexibility and compelling economics of the public cloud, but the performance and reliability are not up to par for their needs. To fulfill this need, Verizon spent over two years identifying and developing software using AMD’s SeaMicro SM15000, the industry’s first and only programmable server hardware. The new services redefine the benchmarks for public cloud computing and storage performance and security.

Designed specifically for enterprise customers, the new services allow companies to use the same policies and procedures across the enterprise network and the public cloud. The close collaboration has resulted in cloud computing services with unheralded performance level guarantees that are offered with competitive pricing. The new cloud services are backed by the power of Verizon, including global data centers, global IP network and enterprise-grade managed security services. The performance and security innovations are expected to accelerate public cloud adoption by the enterprise for their mission critical applications.

Verizon Selects AMD’s SeaMicro SM15000 for Enterprise Class Services: Verizon Cloud Compute and Verizon Cloud Storage [AMD-Seamicro press release, Oct 7, 2013]

Verizon and AMD create technology that transforms the public cloud, delivering the industry’s most advanced cloud capabilities

SUNNYVALE, Calif. —10/7/2013

AMD (NYSE: AMD) today announced that Verizon is deploying SeaMicro SM15000™ servers for its new global cloud platform and cloud-based object storage service, whose public beta was recently announced. AMD’s SeaMicro SM15000 server links hundreds of cores together in a single system using a fraction of the power and space of traditional servers. To enable Verizon’s next generation solution, technology has been taken one step further: Verizon and AMD co-developed additional hardware and software technology on the SM15000 server that provides unprecedented performance and best-in-class reliability backed by enterprise-level service level agreements (SLAs). The combination of these technologies co-developed by AMD and Verizon ushers in a new era of enterprise-class cloud services by enabling a higher level of control over security and performance SLAs. With this technology underpinning the new Verizon Cloud Compute and Verizon Cloud Storage, enterprise customers can for the first time confidently deploy mission-critical systems in the public cloud.

“We reinvented the public cloud from the ground up to specifically address the needs of our enterprise clients,” said John Considine, chief technology officer at Verizon Terremark. “We wanted to give them back control of their infrastructure – providing the speed and flexibility of a generic public cloud with the performance and security they expect from an enterprise-grade cloud. Our collaboration with AMD enabled us to develop revolutionary technology, and it represents the backbone of our future plans.”

As part of its joint development, AMD and Verizon co-developed hardware and software to reserve, allocate and guarantee application SLAs. AMD’s SeaMicro Freedom™ fabric-based SM15000 server delivers the industry’s first and only programmable server hardware that includes a high bandwidth, low latency programmable interconnect fabric, and programmable data and control plane for both network and storage traffic. Leveraging AMD’s programmable server hardware, Verizon developed unique software to guarantee and deliver reliability, unheralded performance guarantees and SLAs for enterprise cloud computing services.

“Verizon has a clear vision for the future of the public cloud services—services that are more flexible, more reliable and guaranteed,” said Andrew Feldman, corporate vice president and general manager, Server, AMD. “The technology we developed turns the cloud paradigm upside down by creating a service that an enterprise can configure and control as if the equipment were in its own data center. With this innovation in cloud services, I expect enterprises to migrate their core IT services and mission critical applications to Verizon’s cloud services.”

“The rapid, reliable and scalable delivery of cloud compute and storage services is the key to competing successfully in any cloud market from infrastructure, to platform, to application; and enterprises are constantly asking for more as they alter their business models to thrive in a mobile and analytic world,” said Richard Villars, vice president, Datacenter & Cloud at IDC. “Next generation integrated IT solutions like AMD’s SeaMicro SM15000 provide a flexible yet high-performance platform upon which companies like Verizon can use to build the next generation of cloud service offerings.”

Innovative Verizon Cloud Capabilities on AMD’s SeaMicro SM15000 Server Industry Firsts

Verizon leveraged the SeaMicro SM15000 server’s ability to disaggregate server resources to create a cloud optimized for computing and storage services. Verizon and AMD’s SeaMicro engineers worked for over two years to create a revolutionary public cloud platform with enterprise class capabilities.

These new capabilities include:

- Virtual machine server provisioning in seconds, a fraction of the time of a legacy public cloud;

- Fine-grained server configuration options that match real life requirements, not just small, medium, large sizing, including processor speed (500 MHz to 2,000 MHz) and DRAM (0.5 GB increments) options;

- Shared disks across multiple server instances versus requiring each virtual machine to have its own dedicated drive;

- Defined storage quality of service by specifying performance up to 5,000 IOPS to meet the demands of the application being deployed, compared to best-effort performance;

- Consistent network security policies and procedures across the enterprise network and the public cloud;

- Strict traffic isolation, data encryption, and data inspection with full featured firewalls that achieve Department of Defense and PCI compliance levels;

- Guaranteed network performance for every virtual machine with reserved network performance up to 500 Mbps compared to no guarantees in many other public clouds.
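To illustrate how the fine-grained limits in the list above could be expressed, here is a hypothetical Python sketch that validates a virtual machine request against them. The `VmRequest` type and `validate` function are invented for illustration and do not represent Verizon's or AMD's actual interface; only the numeric bounds come from the release.

```python
from dataclasses import dataclass

# Bounds quoted in the release.
CPU_MHZ_RANGE = (500, 2000)
DRAM_STEP_GB = 0.5
MAX_STORAGE_IOPS = 5000
MAX_NETWORK_MBPS = 500


@dataclass
class VmRequest:
    """Hypothetical request shape; not an actual Verizon Cloud type."""
    cpu_mhz: int
    dram_gb: float
    storage_iops: int
    network_mbps: int


def validate(req: VmRequest) -> list:
    """Return a list of problems; an empty list means the request fits."""
    problems = []
    if not CPU_MHZ_RANGE[0] <= req.cpu_mhz <= CPU_MHZ_RANGE[1]:
        problems.append("cpu_mhz outside 500-2000 MHz")
    # DRAM must be a positive multiple of the 0.5 GB increment.
    steps = req.dram_gb / DRAM_STEP_GB
    if req.dram_gb <= 0 or steps != int(steps):
        problems.append("dram_gb not a positive multiple of 0.5 GB")
    if not 0 < req.storage_iops <= MAX_STORAGE_IOPS:
        problems.append("storage_iops outside 1-5000")
    if not 0 < req.network_mbps <= MAX_NETWORK_MBPS:
        problems.append("network_mbps outside 1-500")
    return problems


if __name__ == "__main__":
    print(validate(VmRequest(1200, 2.5, 3000, 250)))
    print(validate(VmRequest(2400, 1.3, 3000, 250)))
```

Returning a list of problems rather than raising on the first one lets a caller report every out-of-range setting at once.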

The public beta for Verizon Cloud will launch in the fourth quarter. Companies interested in becoming a beta customer can sign up through the Verizon Enterprise Solutions website: www.verizonenterprise.com/verizoncloud.

AMD’s SeaMicro SM15000 Server

AMD’s SeaMicro SM15000 system is the highest-density, most energy-efficient server in the market. In 10 rack units, it links 512 compute cores, 160 gigabits of I/O networking, and more than five petabytes of storage with a 1.28 terabit-per-second high-performance supercompute fabric, called Freedom™ Fabric. The SM15000 server eliminates top-of-rack switches, terminal servers, hundreds of cables and thousands of unnecessary components for a more efficient and simple operational environment.

AMD’s SeaMicro server product family currently supports the next generation AMD Opteron™ (“Piledriver”) processor, Intel® Xeon® E3-1260L (“Sandy Bridge”) and E3-1265Lv2 (“Ivy Bridge”) and Intel® Atom™ N570 processors. The SeaMicro SM15000 server also supports the Freedom Fabric Storage products, enabling a single system to connect with more than five petabytes of storage capacity in two racks. This approach delivers the benefits of expensive and complex solutions such as network attached storage (NAS) and storage area networking (SAN) with the simplicity and low cost of direct attached storage.

For more information on the Verizon Cloud implementation, please visit: www.seamicro.com/vzcloud.

About AMD

AMD (NYSE: AMD) designs and integrates technology that powers millions of intelligent devices, including personal computers, tablets, game consoles and cloud servers that define the new era of surround computing. AMD solutions enable people everywhere to realize the full potential of their favorite devices and applications to push the boundaries of what is possible. For more information, visit www.amd.com.

correction…Verizon is not using OpenStack, but they are using our hardware.

@cloud_attitude

2. OpenStack

OpenStack 101 – What Is OpenStack? [Rackspace YouTube channel, Jan 14, 2013]

OpenStack: The Open Source Cloud Operating System

- OpenStack Shared Services (identity, image, telemetry, orchestration): code-named Keystone (OpenStack Identity), code-named Glance (OpenStack Image Service), code-named Ceilometer (OpenStack Metering), code-named Heat (OpenStack Orchestration)

- Compute: code-named Nova

- Networking: code-named Neutron; the original code name, Quantum, had to be phased out in April 2013 and was replaced by Neutron in June 2013

- Storage: code-named Cinder (OpenStack Block Storage), code-named Swift (OpenStack Object Storage)

- Projects incubated during the upcoming Icehouse release cycle, which started on Nov 7, 2013, with the release planned for April 17, 2014:

- Database Service (Trove) – https://wiki.openstack.org/wiki/Trove

- Bare Metal (Ironic) – https://wiki.openstack.org/wiki/Ironic

- Queue Service (Marconi) – https://wiki.openstack.org/wiki/Marconi

- Data Processing (Savanna) – https://wiki.openstack.org/wiki/Savanna
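The component list above can be restated as a small lookup table. This is only an illustrative sketch: the dictionary names, the `code_name` helper and the "integrated"/"incubated" labels are assumptions made for this example, not part of any OpenStack API.

```python
# Service-to-code-name mapping, as listed above (OpenStack, late 2013).
# The variable names and status labels are hypothetical, for illustration only.
INTEGRATED = {
    "Identity": "Keystone",
    "Image Service": "Glance",
    "Metering": "Ceilometer",
    "Orchestration": "Heat",
    "Compute": "Nova",
    "Networking": "Neutron",  # called Quantum until mid-2013
    "Block Storage": "Cinder",
    "Object Storage": "Swift",
}

INCUBATED_ICEHOUSE = {
    "Database Service": "Trove",
    "Bare Metal": "Ironic",
    "Queue Service": "Marconi",
    "Data Processing": "Savanna",
}


def code_name(service):
    """Return (code name, status) for a service listed above."""
    if service in INTEGRATED:
        return INTEGRATED[service], "integrated"
    if service in INCUBATED_ICEHOUSE:
        return INCUBATED_ICEHOUSE[service], "incubated"
    raise KeyError("unknown service: %s" % service)


if __name__ == "__main__":
    for name in ("Compute", "Networking", "Bare Metal"):
        print(name, "->", code_name(name))
```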

Why OpenStack? [The Wave Newsletter from AMD, December 2013]

OpenStack continues to gain momentum in the market as more and more large, established technology and service companies move from evaluation to deployment. But why has OpenStack become so popular? In this issue, we discuss the business drivers behind the widespread adoption and why AMD’s SeaMicro SM15000 server is the industry’s best choice for a successful OpenStack deployment. If you’re considering OpenStack, learn about the options and hear winning strategies from experts featured in our most recent OpenStack webcasts. And in case you missed it, read about AMD’s exciting collaboration with Verizon enabling them to offer enterprise-caliber cloud services.

OpenStack the SeaMicro SM15000 – From Zero to 2,048 Cores in Less than One Hour [The Wave Newsletter from AMD, March 2013]

The SeaMicro SM15000 is optimized for OpenStack, a solution that is being adopted by both public and private cloud operators. Red 5 Studios recently deployed OpenStack on a 48-foot bus to power their new massive multiplayer online game Firefall. The SM15000 uniquely excels for object storage, providing more than 5 petabytes of direct attached storage in two data center racks.

State of the Stack [OpenStack Foundation YouTube channel, recorded on Nov 8 under official title “Stack Debate: Understanding OpenStack’s Future”, published on Nov 9, 2013]

The biggest issue raised there about the OpenStack project, which “started without a benevolent dictator and/or architect”, was networking (watch from [6:40]): “The worst architectural decision you can make is stay with default networking for a production system because the default networking model in OpenStack is broken for use at scale”.

Randy Bias later summarized that issue in Neutron in Production: Work in Progress or Ready for Prime Time? [Cloudscaling blog, Dec 6, 2013] as:

Ultimately, it’s unclear whether all networking functions ever will be modeled behind the Neutron API with a bunch of plug-ins. That’s part of the ongoing dialogue we’re having in the community about what makes the most sense for the project’s future.

The bottom-line consensus was that Neutron is a work in progress. Vanilla Neutron is not ready for production, so you should get a vendor if you need to move into production soon.

AMD’s SeaMicro SM15000 Is the First Server to Provide Bare Metal Provisioning to Scale Massive OpenStack Compute Deployments [press release, Nov 5, 2013]

Provides Foundation to Leverage OpenStack Compute for Large Networks of Virtualized and Bare Metal Servers

SUNNYVALE, Calif. and Hong Kong, OpenStack Summit —11/5/2013

AMD (NYSE: AMD) today announced that the SeaMicro SM15000™ server supports bare metal features in OpenStack® Compute. AMD’s SeaMicro SM15000 server is ideally suited for massive OpenStack deployments by integrating compute, storage and networking into a 10 rack unit system. The system is built around the Freedom™ fabric, the industry’s premier supercomputing fabric for scale out data center applications. The Freedom fabric disaggregates compute, storage and network I/O to provide the most flexible, scalable and resilient data center infrastructure in the industry. This allows customers to match the compute performance, storage capacity and networking I/O to their application needs. The result is an adaptive data center where any server can be mapped to any hard disk/SSD or network I/O to expand capacity or recover from a component failure.

“OpenStack Compute’s bare metal capabilities provide the scalability and flexibility to build and manage large-scale public and private clouds with virtualized and dedicated servers,” said Dhiraj Mallick, corporate vice president and general manager, Data Center Server Solutions, at AMD. “The SeaMicro SM15000 server’s bare metal provisioning capabilities should simplify enterprise adoption of OpenStack and accelerate mass deployments since not all work loads are optimized for virtualized environments.”

Bare metal computing provides more predictable performance than a shared server environment using virtual servers. In a bare metal environment there are no delays caused by different virtual machines contending for shared resources, since the entire server’s resources are dedicated to a single user instance. In addition, in a bare metal environment the performance penalty imposed by the hypervisor is eliminated, allowing the application software to make full use of the processor’s capabilities.

In addition to leading in bare metal provisioning, AMD’s SeaMicro SM15000 server provides the ability to boot and install a base server image from a central server for massive OpenStack deployments. A cloud image containing the KVM, the OpenStack Compute image and other applications can be configured by the central server. The coordination and scheduling of this workflow can be managed by Heat, the orchestration application that manages the entire lifecycle of an OpenStack cloud for bare metal and virtual machines.
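As a rough sketch of what such an orchestrated rollout might look like, the snippet below builds a dictionary shaped like a Heat Orchestration Template (HOT) that requests several servers from one base image. The press release does not show AMD's actual templates, so the image name `compute-base-image`, the flavor name `baremetal`, the resource names and the `build_template` helper are all hypothetical. The output is rendered as JSON only for inspection; a real template would normally be written in YAML.

```python
import json


def build_template(node_count, image="compute-base-image", flavor="baremetal"):
    """Build a HOT-style dict with one OS::Nova::Server resource per node.

    The image and flavor defaults are placeholders, not real SeaMicro names.
    """
    resources = {}
    for i in range(node_count):
        resources["compute_node_%d" % i] = {
            "type": "OS::Nova::Server",
            "properties": {
                "name": "compute-%d" % i,
                "image": image,    # base image served from the central server
                "flavor": flavor,  # assumed flavor mapped to bare metal nodes
            },
        }
    return {
        "heat_template_version": "2013-05-23",
        "description": "Illustrative sketch: boot nodes from one base image",
        "resources": resources,
    }


template = build_template(4)
print(json.dumps(template, indent=2, sort_keys=True))
```

Generating the resources in a loop keeps the per-node definition in one place, which matters when the same base image is stamped onto hundreds of nodes.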

Supporting Resources

- Visit the AMD SeaMicro site

- Follow AMD on Twitter @seamicroinc

Scalable Fabric-based Object Storage with the SM15000 [The Wave Newsletter from AMD, March 2013]

The SeaMicro SM15000 is changing the economics of deploying object storage, delivering the storage of unprecedented amounts of data while using 1/2 the power and 1/3 the space of traditional servers.

SwiftStack with OpenStack Swift Overview [SwiftStack YouTube channel, Oct 4, 2012]

AMD’s SeaMicro SM15000 Server Achieves Certification for Rackspace Private Cloud, Validated for OpenStack [press release, Jan 30, 2013]

Providing unprecedented computing efficiency for “Nova in a Box” and object storage capacity for “Swift in a Rack”

3. Red Hat

OpenStack + SM15000 Server = 1,000 Virtual Machines for Red Hat [The Wave Newsletter from AMD, June 2013]

Red Hat deploys one SM15000 server to quickly and cost effectively build out a high capacity server cluster to meet the growing demands for OpenShift demonstrations and to accelerate sales. Red Hat OpenShift, which runs on Red Hat OpenStack, is Red Hat’s cloud computing Platform-as-a-Service (PaaS) offering. The service provides built-in support for nearly every open source programming language, including Node.js, Ruby, Python, PHP, Perl, and Java. OpenShift can also be expanded with customizable modules that allow developers to add other languages.

Red Hat Enterprise Linux OpenStack Platform: Community-invented, Red Hat-hardened [RedHatCloud YouTube channel, Aug 5, 2013]

AMD’s SeaMicro SM15000 Server Achieves Certification for Red Hat OpenStack [press release, June 12, 2013]

BOSTON – Red Hat Summit —6/12/2013

AMD (NYSE: AMD) today announced that its SeaMicro SM15000™ server is certified for Red Hat® OpenStack, and that the company has joined the Red Hat OpenStack Cloud Infrastructure Partner Network. The certification ensures that the SeaMicro SM15000 server provides a rigorously tested platform for organizations building private or public cloud Infrastructure as a Service (IaaS), based on the security, stability and support available with Red Hat OpenStack. AMD’s SeaMicro solutions for OpenStack include “Nova in a Box” and “Swift in a Rack” reference architectures that have been validated to ensure consistent performance, supportability and compatibility.

The SeaMicro SM15000 server integrates compute, storage and networking into a compact, 10 RU (17.5 inches) form factor with 1.28 Tbps supercompute fabric. The technology enables users to install and configure thousands of computing cores more efficiently than any other server. Complex time-consuming tasks are completed within minutes due to the integration of compute, storage and networking. Operational fire drills, such as setting up servers on short notice, manually configuring hundreds of machines and re-provisioning the network to optimize traffic are all handled through a single, easy-to-use management interface.

“AMD has shown leadership in providing a uniquely differentiated server for OpenStack deployments, and we are excited to have them as a seminal member of the Red Hat OpenStack Cloud Infrastructure Partner Network,” said Mike Werner, senior director, ISV and Developer Ecosystems at Red Hat. “The SeaMicro server is an example of incredible innovation, and I am pleased that our customers will have the SM15000 system as an option for energy-efficient, dense computing as part of the Red Hat Certified Solution Marketplace.”

AMD’s SeaMicro SM15000 system is the highest-density, most energy-efficient server in the market. In 10 rack units, it links 512 compute cores, 160 gigabits of I/O networking and more than five petabytes of storage with a 1.28 Terabits-per-second high-performance supercompute fabric, called Freedom™ Fabric. The SM15000 server eliminates top-of-rack switches, terminal servers, hundreds of cables and thousands of unnecessary components for a more efficient and simple operational environment.
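The density figures quoted above reduce to simple per-rack-unit arithmetic. The sketch below uses only the numbers in the press release plus two assumptions that are not from the release: the standard 1.75-inch rack unit and a 42U rack.

```python
# Figures from the press release
CHASSIS_RU = 10      # rack units per SM15000
CORES = 512          # compute cores per chassis
IO_GBPS = 160        # I/O networking, gigabits per second
FABRIC_GBPS = 1280   # 1.28 Tbps Freedom Fabric, expressed in Gbps

# Assumptions (not from the release)
RACK_UNIT_INCHES = 1.75  # standard rack unit height
ASSUMED_RACK_RU = 42     # common full-height rack

chassis_height_in = CHASSIS_RU * RACK_UNIT_INCHES  # 17.5 inches
cores_per_ru = CORES / CHASSIS_RU                  # 51.2 cores per RU
io_gbps_per_ru = IO_GBPS / CHASSIS_RU              # 16.0 Gbps per RU
fabric_gbps_per_core = FABRIC_GBPS / CORES         # 2.5 Gbps per core

chassis_per_rack = ASSUMED_RACK_RU // CHASSIS_RU   # 4 chassis fit in 42U
cores_per_rack = chassis_per_rack * CORES          # 2048 cores per rack

print("chassis height: %.1f in" % chassis_height_in)
print("cores per RU: %.1f" % cores_per_ru)
print("I/O per RU: %.1f Gbps" % io_gbps_per_ru)
print("fabric bandwidth per core: %.1f Gbps" % fabric_gbps_per_core)
print("cores per 42U rack: %d" % cores_per_rack)
```

Under the 42U assumption, four chassis give 2,048 cores per rack, which happens to match the core count in the March 2013 newsletter headline quoted earlier.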

“We are excited to be a part of the Red Hat OpenStack Cloud Infrastructure Partner Network because the company has a strong track record of bridging the communities that create open source software and the enterprises that use it,” said Dhiraj Mallick, corporate vice president and general manager, Data Center Server Solutions, AMD. “As cloud deployments accelerate, AMD’s certified SeaMicro solutions ensure enterprises are able to realize the benefits of increased efficiency and simplified operations, providing them with a competitive edge and the lowest total cost of ownership.”

AMD’s SeaMicro server product family currently supports the next-generation AMD Opteron™ (“Piledriver”) processor, Intel® Xeon® E3-1260L (“Sandy Bridge”) and E3-1265Lv2 (“Ivy Bridge”) and Intel® Atom™ N570 processors. The SeaMicro SM15000 server also supports the Freedom Fabric Storage products, enabling a single system to connect with more than five petabytes of storage capacity in two racks. This approach delivers the benefits of expensive and complex solutions such as network attached storage (NAS) and storage area networking (SAN) with the simplicity and low cost of direct attached storage.

4. Ubuntu

Ubuntu Server certified hardware SeaMicro [one of Ubuntu certification pages]

Canonical works closely with SeaMicro to certify Ubuntu on a range of their hardware.

The following are all Certified. More and more devices are being added with each release, so don’t forget to check this page regularly.

Ubuntu on SeaMicro SM15000-OP | Ubuntu [Sept 1, 2013]

Ubuntu on SeaMicro SM15000-XN | Ubuntu [Oct 1, 2013]

Ubuntu on SeaMicro SM15000-XH | Ubuntu [Dec 18, 2013]

Ubuntu OIL announced for broadest set of cloud infrastructure options [Ubuntu Insights, Nov 5, 2013]

Today at the OpenStack Design Summit in Hong Kong, we announced the Ubuntu OpenStack Interoperability Lab (Ubuntu OIL). The programme will test and validate the interoperability of hardware and software in a purpose-built lab, giving Ubuntu OpenStack users the reassurance and flexibility of choice.

We’re launching the programme with many significant partners onboard, such as Dell, EMC, Emulex, Fusion-io, HP, IBM, Inktank/Ceph, Intel, LSI, Open Compute, SeaMicro and VMware.

The OpenStack ecosystem has grown rapidly giving businesses access to a huge selection of components for their cloud environments. Most will expect that, whatever choices they make or however complex their requirements, the environment should ‘just work’, where any and all components are interoperable. That’s why we created the Ubuntu OpenStack Interoperability Lab.

Ubuntu OIL is designed to offer integration and interoperability testing as well as validation to customers, ISVs and hardware manufacturers. Ecosystem partners can test their technologies’ interoperability with Ubuntu OpenStack and a range of software and hardware, ensuring they work together seamlessly as well as with existing processes and systems. It means that manufacturers can get to market faster and with less cost, while users can minimise integration efforts required to connect Ubuntu OpenStack with their infrastructure.

Ubuntu is about giving customers choice. Over the last releases, we’ve introduced new hypervisors, and software-defined networking (SDN) stacks, and capabilities for workloads running on different types of public cloud options. Ubuntu OIL will test all of these options as well as other technologies to ensure Ubuntu OpenStack offers the broadest set of validated and supported technology options compatible with user deployments. Ubuntu OIL will test and validate for all supported and future releases of Ubuntu, Ubuntu LTS and OpenStack.

Involvement in the lab is through our Canonical Partner Programme. New partners can sign up here.

Learn more about Ubuntu OIL

5. Big Data, Hadoop

Storing Big Data – The Rise of the Storage Cloud [The Wave Newsletter from AMD, December 2012]

Data is everywhere and growing at unprecedented rates. Each year, there are over one hundred million new Internet users generating thousands of terabytes of data every day. Where will all this data be stored?

AMD’s SeaMicro SM15000 Achieves Certification for CDH4, Cloudera’s Distribution Including Apache Hadoop Version 4 [press release, March 20, 2013]

“Hadoop-in-a-Box” package accelerates deployments by providing 512 cores and over five petabytes in two racks

The Hidden Truth: Hadoop is a Hardware Investment [The Wave Newsletter from AMD, September 2013]

Apache Hadoop is a leading software application for analyzing big data, but its performance and reliability are tied to a company’s underlying server architecture. Learn how AMD’s SeaMicro SM15000™ server compares with other minimum scale deployments.

Intel CEO (Krzanich) and president (James) combo to assure manufacturing and next-gen cross-platform lead